Enotria: The Last Song, developed by Jyamma Games, is a Soulslike action RPG set in a world inspired by Italian folklore and traditional theatre. At its core, the game blends melee combat and magic attacks with music: nearly every sound effect is built from musical instruments. This approach presented an interesting challenge during the mixing phase.

In this article, Audio Director Aram Shahbazians and Lead Sound Designer Camilla Coccia break down how a clear bus structure and careful use of RTPCs in Wwise helped them control Enotria’s combat audio feedback, keeping every attack, spell, and parry readable.

The Audio Direction

From the start, Enotria: The Last Song was built on the idea of intertwining music and sound. We made it a rule that most sound effects should originate from musical sources, using traditional Italian instruments as a base. Weapon sounds, magic, and ambiences were all designed with strongly tonal elements, giving the game a unique sound.

This approach, however, came with challenges, especially during the mix. The fast-paced combat meant that multiple tonal sounds could trigger at once, increasing the risk of clashing frequencies and masking important feedback. We needed to account for these factors early in production and structure the Wwise project in a way that would give us as much control as possible over each audio event.

Content Development

Enotria’s combat is not scripted and can vary drastically, with multiple enemies, attacks, and events occurring in any order and at any time. This led us to rely heavily on the tools in Wwise to manage priorities and dynamically tune each piece of feedback, keeping the mix as clear as possible.

We focused on dividing the assets into macro-categories from the very beginning, testing which frequency range each sound might occupy and how often it might be triggered together with other sounds. This allowed us to prepare the assets early on and test them in gameplay prototypes to define high-level design guidelines.

For example, player damage sounds were intentionally placed in the midrange, making them sit clearly in the mix without overlapping with enemy damage feedback. Each weapon class and magic spell was designed with its own tonal identity, making sure that they occupied separate frequency spaces. Our goal was to have each attack instantly identifiable through sound, even with your eyes closed.

Music also required careful balancing, especially in boss fights. Some of the traditional instruments we used had rich, noisy frequency content, so we went through multiple mix passes to get them under control.

Designing a Scalable Bus Structure

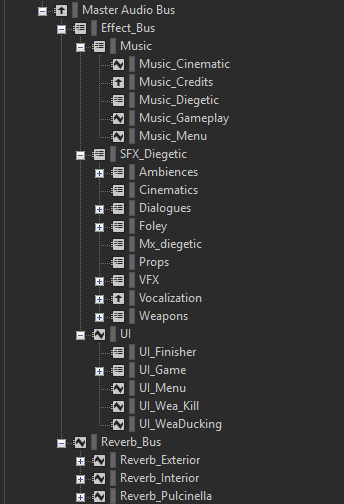

To keep the mix under control, organizing the project with a solid bus structure was one of our main priorities. With hundreds of assets triggering during combat, we needed to set up a scalable bus system. We divided the buses into main categories, mirroring the early categorization of the assets, and subcategories that could be expanded when needed.

Music

The Music bus helped us manage different RTPCs depending on the type of music playing. For example, Music_Gameplay drives a sidechain RTPC that lowers the ambiences during boss fights to improve clarity. Diegetic music was not routed to this bus because it required a specific setup which, in our case, was more convenient to keep in the general SFX bus.
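To make the meter-to-RTPC relationship concrete, the Python sketch below models how a sidechain curve could translate the level measured on a music bus into a volume offset for the ambience bus. In Wwise the curve is authored in the project and evaluated by the sound engine; the curve points and the `duck_ambience` helper are hypothetical and only illustrate a clamped piecewise-linear curve.

```python
from bisect import bisect_right

# Hypothetical RTPC curve: (music meter level in dBFS, ambience volume offset in dB).
# Points must be sorted by x; inputs outside the range are clamped to the end values.
AMBIENCE_DUCK_CURVE = [(-48.0, 0.0), (-24.0, -3.0), (-12.0, -8.0)]


def evaluate_curve(points, x):
    """Linearly interpolate a piecewise-linear curve, clamping at both ends."""
    xs = [p[0] for p in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)  # points[i - 1].x <= x < points[i].x
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    t = (x - x0) / (x1 - x0)
    return y0 + t * (y1 - y0)


def duck_ambience(music_meter_db):
    """Volume offset (dB) to apply to the ambience bus for a given music level."""
    return evaluate_curve(AMBIENCE_DUCK_CURVE, music_meter_db)


print(duck_ambience(-60.0))  # quiet music: no ducking
print(duck_ambience(-18.0))  # between the -24 dB and -12 dB points
```

The clamping keeps the offset bounded when the meter reading falls outside the authored range, so a very loud music peak can never push the ambience below the deepest point of the curve.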

SFX

Given the sheer variety of enemies, items, weapons, spells, and so on, we divided the SFX bus into broad categories that helped define the priority and behavior of each element. As the game evolved during development, we expanded this structure by introducing sub-buses to better reflect our needs.

The Foley bus was split into sub-buses for player, NPC, and boss foley. Boss foley was critical during boss fights, especially against enemies that engage in close, physical combat. To manage these sounds, we further split the boss foley bus into individual buses for each boss that required custom treatment. Routing their foley this way allowed us to create dedicated RTPCs that carved out space for it when needed.
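The routing rule above can be sketched as a small lookup, assuming hypothetical bus paths and boss identifiers: only bosses that need custom treatment get a dedicated bus, and every other boss falls back to the shared boss foley bus. In Wwise this routing is authored in the project rather than computed at runtime; the sketch only shows why a boss-specific RTPC can then target one bus without touching the others.

```python
# Hypothetical bus paths; only bosses that needed custom treatment get their own bus.
BOSS_FOLEY_BUS = "Master/SFX/Foley/Boss"
CUSTOM_BOSS_BUSES = {
    "boss_a": "Master/SFX/Foley/Boss/Boss_A",
    "boss_b": "Master/SFX/Foley/Boss/Boss_B",
}
FOLEY_BUSES = {
    "player": "Master/SFX/Foley/Player",
    "npc": "Master/SFX/Foley/NPC",
}


def foley_output_bus(source_kind, boss_id=None):
    """Return the bus a foley sound should be routed to."""
    if source_kind == "boss":
        # Fall back to the shared boss bus when no custom bus exists.
        return CUSTOM_BOSS_BUSES.get(boss_id, BOSS_FOLEY_BUS)
    return FOLEY_BUSES[source_kind]


print(foley_output_bus("boss", "boss_a"))  # dedicated bus
print(foley_output_bus("boss", "boss_c"))  # shared boss foley bus
print(foley_output_bus("player"))
```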

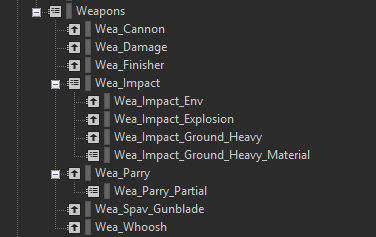

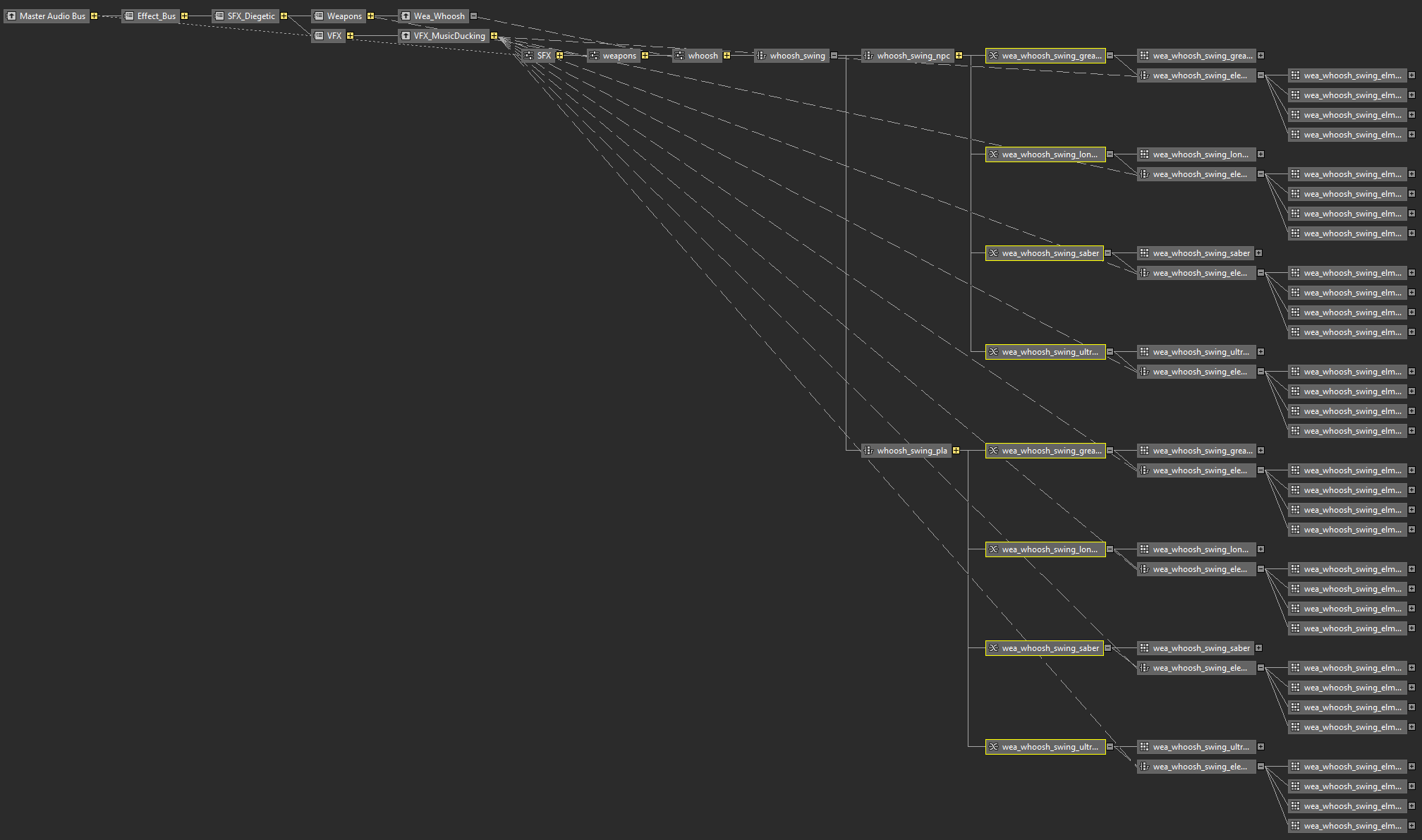

Weapons were the category where we spent the most time iterating on and reorganizing the bus system. The game featured over 100 weapons, organized into eight classes: longsword, greatsword, polearm, morningstar, saber, ultra-greatsword, ultra-hammer, and ranged.

Our starting point was a generic subdivision into melee Whoosh, Damage, Finisher, Parry, Impact and custom firearms. Due to the number of different weapons and the variety of surface materials, we used the following sub-categorization for the Impacts:

- Environment Impacts: all the sounds triggered when a weapon simply collides with a surface, managed for each weapon type by a system of switch containers covering different materials (dirt, rock, stone, wood, water).

- Explosions: this bus was used for special weapons like grenades.

- Heavy Ground Impacts: a bus used for all the ground impacts performed with heavy or special attacks.

- Material of the Heavy Ground Impacts: a bus for the surface-material layers of all the ground impacts performed with heavy or special attacks. This was necessary because these sounds had longer tails that affected the sidechains too much; routing them to a separate bus gave us more granular control over them.
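The impact categories above can be sketched as a switch lookup plus bus routing. This is a minimal Python model under stated assumptions: the sound and bus names are hypothetical, and the fallback material stands in for a switch container's default switch. Heavy ground impacts return two layers so that the long-tailed material layer lands on its own bus.

```python
# Hypothetical sketch of a per-weapon-type material switch with a default switch.
MATERIALS = ("dirt", "rock", "stone", "wood", "water")
DEFAULT_MATERIAL = "stone"  # assumed default, like a switch container's default switch


def resolve_material(material):
    """Unknown surfaces fall back to the default material."""
    return material if material in MATERIALS else DEFAULT_MATERIAL


def environment_impact(weapon_type, material):
    """(sound name, bus) for a plain weapon-on-surface collision."""
    return f"Impact_{weapon_type}_{resolve_material(material)}", "Environment_Impacts"


def heavy_ground_impact(weapon_type, material):
    """Heavy impacts split into a punch layer and a long-tailed material layer,
    each routed to its own bus so the material tail can be treated separately."""
    return [
        (f"HeavyImpact_{weapon_type}", "Heavy_Ground_Impacts"),
        (f"HeavyImpactMat_{weapon_type}_{resolve_material(material)}",
         "Heavy_Ground_Impacts_Material"),
    ]


print(environment_impact("greatsword", "wood"))
print(heavy_ground_impact("ultra-hammer", "metal"))  # unknown surface -> default
```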

UI

The UI bus didn’t need as many sub-buses as the weapons, but to keep enough control over the different types of UI feedback we defined separate buses for Finisher UI, Game UI, and Menu UI, a special bus for UI sounds that apply a sidechain to the weapons, and a bus for the “enemy slain” feedback. The latter is a special extra-diegetic sound that plays every time an enemy is dealt the final killing blow, and it must be audible under all circumstances.

Reverb

We kept the reverb buses as simple as possible, splitting them into exterior, interior, and a special reverb for Pulcinella playing his mandolin, which is activated when the player rests at the bonfire.

Making Room: Sidechaining and Prioritization

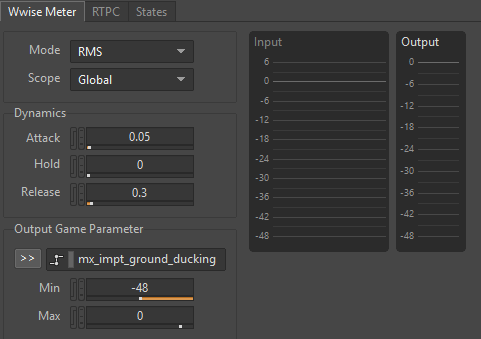

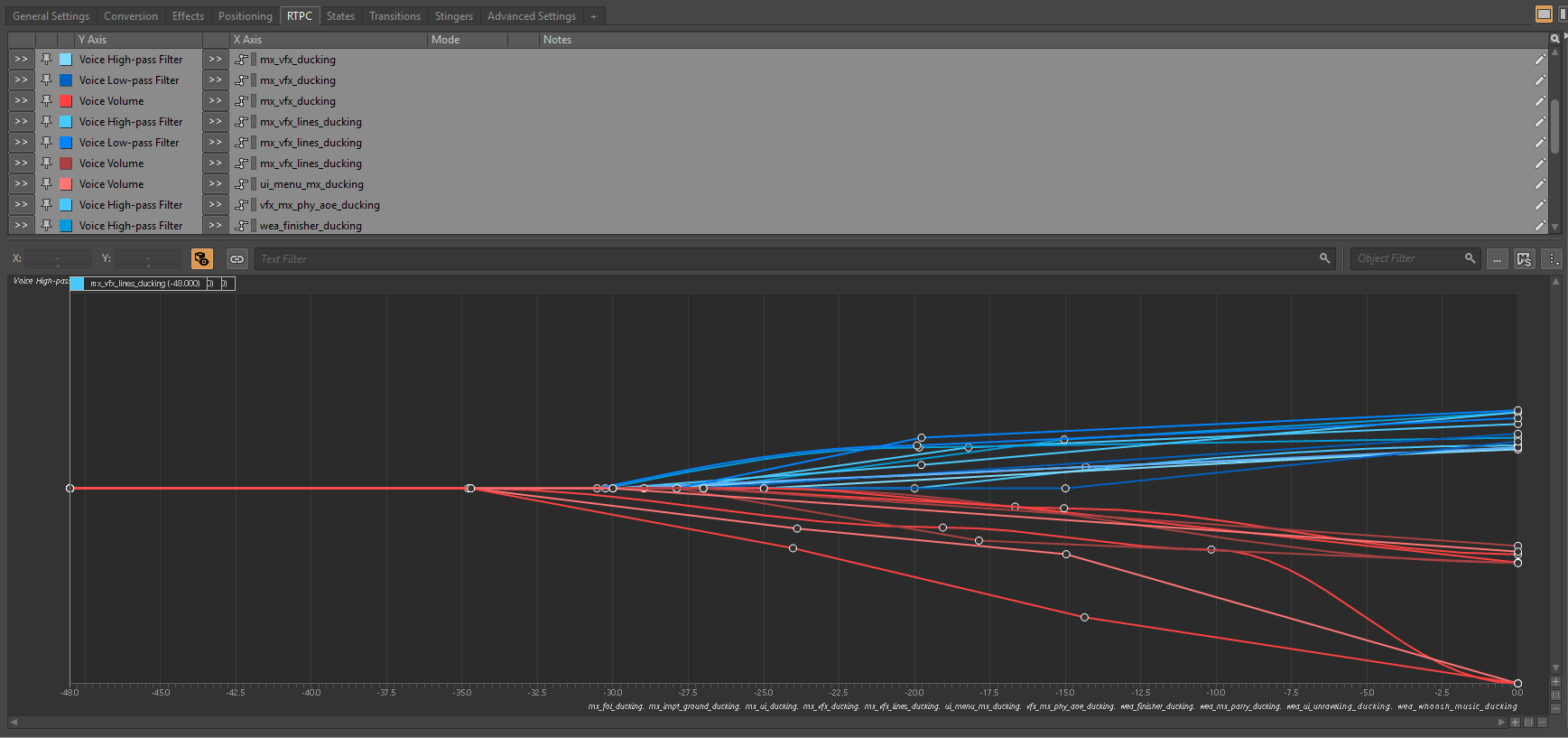

The combat pace needed a highly reactive system with defined rules to dynamically prioritize the sounds based on the context. We wanted the mix to mirror the moment-to-moment changes in the gameplay, making sure that the relevant feedback always came through, based on the player’s or the enemy’s actions. To achieve this, we built a detailed sidechain system with layered rules, allowing us to duck or highlight specific elements depending on what was happening on screen.

The two core audio elements that always had to be heard during combat were damage feedback and parries. Damage feedback was approached in a traditional way: it ducked most of the other elements to take the highest priority in the mix, although in a few special instances we had to create additional sets of rules to handle edge cases. Heavy ground impacts also needed to be clearly heard, since they are a core part of the heavy attacks of most weapons, but when a heavy attack landed on an enemy, the impact and the damage sound masked each other. After a few iterations, we settled on a compromise: a sidechain driven by the damage and applied only to the surface-material layer of the impacts, which kept the punch audible while carving out enough space for the layers of gore to shine. This approach worked in most cases but, as with the damage, it was not the only solution we adopted.
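The damage-driven compromise for heavy ground impacts can be reduced to a per-layer gain rule. The sketch below assumes a damage meter reading normalized to [0, 1] and a hypothetical maximum ducking depth; the punch layer is deliberately left untouched and only the material layer is ducked.

```python
# Hypothetical: a normalized damage meter level (0..1) ducks only the material layer.
MAX_MATERIAL_DUCK_DB = -9.0  # assumed depth, not a shipped value


def heavy_impact_layer_gains(damage_level):
    """Return per-layer gain offsets in dB for a heavy ground impact.

    The punch layer is never ducked, so the hit keeps its weight; the
    material layer is ducked in proportion to the damage meter.
    """
    level = min(max(damage_level, 0.0), 1.0)
    return {
        "punch": 0.0,
        "material": MAX_MATERIAL_DUCK_DB * level,
    }


print(heavy_impact_layer_gains(0.0))  # no damage playing: both layers untouched
print(heavy_impact_layer_gains(1.0))  # full damage: material layer fully ducked
```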

The “special ability charged” UI SFX was one of the trickier cases. Because the ability charges when the player lands a hit on an enemy, the cue often overlaps with damage feedback, so it was originally designed to sit mainly in the high-frequency range to stand out from the other sounds. However, even with a custom sidechain in place, the slash layers of the damage sounds consistently overpowered it. The solution in this case was to go back and redesign the asset around the low-mid frequencies, and to fine-tune a sidechain driving filters to make room in the high range.

The parry is one of the most recurring sounds in combat, and can be triggered multiple times in quick succession. A chain of successful parries sends enemies into a “stagger” state, which triggers an extra-diegetic UI SFX. During our gameplay sessions, we noticed that the parry often masked this UI cue. However, sidechaining the parry alone wasn’t an option, as we risked losing essential tonal content tied to combat feedback. After several iterations, we implemented a cross-sidechaining system: the UI SFX carved out space in the high range of the parry and slightly reduced its volume, while the parry did the opposite, dipping the UI’s low end and boosting its gain. This mutual adjustment ensured both elements remained clear and impactful in any combat scenario.
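The cross-sidechaining rule for the parry and the stagger UI cue can be written as two mutual adjustments. This Python sketch assumes normalized meter levels in [0, 1], and the band names and depths are hypothetical: the UI cue cuts the parry's high shelf and trims its volume, while the parry dips the UI cue's low shelf and boosts its gain.

```python
from dataclasses import dataclass


@dataclass
class Mix:
    """Per-sound mix offsets in dB (hypothetical parameters)."""
    volume_db: float = 0.0
    low_shelf_db: float = 0.0
    high_shelf_db: float = 0.0


def cross_sidechain(parry_level, stagger_ui_level):
    """Mutual adjustments between the parry and the stagger UI cue.

    Levels are normalized meter readings in [0, 1]; depths are assumed values.
    The UI cue carves the parry's high range and trims its volume slightly;
    the parry dips the UI cue's low end and boosts its gain.
    """
    p = min(max(parry_level, 0.0), 1.0)
    u = min(max(stagger_ui_level, 0.0), 1.0)
    parry = Mix(volume_db=-1.5 * u, high_shelf_db=-6.0 * u)
    ui = Mix(volume_db=2.0 * p, low_shelf_db=-6.0 * p)
    return parry, ui


parry, ui = cross_sidechain(1.0, 1.0)
print(parry)
print(ui)
```

Because each sound only touches the other's opposite band, neither loses the tonal content that carries its own feedback.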

Boss fights are some of the densest audio moments in the game, with music, enemy abilities, weapon impacts, and magic spells all triggering within tight windows. To keep the mix clear without compromising the music and the combat feedback, we implemented a dedicated sidechaining system for each boss music track.

We spent significant time during pre-mix and final mix sessions iterating on the RTPC curve shapes, aiming for the right balance between reactivity and transparency.

Each sidechain meter was tuned per category, with attack and release values adjusted to suit the behavior of the triggering sounds. The release values in particular had to be long enough to hold the necessary space, but short enough to avoid unnatural, noticeable ducking of the music.
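The attack/release trade-off can be illustrated with a one-pole envelope follower, a common simplified model of a sidechain meter. This is not Wwise's Meter implementation; the update rate, times, and threshold below are assumptions chosen only to show how a longer release keeps the duck engaged for more frames after the trigger stops.

```python
import math


class SidechainMeter:
    """One-pole envelope follower with separate attack and release times.

    A simplified model of a sidechain meter. Times are in seconds, levels in
    linear amplitude, and the meter is updated at a fixed control rate.
    """

    def __init__(self, attack_s, release_s, update_rate_hz=60.0):
        dt = 1.0 / update_rate_hz
        self.attack_coef = math.exp(-dt / attack_s)
        self.release_coef = math.exp(-dt / release_s)
        self.level = 0.0

    def process(self, input_level):
        # Rising input uses the attack coefficient, falling input the release one.
        coef = self.attack_coef if input_level > self.level else self.release_coef
        self.level = input_level + coef * (self.level - input_level)
        return self.level


def frames_to_fall_below(meter, threshold, max_frames=10_000):
    """Count updates until the envelope drops below threshold once the input stops."""
    for frame in range(max_frames):
        if meter.process(0.0) < threshold:
            return frame + 1
    return max_frames


fast = SidechainMeter(attack_s=0.01, release_s=0.15)
slow = SidechainMeter(attack_s=0.01, release_s=0.6)
for m in (fast, slow):
    for _ in range(30):  # half a second of a loud trigger at 60 Hz
        m.process(1.0)
print(frames_to_fall_below(fast, 0.1), frames_to_fall_below(slow, 0.1))
```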

The parametric EQ was a very useful tool during the studio mix session, when we could finally test all the elements of each boss fight together in a controlled environment. It let us surgically carve out space in specific frequency ranges of the music so that it would not mask important sounds like weapon whooshes, damage, or parries.

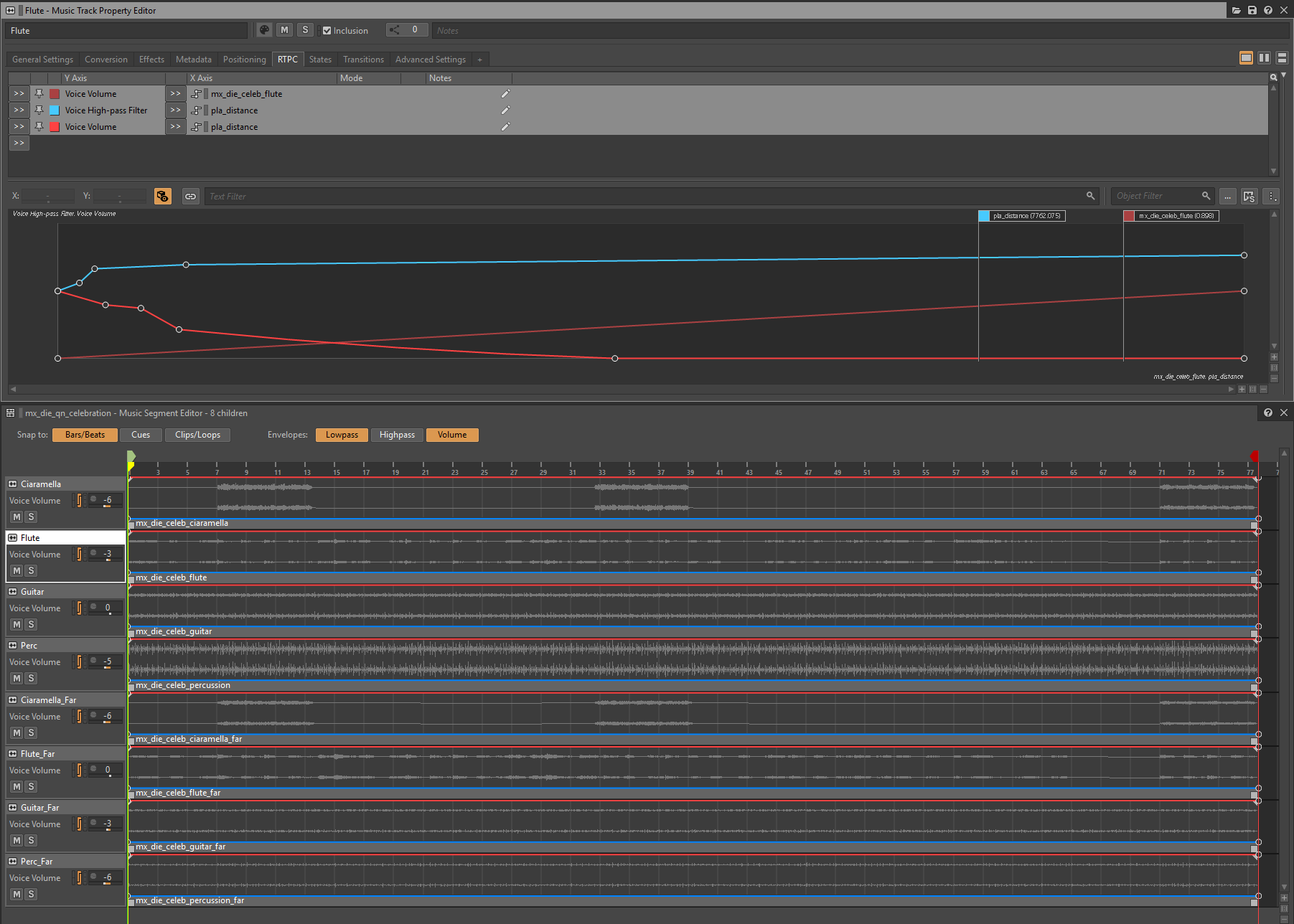

For each boss track, we used between 13 and 16 RTPCs to drive low-pass filters, high-pass filters, and volume adjustments. Special abilities triggered dedicated sidechains, while regular spells were routed through separate RTPC curves. This allowed us to fine-tune their impact on the mix depending on their intensity, frequency content, and gameplay context. Voiceovers posed a particular challenge, especially for bosses with deep, low-register voices that often competed with the music and other combat feedback.

To handle this, we duplicated critical RTPCs and applied them per phase of the boss fight music, allowing for phase-specific tuning. This gave us the flexibility to adjust sidechaining differently for bosses that changed form mid-fight, or for musical tracks that evolved across phases, such as switching instrumentation or introducing denser textures.
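Per-phase duplication of a critical RTPC amounts to selecting a different curve for each music phase. The sketch assumes a hypothetical voice-over sidechain that raises a high-pass filter on the boss music, carving low-end room for a deep voice, and gives the denser second phase a deeper cut; the cutoff values are illustrative, not shipped settings.

```python
# Hypothetical: each boss-music phase has its own copy of a critical RTPC curve.
# Curve maps a voice-over sidechain level (0..1) to a music high-pass cutoff in Hz.
PHASE_CURVES = {
    "phase_1": [(0.0, 20.0), (1.0, 120.0)],
    "phase_2": [(0.0, 20.0), (1.0, 250.0)],  # denser instrumentation, deeper cut
}


def lerp_curve(points, x):
    """Two-point linear curve, clamped to its end values."""
    (x0, y0), (x1, y1) = points
    t = min(max((x - x0) / (x1 - x0), 0.0), 1.0)
    return y0 + t * (y1 - y0)


def music_highpass_hz(phase, voice_level):
    """High-pass cutoff on the boss music, driven by the voice-over sidechain."""
    return lerp_curve(PHASE_CURVES[phase], voice_level)


print(music_highpass_hz("phase_1", 1.0))
print(music_highpass_hz("phase_2", 1.0))
```

Keeping the phases as separate curves means retuning one phase never changes how another behaves, which matters when a boss changes form mid-fight.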

Foley for larger enemies required special care. We wanted to preserve the weight and impact of their movements without letting them dominate the mix or bury the music. Our solution was to apply a gentle sidechain on the music bus, driven by the Boss Foley meter, and also to route key combat elements to duck the foley actor-mixers of large bosses. This created room while cleaning up excessive sub-lows, keeping the punch of the foley intact and preserving the enemy’s sense of scale and physicality.

Approach to the Listener

Since this is a third-person game where players often face fast attacks and navigate narrow spaces, we had to experiment with different listener positions. After several tests, we found a sweet spot between the character and the camera, slightly closer to the camera, while keeping the listener rotation aligned with the player character’s position.
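The listener placement described above can be expressed as a linear interpolation between the character and camera positions. The blend factor of 0.6 is an assumed value meaning "slightly closer to the camera", and orientation is left out of the sketch. In a Wwise integration, the resulting position would then be handed to the listener game object, for example via `AK::SoundEngine::SetPosition`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def lerp(self, other, t):
        """Point at fraction t of the way from self to other."""
        return Vec3(
            self.x + (other.x - self.x) * t,
            self.y + (other.y - self.y) * t,
            self.z + (other.z - self.z) * t,
        )


# Assumed blend factor: 0.0 = on the character, 1.0 = on the camera.
LISTENER_CAMERA_BIAS = 0.6


def listener_position(character_pos, camera_pos, bias=LISTENER_CAMERA_BIAS):
    """Place the listener between character and camera, slightly closer to the camera."""
    return character_pos.lerp(camera_pos, bias)


print(listener_position(Vec3(0.0, 1.0, 0.0), Vec3(0.0, 2.0, -5.0)))
```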

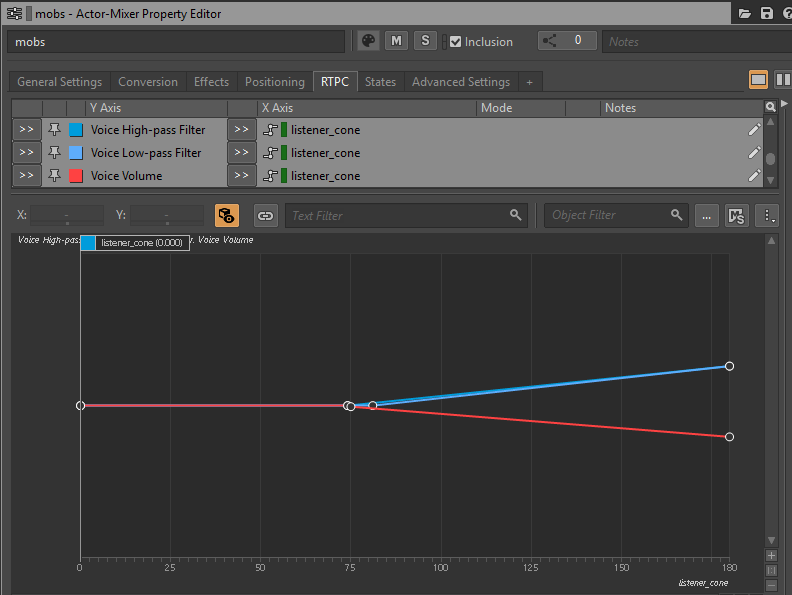

The game’s level design is also quite vertical, with areas like Quinta bustling with ambient activity, from environmental sounds to NPC chatter. Because the game is mixed in stereo, we had to work harder to convey 3D spatial information clearly. We started working with the built-in emitter cone attenuation, but found that combining it with additional RTPCs gave us better control, especially in moments where players receive multiple audio cues from different directions, such as enemy attacks mixed with dialogue or diegetic music.

We relied heavily on the listener cone and elevation cone, both part of Wwise’s built-in parameters. These were essential in improving the positional readability of enemies, NPC interactions, and diegetic music. To take this a step further, we also created a custom elevation cone not tied to the built-in parameter. This was applied to specific ambient emitters, allowing us to create a more dynamic spatial feeling for static elevated sources, helping the player perceive vertical distance and separation even in a stereo mix.
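A custom elevation cone for static emitters can be modeled as the vertical angle between listener and emitter, mapped through a cone shape to extra attenuation and low-pass. This sketch assumes a y-up coordinate system, and the inner/outer angles and depths are hypothetical; the low-pass amount uses a 0 to 100 scale purely for illustration.

```python
import math


def elevation_angle_deg(listener, emitter):
    """Angle above (+) or below (-) the listener's horizontal plane, in degrees.

    Positions are (x, y, z) tuples with y as the up axis (assumed convention).
    """
    dx = emitter[0] - listener[0]
    dy = emitter[1] - listener[1]
    dz = emitter[2] - listener[2]
    horizontal = math.hypot(dx, dz)
    return math.degrees(math.atan2(dy, horizontal))


def elevation_cone(angle_deg, inner_deg=15.0, outer_deg=60.0,
                   max_atten_db=-6.0, max_lowpass=40.0):
    """Return (volume offset dB, low-pass amount 0..100) for an elevation angle.

    Inside the inner cone nothing changes; past the outer cone the full
    attenuation and filtering apply; in between the effect ramps linearly.
    """
    a = abs(angle_deg)
    if a <= inner_deg:
        t = 0.0
    elif a >= outer_deg:
        t = 1.0
    else:
        t = (a - inner_deg) / (outer_deg - inner_deg)
    return max_atten_db * t, max_lowpass * t


angle = elevation_angle_deg((0.0, 0.0, 0.0), (10.0, 10.0, 0.0))
print(angle, elevation_cone(angle))
```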

Dynamic Mixing

During development, certain elements required a more tailored approach, in situations where a standard setup wasn’t enough to achieve the desired clarity. In these cases, we used a combination of custom RTPCs, switch containers, and attenuation settings to fine-tune the mix.

Ambience

In the city of Quinta, the ongoing celebration atmosphere was built through a layered system combining crowd walla, individual NPC dialogue and efforts, and diegetic music. Since players could reduce the number of NPCs by attacking or killing them, we designed each element to adapt dynamically to the evolving state of the environment.

The crowd walla was driven by a crowd_size RTPC, which adjusted the playback of different walla loops based on the number of NPCs still alive. This ensured that the density and liveliness of the background ambience reflected the player's actions in real time.
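A crowd_size-driven walla system can be sketched as an equal-power crossfade between adjacent loops. The three layer names and their crowd_size peaks are hypothetical; in Wwise such a crossfade would normally be authored in the project (for example with a blend container's crossfades on a game parameter), and this sketch only shows the underlying math.

```python
import math

# Hypothetical walla layers and the crowd_size value (NPCs alive) at which each peaks.
WALLA_LAYERS = [("walla_sparse", 0.0), ("walla_medium", 20.0), ("walla_dense", 40.0)]


def walla_gains(crowd_size):
    """Equal-power crossfade between adjacent walla loops for a crowd_size value.

    Returns linear gains per layer; at most two adjacent layers are non-zero,
    and their squared gains sum to 1 so perceived loudness stays steady.
    """
    points = [p for _, p in WALLA_LAYERS]
    x = min(max(crowd_size, points[0]), points[-1])
    gains = {name: 0.0 for name, _ in WALLA_LAYERS}
    for i in range(len(points) - 1):
        lo, hi = points[i], points[i + 1]
        if lo <= x <= hi:
            t = (x - lo) / (hi - lo)
            gains[WALLA_LAYERS[i][0]] = math.cos(t * math.pi / 2)
            gains[WALLA_LAYERS[i + 1][0]] = math.sin(t * math.pi / 2)
            break
    return gains


print(walla_gains(35))  # a busy square
print(walla_gains(5))   # after the player has thinned the crowd
```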

For diegetic music, we built a system around four main musicians located in the city’s central square. Each musician had both a "near" and a "far" instrument stem, with a player_distance RTPC crossfading between them. Additionally, a musician_death RTPC was tied to each performer, muting their respective stem the moment the corresponding NPC was killed. Once all the attenuations were fine-tuned, the result was an environment that felt alive and reactive, changing in mood and fullness as players interacted with the city’s population.
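The musician system can be sketched per performer: player_distance crossfades the near and far stems, and the musician_death value mutes both. The distance breakpoints and the musician names are hypothetical, and overall distance attenuation is left out to keep the example focused on the crossfade and the mute.

```python
import math

# Hypothetical breakpoints in game units for one musician's near/far crossfade.
NEAR_FULL = 10.0  # at or below this distance, only the near stem plays
FAR_FULL = 40.0   # at or above this distance, only the far stem plays


def musician_stem_gains(player_distance, musician_dead):
    """Return (near_gain, far_gain) as linear gains for one musician."""
    if musician_dead:  # musician_death at its "dead" value: mute both stems
        return 0.0, 0.0
    t = (player_distance - NEAR_FULL) / (FAR_FULL - NEAR_FULL)
    t = min(max(t, 0.0), 1.0)
    return math.cos(t * math.pi / 2), math.sin(t * math.pi / 2)


# Hypothetical square: distance from the player to each musician.
musicians = {"musician_1": 12.0, "musician_2": 35.0, "musician_3": 5.0, "musician_4": 50.0}
killed = {"musician_2"}
for name, dist in musicians.items():
    near, far = musician_stem_gains(dist, name in killed)
    print(f"{name}: near={near:.2f} far={far:.2f}")
```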

Magic

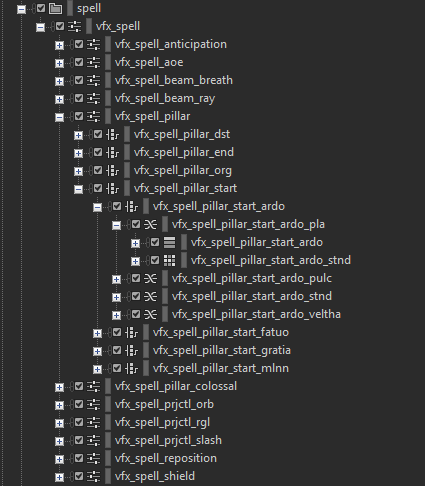

We had to carefully balance spells that differed in intensity, duration, and size. Early on, we established a two-tier classification to manage them more effectively:

- Light Spells: One-shot effects with short tails, such as projectile origins or spell impacts.

- Heavy Spells: Longer, more invasive sounds such as beams and pillars.

This classification allowed us to assign separate RTPCs to each spell category and to set different release values on their meters.
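The two-tier classification can be sketched as a table of per-tier meter settings. All values are assumptions: light one-shots get a short release so the duck recovers quickly, while heavy, sustained spells get a slower but shallower setting. The recovery time follows from a one-pole release, where falling from full scale to a threshold takes release time multiplied by ln(1/threshold).

```python
import math
from dataclasses import dataclass
from enum import Enum


class SpellTier(Enum):
    LIGHT = "light"  # one-shots with short tails
    HEAVY = "heavy"  # long, invasive sounds such as beams and pillars


@dataclass(frozen=True)
class MeterSettings:
    attack_s: float
    release_s: float
    max_duck_db: float


# Assumed per-tier meter settings; each tier feeds its own sidechain RTPC.
TIER_METERS = {
    SpellTier.LIGHT: MeterSettings(attack_s=0.005, release_s=0.12, max_duck_db=-4.0),
    SpellTier.HEAVY: MeterSettings(attack_s=0.05, release_s=0.5, max_duck_db=-2.5),
}


def recovery_time_s(settings, threshold=0.1):
    """Time for a one-pole release to fall from full scale to `threshold`."""
    return -settings.release_s * math.log(threshold)


for tier, settings in TIER_METERS.items():
    print(tier.value, round(recovery_time_s(settings), 3))
```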

Some spells were shared between enemies and the player, with subtle differences in behavior. Given the number of enemies and spells in the game, we wanted to avoid multiple custom implementations, so we relied on a nested switch container system that triggered the correct sounds based on which character was casting the spell. This setup allowed us to treat the player, enemies, and bosses as distinct categories, applying custom attenuations and filters as needed, which greatly simplified the final mix.
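The nested switch idea can be sketched as a two-level lookup: spell first, then caster category. The spell, sound, and attenuation names are hypothetical, and the fallback caster stands in for a switch container's default switch. A single shared event can then resolve to the right sound and attenuation for the player, an enemy, or a boss.

```python
# Hypothetical nested switch: Spell -> Caster category -> (sound, attenuation preset).
SPELL_SWITCH = {
    "fire_bolt": {
        "player": ("Spell_FireBolt_Player", "Att_Player_Spell"),
        "enemy": ("Spell_FireBolt_Enemy", "Att_Enemy_Spell"),
        "boss": ("Spell_FireBolt_Boss", "Att_Boss_Spell"),
    },
}
DEFAULT_CASTER = "enemy"  # assumed default switch when the caster is unknown


def resolve_spell(spell, caster):
    """Return (sound name, attenuation preset) for a spell cast by `caster`."""
    by_caster = SPELL_SWITCH[spell]
    return by_caster.get(caster, by_caster[DEFAULT_CASTER])


print(resolve_spell("fire_bolt", "player"))
print(resolve_spell("fire_bolt", "summon"))  # unknown caster -> default switch
```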

For spells that could be triggered simultaneously by multiple enemies, we added a custom RTPC called Spell_Mix. This parameter tracked the number of active instances of a specific spell and dynamically adjusted EQ filters in real time. It helped create enough space and prevent buildup in the mix, keeping it clean even during high-intensity fights.
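A Spell_Mix-style parameter can be sketched as a per-spell instance counter that drives an EQ cut. The mapping is an assumption for illustration: the cut deepens by 3 dB each time the number of copies doubles, roughly offsetting the power increase of stacking uncorrelated copies, and it is capped so a crowd of casters cannot hollow the spell out completely.

```python
import math
from collections import Counter


class SpellMixTracker:
    """Counts active instances per spell and derives an EQ cut from the count.

    Hypothetical model of a Spell_Mix-style parameter: one instance plays
    untouched; each doubling of simultaneous copies deepens the cut on the
    spell's buildup band, down to a floor. Depths are assumed values.
    """

    def __init__(self, cut_per_doubling_db=-3.0, max_cut_db=-9.0):
        self.active = Counter()
        self.cut_per_doubling_db = cut_per_doubling_db
        self.max_cut_db = max_cut_db

    def start(self, spell):
        self.active[spell] += 1

    def stop(self, spell):
        if self.active[spell] > 0:
            self.active[spell] -= 1

    def eq_cut_db(self, spell):
        """EQ band gain for `spell`: 0 dB for one instance, deeper as copies stack."""
        n = self.active[spell]
        if n <= 1:
            return 0.0
        return max(self.cut_per_doubling_db * math.log2(n), self.max_cut_db)


mix = SpellMixTracker()
for _ in range(4):
    mix.start("fire_bolt")
print(mix.eq_cut_db("fire_bolt"))
```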

Weapon

Weapon audio events were classified by movement type: swing, heavy swing, settle, windup, long windup, and thrust. We followed the game’s eight macro weapon categories as a base, but for boss weapons and special cases, we created additional sub-categories to handle unique materials and shapes.

Since most weapons could be used by both the player and NPCs, we built a switch container hierarchy to split them accordingly. This setup allowed us to apply different attenuations and spread curves, particularly for the player’s weapons, to preserve the stereo feel from the players’ point of view.

As with other core gameplay elements, weapon whooshes drove sidechains on the music and ambience actor-mixers, ensuring they always felt powerful. Among combat elements, damage feedback had a higher priority than whooshes. To reflect this, damage sounds passed through a dedicated meter that sidechained the whooshes. Fast attack and release times on the damage meter kept the whooshes aggressive while letting the punch of the damage sounds cut through the mix, even in the most hectic moments of combat.
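The priority chain can be sketched as two levels of ducking: the damage meter ducks whooshes, and the whoosh meter ducks music and ambience. The depths are hypothetical, and the sketch assumes the whoosh meter measures the whoosh after the damage duck has been applied, so a hit landing during a swing also eases the whoosh's pull on the music.

```python
# Hypothetical two-level priority chain:
#   damage meter -> ducks weapon whooshes
#   whoosh meter -> ducks music and ambience
# Levels are normalized meter readings in [0, 1]; depths are assumed values.
DUCK_DEPTH_DB = {
    ("damage", "whoosh"): -4.0,
    ("whoosh", "music"): -3.0,
    ("whoosh", "ambience"): -5.0,
}


def clamp01(x):
    return min(max(x, 0.0), 1.0)


def chain_offsets(damage_level, whoosh_level):
    """Return volume offsets in dB for whooshes, music, and ambience.

    Assumes the whoosh meter reads the whoosh after it has been ducked by
    damage, so the metered whoosh level is scaled by that duck.
    """
    whoosh_db = DUCK_DEPTH_DB[("damage", "whoosh")] * clamp01(damage_level)
    # Convert the dB offset to a linear factor and scale the metered whoosh level.
    effective_whoosh = clamp01(whoosh_level) * 10 ** (whoosh_db / 20)
    return {
        "whoosh": whoosh_db,
        "music": DUCK_DEPTH_DB[("whoosh", "music")] * effective_whoosh,
        "ambience": DUCK_DEPTH_DB[("whoosh", "ambience")] * effective_whoosh,
    }


print(chain_offsets(0.0, 1.0))  # swing only
print(chain_offsets(1.0, 1.0))  # swing that lands a hit
```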

Weapons could also be infused with magic elements, which appeared visually as trails during swings and thrusts. To reflect this in audio, we placed swing and thrust movements inside a blend container, paired with a switch container handling the elemental layers. This allowed us to layer the base whoosh with the appropriate magical effect.

However, this setup introduced mixing issues. The elemental trails were long enough to cause excessive ducking of the music and ambience. To resolve this, we routed them through a separate bus with a dedicated meter, which controlled less aggressive sidechain RTPCs.
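The elemental-trail setup can be sketched in two parts: a blend-container-style layering of the base whoosh with an optional elemental trail, and the music duck produced by the two meters. All names and depths are hypothetical. When the trail shares the whoosh meter, its long tail keeps driving the full whoosh depth; on its own bus it drives a gentler curve, with an assumed floor so the two contributions cannot stack too deep.

```python
# Hypothetical names and depths for illustration only.
WHOOSH_DEPTH_DB = -5.0    # depth driven by the base whoosh meter
TRAIL_DEPTH_DB = -1.5     # gentler depth for the elemental trail meter
MAX_TOTAL_DUCK_DB = -6.0  # floor so the two contributions cannot stack too deep


def swing_layers(movement, element=None):
    """Base whoosh plus an optional elemental trail, each with its own bus."""
    layers = [(f"Whoosh_{movement}", "Weapon_Whoosh")]
    if element is not None:
        layers.append((f"Trail_{element}", "Weapon_Elemental_Trail"))
    return layers


def music_duck_db(whoosh_level, trail_level, separate_trail_bus=True):
    """Music volume offset from whoosh and trail meter levels in [0, 1]."""
    w = min(max(whoosh_level, 0.0), 1.0)
    t = min(max(trail_level, 0.0), 1.0)
    if separate_trail_bus:
        return max(WHOOSH_DEPTH_DB * w + TRAIL_DEPTH_DB * t, MAX_TOTAL_DUCK_DB)
    # Shared meter (simplified): the louder signal sets the reading at full depth.
    return WHOOSH_DEPTH_DB * max(w, t)


print(swing_layers("swing", "fire"))
print(music_duck_db(0.0, 1.0), music_duck_db(0.0, 1.0, separate_trail_bus=False))
```

The second print compares the duck during the trail's tail (whoosh finished, trail still sounding) with and without the separate bus.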

Conclusion

Enotria: The Last Song was a true testing ground for combat mixing. Managing so many audio events, all capable of triggering rapidly and simultaneously, was an inspiring challenge. It pushed us to think systemically when approaching the mix. The answer for us was to route and prioritize smartly, building a solid foundation in Wwise that could handle the heavy lifting. This gave us the freedom to concentrate on the individual cases that needed special attention.

A big thank you goes to Leonardo Mazzella and Daniele Oliviero from the audio and code team for all their help and support, and to the whole development team with whom we shared this journey. A special thank you to the Audiokinetic support staff for their invaluable assistance throughout the project. Thank you for taking the time to read this; we hope this deep dive offered some useful insights into our process!
