Introduction

In game audio development, we often face challenges like these. You've just set up a new Wwise project and are ready to test the boss fight in real time, but the existing game management (GM) commands do not work as expected.

Is your account level too low to directly access the boss fight in this specific level? That's okay, let's start over from the beginning.

Did you defeat the boss so quickly that its skills were never fully revealed? No worries, just ask the game designer to adjust the values.

Finally, you find that a particular ultimate move is hard to reproduce due to its complexity. However, it takes time for game programmers to update GM commands, and you can't wait...

In the end, you can only rely on your vague impression of the fight to fix issues in the project.

There are even more headaches: the server gives you only one chance to run a large-scale test, you can run only one or two tests on multiplayer fight scenes because there aren't enough people, and so on...

Can we record our gameplay like a movie and save it as a Wwise soundtrack so that it can be played back whenever we want?

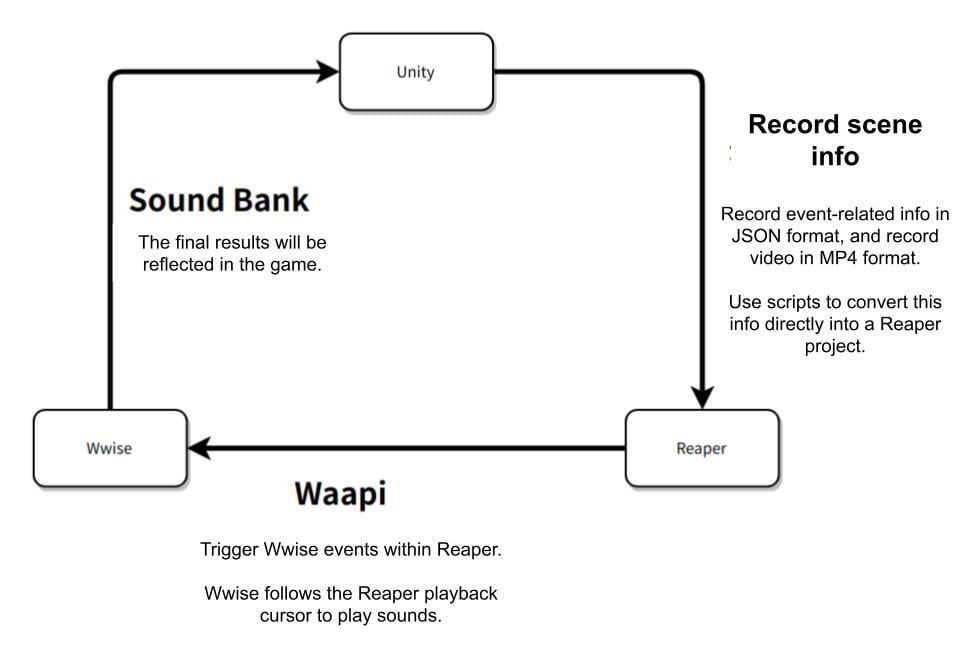

Sure. We've developed the URW (Unity-Reaper-Wwise) playback toolchain. You simply record a full gameplay session and generate a Reaper project. Then, you can trigger Wwise events from within Reaper, and debug and fix issues whenever you want.

The generated Reaper project is your exclusive Wwise soundtrack. In Reaper, you can review your soundtrack as needed. This makes Wwise a mixing console for Reaper, allowing sound designers to refine the audio performance as many times as they like.

In addition, recording your gameplay as a soundtrack (Reaper project) means that designers can freely adjust the playback position in the project, navigate to a specific point in time, and refine a particular frame or a small part of the gameplay.

Figure 1 - Toolchain Flowchart

Video 1 - Unity-Reaper-Wwise Toolchain Demo

Step 1: Record Audio Info in Unity

Since we are playing back Wwise sounds, we first need to capture the sound data to be played back. But what data format should we use, and how should we capture it?

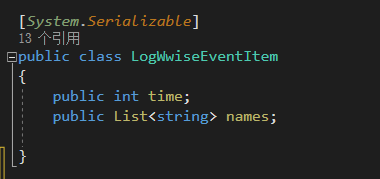

About Data Format

This is just a preliminary version, and the data format is quite simple. In short, it records which sound events were triggered and at what time. See the figure below.

Figure 2 - Data Format


About Data Capture

We evaluated two data capture strategies at an earlier stage:

1. Embed capture logic at runtime in Unity.

The ideal solution would work like this: all sound playback in the project goes through a unified entry point, the capture logic is embedded at that entry point, and every sound that passes through is captured and stored.

However, different projects have different logic, and some have no unified playback entry point. Our primary requirement for this workflow is versatility: it should work with any project without modifying the project's logic, and it should also work in Editor mode.

Finally, there is one unconfirmed concern at the implementation level. Because timing can fluctuate while the game is running, sounds that should be triggered at the same moment may be captured with slightly different timestamps. Given all this, we chose the following data capture strategy instead.

2. WAAPI

There is a subscription topic in WAAPI: ak.wwise.core.profiler.captureLog.itemAdded.

When new log entries are added to the Wwise capture log, this subscription executes the callback function you defined. My approach is to store the info of each new entry at this point and then, after recording is complete, use another function to process the list of entries and generate the data needed for playback.
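The store-then-process approach can be sketched as a small recorder whose callback is handed to the subscription. This is only an illustration: the class name EventRecorder is hypothetical, and the field names (type, time, objectName) reflect my understanding of the captureLog.itemAdded payload, so check them against the WAAPI reference for your Wwise version. The log items below are simulated, so no Wwise connection is needed to run it.

```javascript
// Hypothetical recorder: stores event-type log entries while recording is on.
class EventRecorder {
  constructor() {
    this.entries = [];
    this.recording = false;
  }

  start() {
    this.entries = [];
    this.recording = true;
  }

  stop() {
    this.recording = false;
    return this.entries.slice(); // return a copy for later processing
  }

  // Intended to be passed as the subscription callback.
  // Assumed payload fields: type, time (ms), objectName.
  onLogItem(item) {
    if (!this.recording) return;
    if (item.type !== "Event") return; // keep only event triggers
    this.entries.push({ eventName: item.objectName, timeMs: item.time });
  }
}

// Simulated log items standing in for what Wwise would publish.
const recorder = new EventRecorder();
recorder.start();
recorder.onLogItem({ type: "Event", time: 3600120, objectName: "Play_Boss_Roar" });
recorder.onLogItem({ type: "Message", time: 3600125, objectName: "" });
recorder.onLogItem({ type: "Event", time: 3600120, objectName: "Play_Boss_Step" });
const captured = recorder.stop();
console.log(captured.length); // 2
```

Keeping the callback this small means the heavier processing can wait until recording has stopped.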

It should be noted that:

- Wwise log entries are timestamped in milliseconds, and multiple events can be triggered at the same timestamp. Both storage and playback need to handle this.

- A Wwise entry's timestamp is the time elapsed since the sound engine was initialized, so the first recorded entry may have a very large timestamp. (If you start recording 1 hour after initializing the sound engine, the first timestamp will be >= 60*60*1000 ms.) Therefore, whether for immediate playback after recording or for playing back from the beginning of the Reaper project generated later, all timestamps should be shifted earlier by the same offset. The approach I chose is to start everything from 0 seconds or 0.5 seconds.
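The two notes above can be sketched as a single post-processing step: subtract the earliest timestamp from every entry, add an optional start offset, and group events that share a timestamp. The function name normalizeAndGroup and the record shape ({ eventName, timeMs }) are assumptions made for illustration, not the tool's actual data format.

```javascript
// Shift all timestamps so the first entry lands at startOffsetMs,
// and group events that were triggered at the same millisecond.
function normalizeAndGroup(entries, startOffsetMs = 0) {
  if (entries.length === 0) return [];

  // Find the earliest timestamp with a loop (safe for large recordings).
  let firstMs = entries[0].timeMs;
  for (const entry of entries) {
    if (entry.timeMs < firstMs) firstMs = entry.timeMs;
  }

  const groups = new Map(); // shifted timeMs -> list of event names
  for (const entry of entries) {
    const shifted = entry.timeMs - firstMs + startOffsetMs;
    if (!groups.has(shifted)) groups.set(shifted, []);
    groups.get(shifted).push(entry.eventName);
  }

  return [...groups.entries()]
    .sort((a, b) => a[0] - b[0])
    .map(([timeMs, eventNames]) => ({ timeMs, eventNames }));
}

// Recording started one hour after the sound engine was initialized.
const timeline = normalizeAndGroup(
  [
    { eventName: "Play_A", timeMs: 3600000 },
    { eventName: "Play_B", timeMs: 3600000 },
    { eventName: "Play_C", timeMs: 3600250 },
  ],
  500 // start at 0.5 seconds
);
console.log(JSON.stringify(timeline));
// [{"timeMs":500,"eventNames":["Play_A","Play_B"]},{"timeMs":750,"eventNames":["Play_C"]}]
```

Grouping by timestamp keeps simultaneous events together, so playback can post them in the same pass.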

The rest involves writing the playback time control logic, user interface logic, and save/load logic. However, they will not be covered in detail here.

Step 2: Json to Rpp

In the process of generating rpp files (Reaper projects), I chose to write the script using Node.js. For technical sound designers, I believe that JavaScript based on Node.js is a relatively lightweight option that allows you to build tools quickly, focusing more on your sound design.

First, Node.js comes with npm, a package manager that handles the dependency packages required by scripts, which makes script development relatively smooth. For example, FFmpeg is a very powerful audio and video processing tool. It supports a wide range of audio and video formats and provides rich features and parameter options. It's also convenient to use from Node.js through the fluent-ffmpeg package, which you only need to install via npm:

npm install fluent-ffmpeg

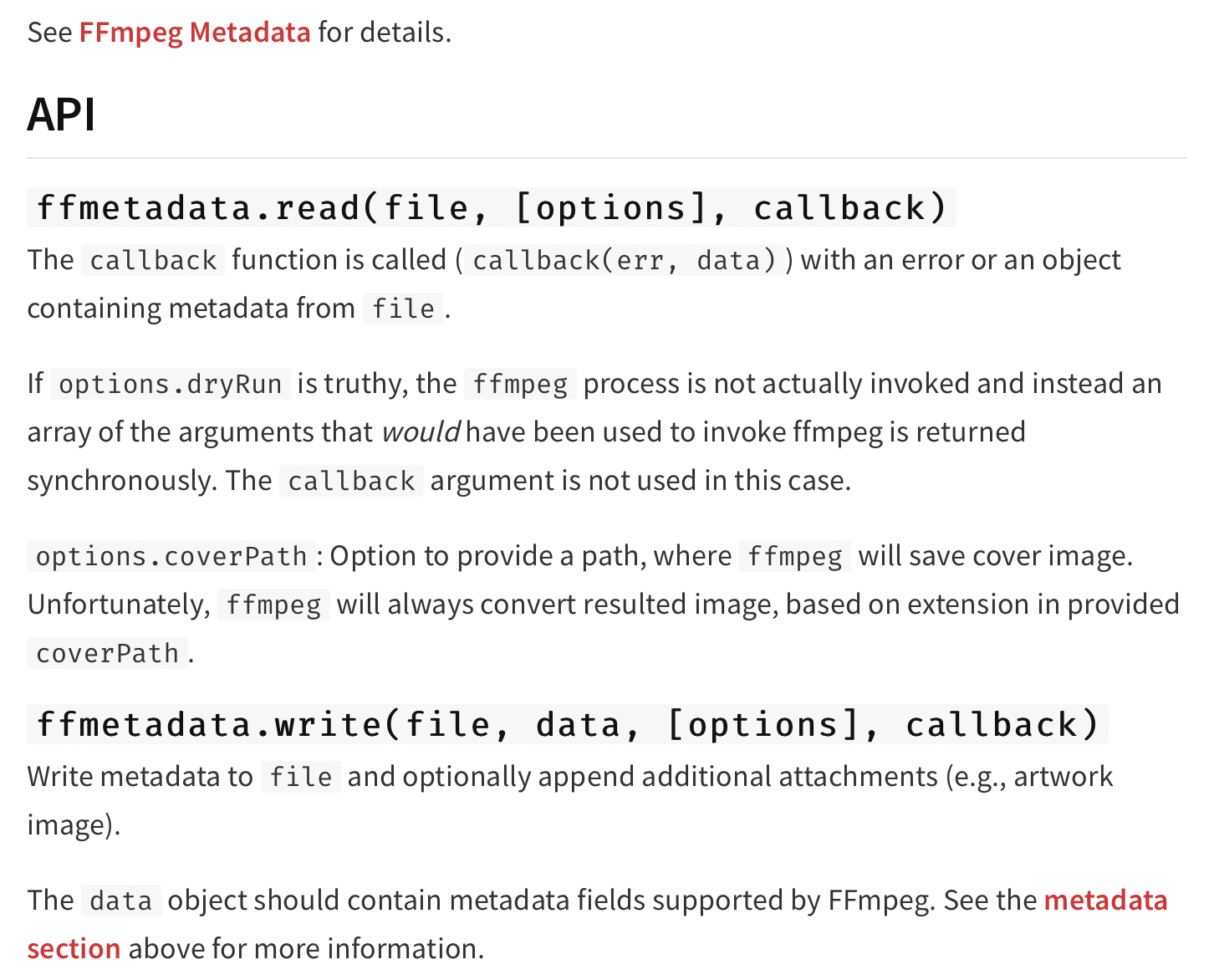

After installation, you can import the fluent-ffmpeg module into your Node.js code, and use its APIs to process audio and video. In the tool I wrote for the audio department to tag metadata, I also called the relevant APIs in FFmpeg:

Figure 3 - Example of FFmpeg Metadata API in npm

Node.js is also well suited to I/O-intensive tasks. Its asynchronous programming model lets scripts perform I/O operations without blocking the main thread, so they can read and write large amounts of audio data while the application stays responsive.

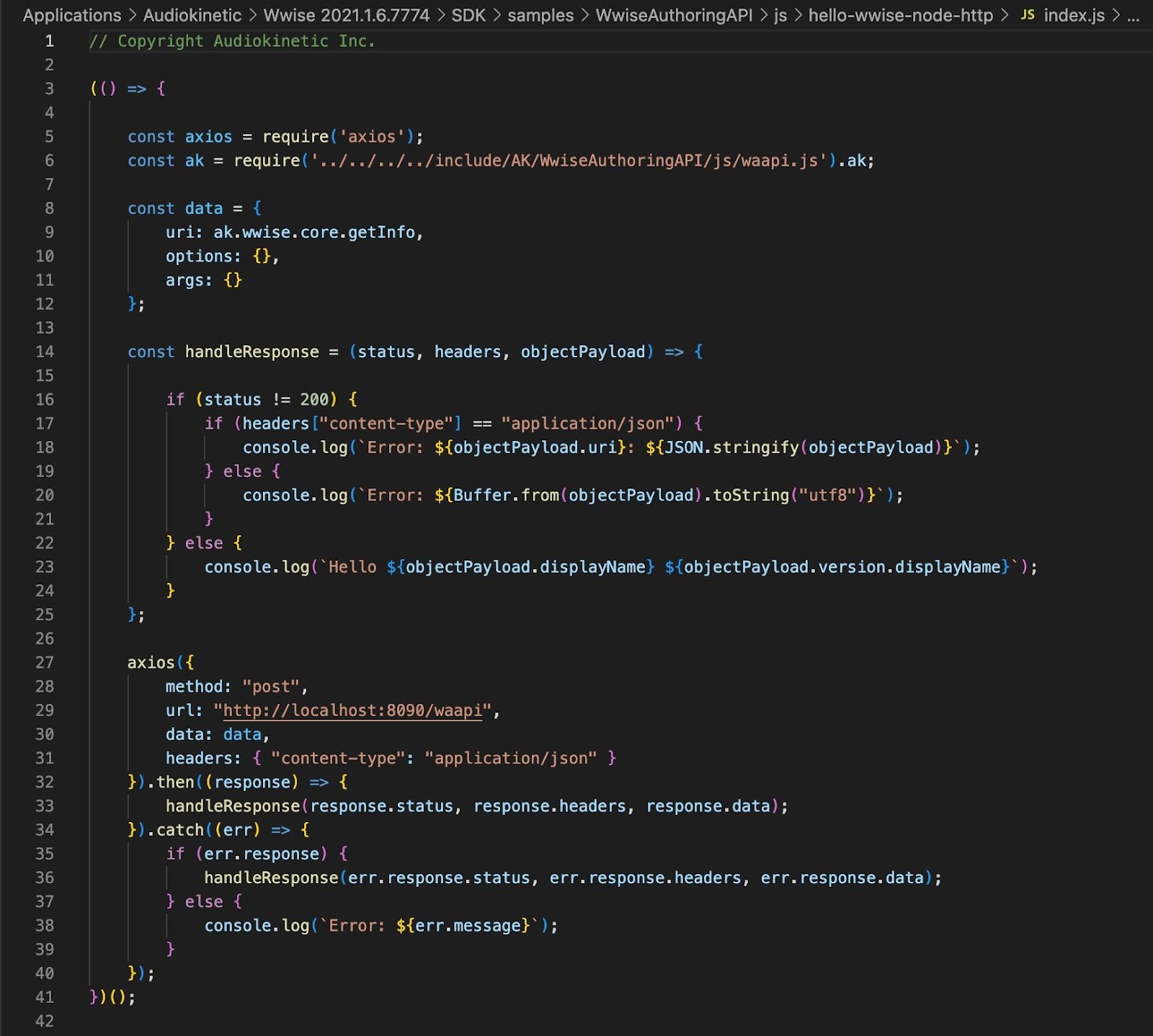

Even more exciting, WAAPI can be used from JavaScript, allowing you to interact with Wwise from web pages and desktop applications. This can also inspire technical sound designers as they build their workflows. Through WAAPI, JavaScript can communicate with Wwise easily, enabling real-time control, management, and automated processing of audio assets. For example, you can use WAAPI to modify sound events dynamically, create and manage sound objects, and more. This opens up more possibilities for game audio design.

Figure 4 - The Official "Hello Wwise" WAAPI Example Written in JavaScript

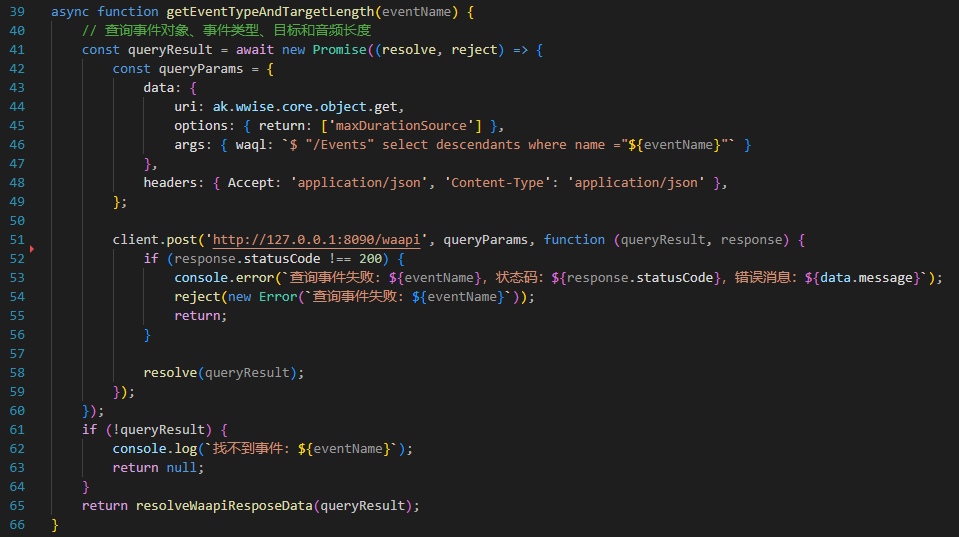

In the current playback tool, I use WAAPI to obtain the maximum duration of the audio sources. This is converted to the length of the items in the rpp file. The URI I used is ak.wwise.core.object.get, with 'maxDurationSource' requested in "options". I sent an HTTP request containing a WAQL query to Wwise to retrieve the information.

Figure 5 - Query Event Info Using WAAPI

(Code comment in the figure: Query event objects, event types, targets, and audio length)
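A query of this kind might look like the sketch below. It is not the code from the figure. The endpoint (default HTTP port 8090, path /waapi), the WAQL string passed in the usage example, and the helper names are assumptions; the shape of maxDurationSource (an object with trimmedDuration in seconds) is my reading of the WAAPI reference and should be verified for your Wwise version. The network call requires Node.js 18 or later for the built-in fetch and is only defined here, not executed.

```javascript
// Assumption: Wwise's WAAPI HTTP endpoint on the default port.
const WAAPI_HTTP_URL = "http://127.0.0.1:8090/waapi";

// Build the request body for ak.wwise.core.object.get with a WAQL query.
function buildDurationQuery(waql) {
  return {
    uri: "ak.wwise.core.object.get",
    args: { waql },
    options: { return: ["id", "name", "maxDurationSource"] },
  };
}

// Convert one returned object to an item length in seconds.
// Falls back to a default when no duration is reported.
function itemLengthSeconds(obj, fallbackSeconds = 1.0) {
  const source = obj.maxDurationSource;
  if (source && typeof source.trimmedDuration === "number") {
    return source.trimmedDuration;
  }
  return fallbackSeconds;
}

// Send the query over HTTP (needs a running Wwise with WAAPI enabled).
async function queryDurations(waql) {
  const response = await fetch(WAAPI_HTTP_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildDurationQuery(waql)),
  });
  const result = await response.json();
  return result.return.map((obj) => ({
    name: obj.name,
    lengthSec: itemLengthSeconds(obj),
  }));
}

// Offline usage: inspect the request and convert a sample result object.
const request = buildDurationQuery("$ from type Sound");
console.log(request.options.return.includes("maxDurationSource")); // true
console.log(itemLengthSeconds({ maxDurationSource: { trimmedDuration: 2.5 } })); // 2.5
```

Separating the request builder from the network call makes the query easy to inspect without Wwise running.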

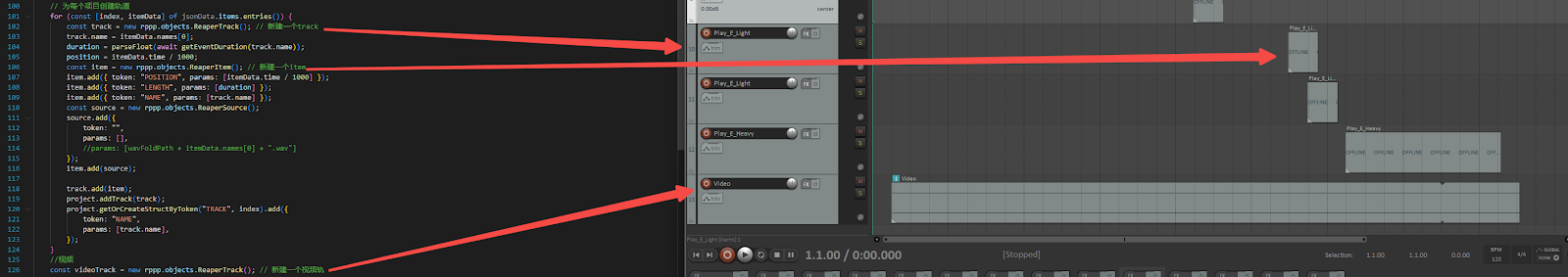

So far, using the JSON and MP4 files recorded in Unity with the info retrieved from Wwise, I’ve been able to generate rpp files (Reaper projects) in this workflow.

Figure 6 - Code Comments and rpp Project
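As a rough idea of what the generation step involves, the sketch below writes one track with one empty item per recorded event, using the item position from the recording and the item length from Wwise. It is not the script shown in the figure. The rpp tokens used (REAPER_PROJECT, TRACK, ITEM, NAME, POSITION, LENGTH) follow the plain-text project format as I understand it, the header values are placeholders, and this minimal file has not been verified against a specific Reaper version. The function name buildRpp and the item fields are hypothetical.

```javascript
// Build a minimal rpp document as a string.
// Assumption: names contain no double quotes, so no escaping is done.
function buildRpp(items, trackName = "Wwise Events") {
  const lines = [
    '<REAPER_PROJECT 0.1 "7.0" 0', // placeholder header values
    "  <TRACK",
    `    NAME "${trackName}"`,
  ];

  for (const item of items) {
    lines.push(
      "    <ITEM",
      `      POSITION ${item.positionSec}`,
      `      LENGTH ${item.lengthSec}`,
      `      NAME "${item.name}"`,
      "    >"
    );
  }

  lines.push("  >", ">");
  return lines.join("\n") + "\n";
}

const rpp = buildRpp([
  { name: "Play_A", positionSec: 0.5, lengthSec: 1.2 },
  { name: "Play_B", positionSec: 0.75, lengthSec: 2 },
]);
console.log(rpp);
// To save it: require("fs").writeFileSync("playback.rpp", rpp);
```

Naming each item after its Wwise event means the item name alone is enough to decide which event to post during playback.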

Step 3: Reaper to Wwise (ReaWwise Caster)

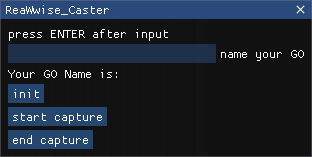

Figure 7 - ReaWwise_Caster UI

Overview

The original purpose of implementing the playback workflow in Reaper was to provide sound designers with an environment where they could debug Wwise projects in sync with video in a DAW. This approach is more in line with the workflow of DAW users. Also, it aligns with the playback logic used during video post-production.

I've designed the script with a small UI that essentially acts as a miniature remote control. You just turn the switch on, put the remote control aside, and forget about it (of course, if something goes wrong, you'll have to step in).

To achieve this design goal, the tool needs to implement the following features:

- Capture items via event trigger based on the playback cursor’s position

- Connect to WAAPI and invoke APIs via a channel

- Play video and post events with low latency

- Enable or disable the capture feature freely without affecting Wwise communication

Frontend Extensions

ReaWwise is a Reaper extension developed by Audiokinetic that lets users transfer SFX and music assets from Reaper into Wwise for use in game. It also provides APIs for interaction between Reaper and Wwise, so developers can move SFX assets from Reaper into Wwise projects quickly and efficiently.


Figure 8 - Frontend Extension 1


Figure 9 - Frontend Extension 2

Implementation of WAAPI calls in Reaper

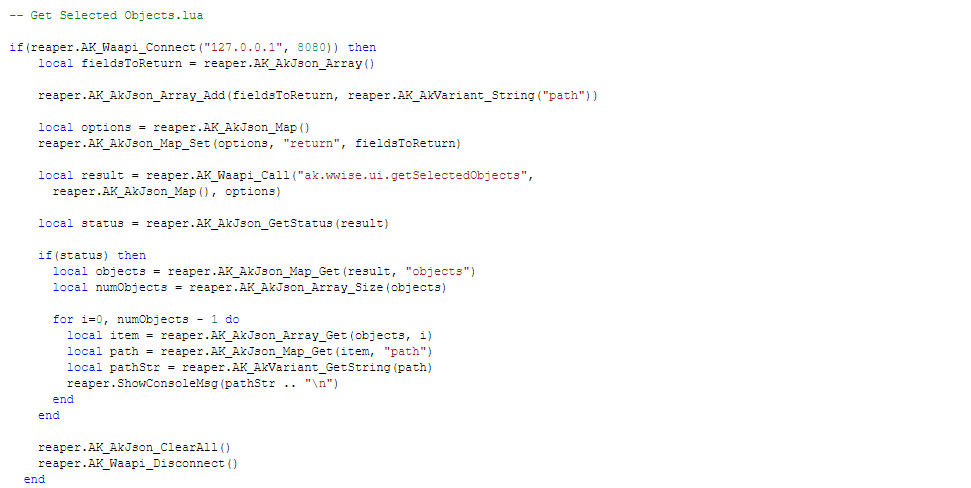

In January 2024, Audiokinetic officially expanded the functionality of ReaWwise, providing the ability to call WAAPI APIs in ReaScript:

Figure 10 - The Official Example Script Written With ReaScript

Related APIs:


With the ReaScript APIs, you can collect the relevant info contained in items and tracks in Reaper, convert it to the required format with reaper.AK_AkJson_Map_Set(), and finally call ak.soundengine.postEvent.

Capture items via event trigger based on the playback cursor’s position

Since the ReaScript APIs do not provide a trigger feature for capturing items, you will have to write your own. The basic idea is to collect the starting position data of each track item, refresh the playback cursor position coordinates in real time, and post an event when it’s determined that the position overlaps with the starting position.

However, two problems arose when actually writing the code: the interval of the refresh function (a "while ... do" loop crashes instantly) and the accuracy of the judgment point.

After some effort, the problem was solved.

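- ReaScript provides a deferred-call function, reaper.defer(), which runs the given function again on the next refresh cycle without blocking Reaper. This allows smooth and worry-free continuous polling.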

- To ensure the accuracy of sound playback, the starting position is set to 1 ms to balance latency and accuracy.
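The trigger logic itself is independent of ReaScript, so it can be illustrated in JavaScript, the same language as the Node.js scripts above. Note that this sketch uses a different judgment rule from the 1 ms setting described above: it fires every item whose start lies between the previous and the current cursor position, which is one way to avoid missing items when the refresh interval is longer than the judgment window. The names createTrigger, tick, and maxStepSec are hypothetical. In Reaper, tick would be called from the deferred function with the current play position, and postEvent would wrap the WAAPI call.

```javascript
// Returns a tick(cursorSec) function that posts events for items whose
// start position was crossed since the previous tick.
function createTrigger(items, postEvent, maxStepSec = 0.25) {
  const sorted = [...items].sort((a, b) => a.startSec - b.startSec);
  let lastPos = null; // cursor position at the previous tick
  let next = 0;       // index of the next item waiting to fire

  return function tick(cursorSec) {
    const seeked =
      lastPos === null ||
      cursorSec < lastPos ||
      cursorSec - lastPos > maxStepSec;

    if (seeked) {
      // After a jump, skip items that start before the cursor.
      next = sorted.findIndex((item) => item.startSec >= cursorSec);
      if (next === -1) next = sorted.length;
    }

    // Fire every item the cursor has reached or passed.
    while (next < sorted.length && sorted[next].startSec <= cursorSec) {
      postEvent(sorted[next].eventName);
      next += 1;
    }
    lastPos = cursorSec;
  };
}

// Simulated playback: cursor positions as a refresh loop might report them.
const posted = [];
const tick = createTrigger(
  [
    { eventName: "Play_A", startSec: 0.5 },
    { eventName: "Play_B", startSec: 0.5 },
    { eventName: "Play_C", startSec: 1.0 },
  ],
  (name) => posted.push(name)
);
for (const pos of [0.0, 0.2, 0.4, 0.52, 0.7, 0.9, 1.01]) tick(pos);
console.log(posted); // [ 'Play_A', 'Play_B', 'Play_C' ]
```

The maxStepSec guard treats a large forward jump as a seek, so moving the cursor across many items does not post all of them at once.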

Future Features

Establishing the Reaper-WAAPI bridge enables more parameters in Reaper to be converted into Wwise-related parameters, achieving the goal of controlling Wwise playback behavior from within Reaper. Ultimately, we hope Wwise works as an extension and auxiliary mixing console for Reaper. Future features:

- Control RTPCs with track envelope

- Control Switch & State changes via item

- Assign GO to tracks and update GO coordinate info in real time based on data captured by the Unity playback device

- Integrate ReaWwise's native features to enable seamless replacement of Reaper items and Wwise audio clips without any loss of quality. This allows you to restore the original sound in Wwise directly within Reaper, apply plug-in adjustments to the audio assets, and export the project back to Wwise with a single click.

Credits

Thanks to the tremendous support of @Chenggong Zhang. The Unity info extraction chain was developed by him. Also, he helped us solve numerous challenges during the development. He should have been credited as a co-author, but he preferred to stay in the shadows like a hidden guardian~
