Hello Wwisers!

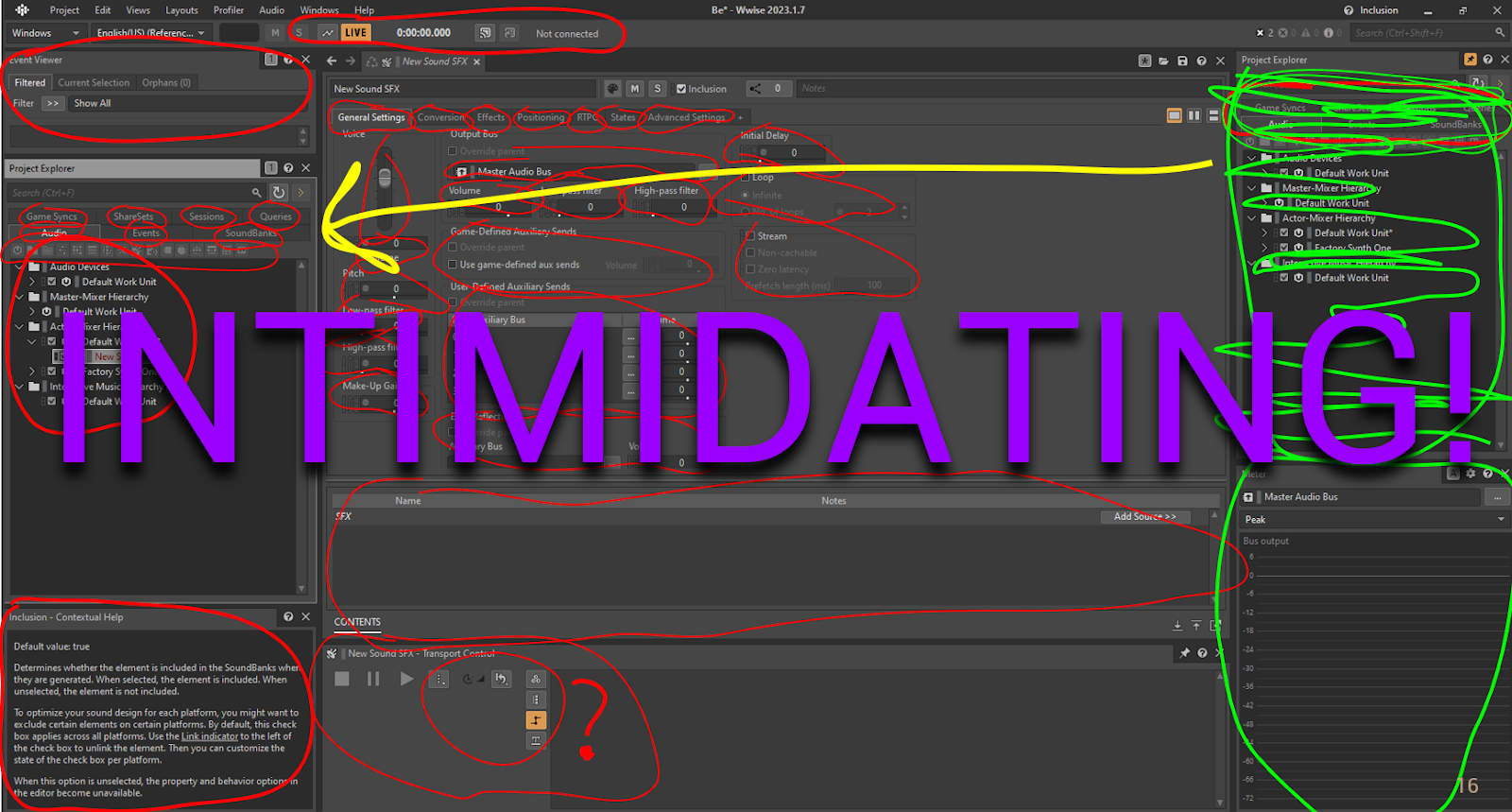

Wwise can be intimidating when you’re first starting out, and it’s easy to feel overwhelmed. It doesn’t have to be that way. In this article we’ll go through a simple, step-by-step approach to using Wwise and implementing audio into an Unreal Engine project – from importing sounds and setting up systems in Wwise, to calling them from the game engine.

I’m Alexander Hamparsomian, a freelance sound designer from Sweden. Several years ago I took Audiokinetic’s Wwise Fundamentals course. It was great for learning the essential features and workflows, but I was still left wondering: how are the actual game calls set up in the engine?

That’s the gap we’re going to fill here. To make it practical, we’ll use an Unreal Engine project called Implementournament. Along the way, I’ll also share some of my own thought process: how I approached designing sounds from the player’s perspective, focusing on how audio can support the gameplay, immersion, and overall experience of this game.

Wwise can be intimidating when first starting out.

Prerequisites

- Unreal Engine 5.3

- Wwise (latest version) + Wwise Fundamentals course

Getting Started

Implementournament was a competition created and hosted by Jon Kelliher and Avishai Karawan in the Airwiggles audio community. The goal was to help participants improve the technical side of game audio by designing assets, integrating them, and creating a playable build of the game.

Implementournament challenge info

The Game Audio Implementournament 2024: Complete Documentation explains how to download and set up the project.

Wwise Unreal Integration explains how to set up Wwise in the project and how to call events, game syncs, and so on.

The Game Audio Implementournament - Project Breakdown shows everything from how to play the game, to the blueprints, events, variables, and how they work.

Learn the Game First

At this stage I recommend that you play the game and get a feel for it.

Take notes on how the game works and what audio solutions you want to create. Start thinking: if this were a real game, what would I want players to experience, and how could I make it as immersive as possible for them? The thought process might go something like this:

“There is a lot of laser shooting – I’ll want plenty of variation to avoid repetition and keep it fresh.”

“Flying is a core mechanic. Boosting increases speed and triggers stronger visuals and particle effects; I can emphasize that feeling of speed with pitch modulation and dynamic layers.”

“Asteroids explode after a few hits and can be destroyed from far away – I can use filters, attenuation and delay to convey distance. To make the explosions feel even more impactful, I can duck other sounds.”

Try it Yourself

At this point, I really recommend you stop reading and try it out for yourself first. Part of the learning is in the problem solving. Start by setting up a simple event, and try to do something different with each new event you create. If you get stuck or need help, join the Airwiggles audio community (join anyway, it’s great). Everyone is very friendly and helpful, and if you search for “implementournament” you’ll find posts from when the competition ran that might already have your answer or give you inspiration.

In the rest of this post, I go through some of the events I set up and the thought process behind them. But really, try it out for yourself before reading further!

My Approach

For this project, I focused on the implementation side and kept the sound design to a minimum. I wanted to spend more time understanding how Unreal Engine communicates with Wwise: how to set up game calls, use the variables, and build the logic that makes everything work together.

Debugging with Print Strings

First, I like to understand how the events in Unreal Engine work and what values the variables have. To do this, I added Print String nodes to all the events in the Blueprints BP_Spaceship and BP_Asteroid.

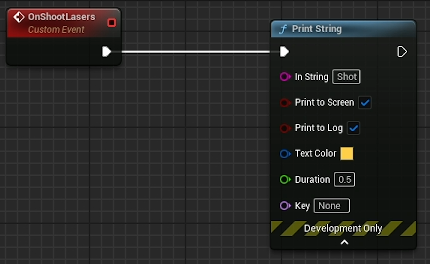

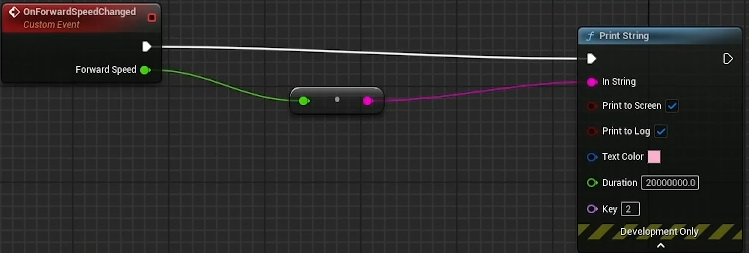

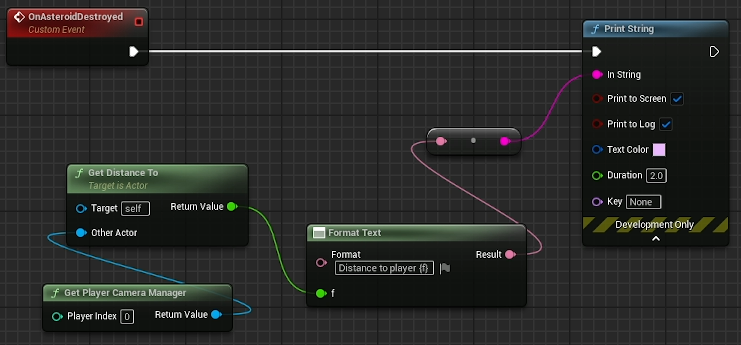

I’ll show three examples here: shooting lasers, engine forward speed, and asteroid destruction.

Prints “Shot” on the screen when the event is triggered.

Prints out the value of the Forward Speed variable.

Prints the distance from the player camera to the destroyed asteroid.

The following video shows the print strings in action for all the events.

Now that I know how the events trigger and what values the variables have, I can plan my Wwise setup.

Lasers

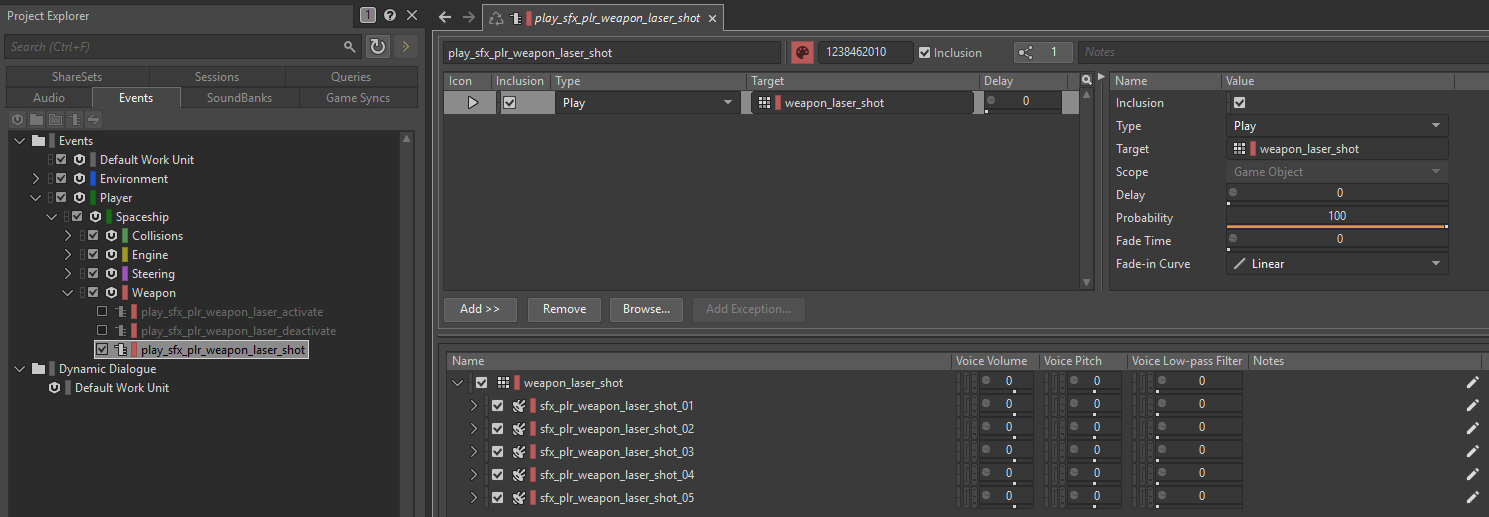

For shooting the lasers, I created a Random Container with variations to avoid repetition.

Laser shot Random Container with variations
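A Random Container picks one of its child sounds each time its event plays. To illustrate one way repetition can be avoided, here is a small Python model that never replays any of the last few picks. Wwise Random Containers offer a comparable “avoid repeating last x played” setting, but this class is a toy model, not Wwise code, and the variation names are hypothetical.

```python
import random


class RandomContainer:
    """Toy model of a Random Container that avoids replaying recent picks."""

    def __init__(self, variations, avoid_last=1, seed=None):
        if not 0 <= avoid_last < len(variations):
            raise ValueError("avoid_last must be between 0 and len(variations) - 1")
        self.variations = list(variations)
        self.avoid_last = avoid_last
        self.history = []  # every pick so far, newest last
        self.rng = random.Random(seed)

    def next(self):
        # Exclude the last `avoid_last` picks (guarded, because history[-0:] is the whole list).
        recent = self.history[-self.avoid_last:] if self.avoid_last else []
        candidates = [v for v in self.variations if v not in recent]
        choice = self.rng.choice(candidates)
        self.history.append(choice)
        return choice


# Hypothetical variation names for the laser shot.
laser_shot = RandomContainer(["laser_01", "laser_02", "laser_03", "laser_04"], avoid_last=2)
for _ in range(6):
    print(laser_shot.next())
```

With four variations and `avoid_last=2`, each shot is drawn from the two variations that were not used in the previous two shots, so the pattern stays unpredictable without immediate repeats.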

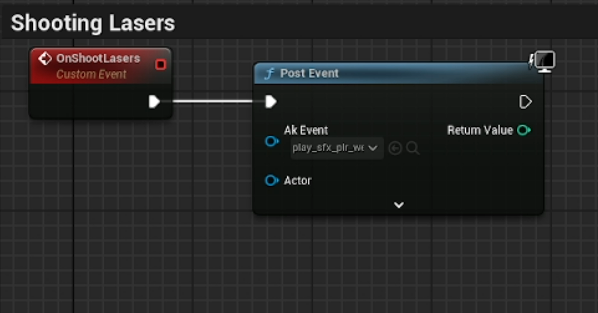

In Unreal Engine, I connected the OnShootLasers event to a Post Event node and selected the Wwise laser shot event. Every time the Unreal event is triggered, it plays the laser shot sound.

Unreal Engine, Post Event node with laser shot.

Engine

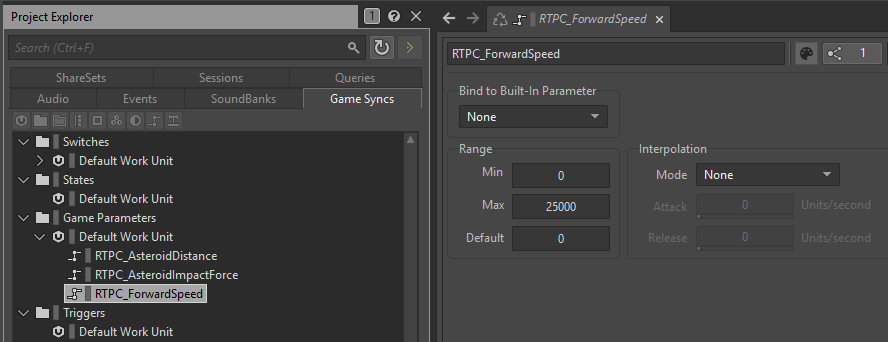

From the Forward Speed print string, I learned that regular thrust goes up to 12,000, while boost takes it to 25,000. I created a game parameter, “RTPC_ForwardSpeed”, with a minimum value of 0 and a maximum value of 25,000.

Game Parameter RTPC_ForwardSpeed ranging from 0-25,000.

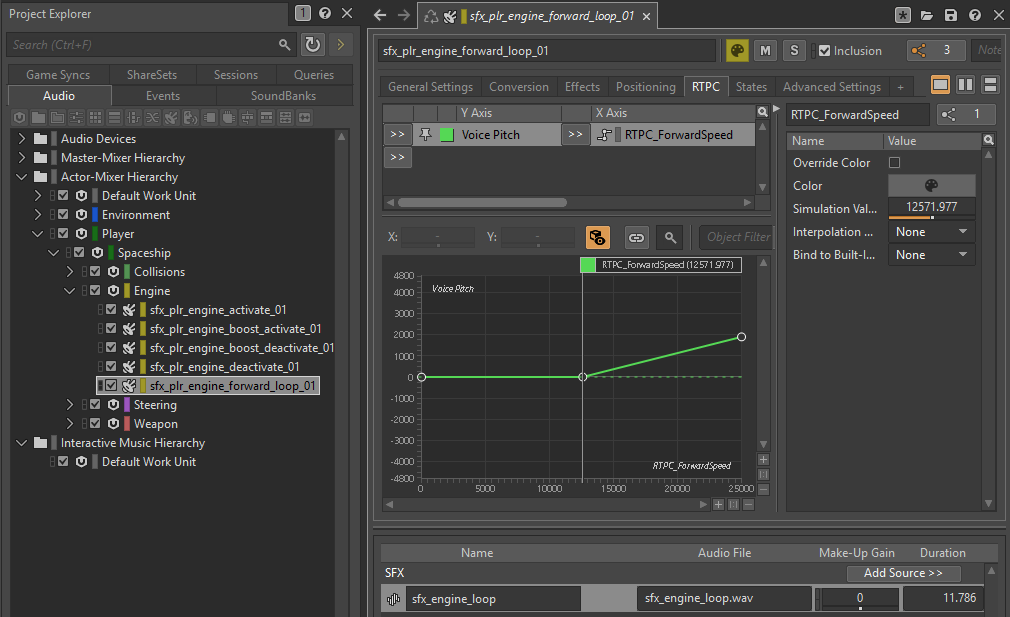

I connected it to my engine forward loop sound and added pitch modulation to enhance the sensation of acceleration. When boosting, the rising pitch makes the speed feel more intense. At maximum speed, the pitch is raised by 2,000 cents (I settled on this value after designing the engine loop sound and testing it).

Engine forward loop sound with RTPC modulating pitch. Values above 12,500 will increase the pitch.
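To get a feel for what the RTPC curve does, here is a minimal Python sketch of the mapping described above. It assumes a linear curve that stays flat up to 12,500 and rises to +2,000 cents (Wwise expresses pitch in cents) at 25,000; the curve shape in the actual project may differ. The helper converting cents to a playback-rate multiplier uses the standard relation 2^(cents/1200).

```python
def engine_pitch_cents(forward_speed, rtpc_min=0.0, rtpc_max=25_000.0,
                       pitch_start=12_500.0, max_pitch_cents=2_000.0):
    """Pitch offset in cents for a given RTPC_ForwardSpeed value (linear curve assumed)."""
    # Treat values outside the game parameter range as the nearest endpoint.
    speed = min(max(forward_speed, rtpc_min), rtpc_max)
    if speed <= pitch_start:
        return 0.0  # regular thrust range: pitch untouched
    t = (speed - pitch_start) / (rtpc_max - pitch_start)
    return t * max_pitch_cents


def cents_to_ratio(cents):
    """Playback-rate multiplier for a pitch shift in cents (1,200 cents = one octave)."""
    return 2.0 ** (cents / 1200.0)


for speed in (8_000, 12_500, 18_750, 25_000):
    cents = engine_pitch_cents(speed)
    print(f"{speed:>6} -> {cents:7.1f} cents ({cents_to_ratio(cents):.2f}x)")
```

Under these assumptions, a speed of 18,750 lands halfway up the curve at +1,000 cents, and +2,000 cents is roughly 3.2 times the original playback rate, which is a big jump and a good reason to tune the final amount by ear.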

In Unreal Engine I used the Set RTPCValue node for RTPC_ForwardSpeed, and triggered/stopped the engine loop based on the “Is Thrust Active” variable. I also added one-shot activate and deactivate sounds for both regular thrust and boost.

Unreal Engine, Set RTPCValue node with RTPC_ForwardSpeed

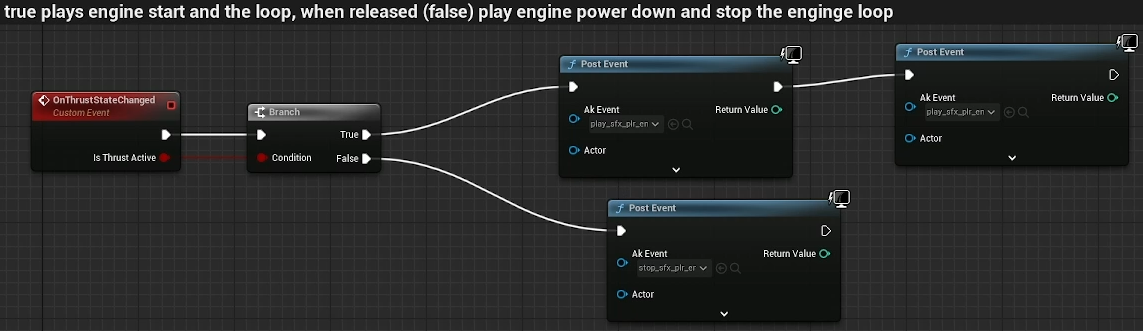

If “Is Thrust Active” is true, play regular thrust and engine forward loop. If false, stop engine forward loop and play engine deactivate.

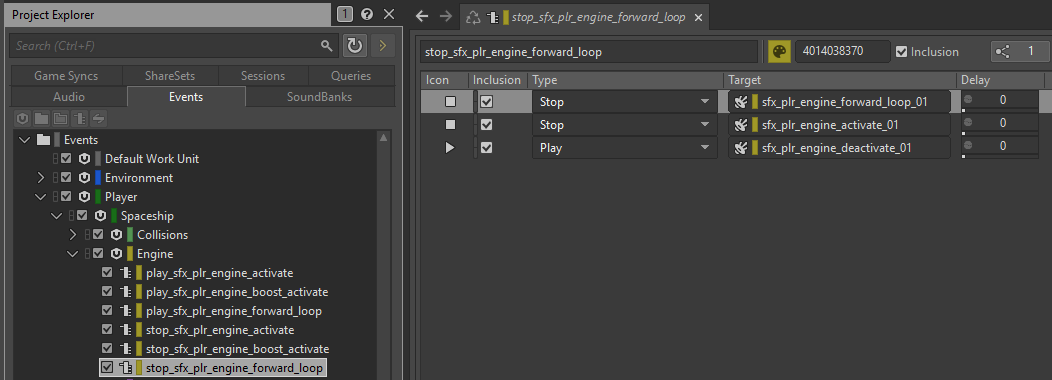

Stop engine forward loop event. Stops both the engine forward loop and the engine activate, and plays engine deactivate.
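The branch on “Is Thrust Active” can be sketched as a small state machine. The Python below is a hypothetical model of that Blueprint logic, not Unreal code: `post_event` stands in for a Post Event node, and the event names are made up. It also assumes you only want to react when the value changes, so the loop is not posted again on every tick; if the Blueprint already fires only on changes, the guard simply does nothing.

```python
class ThrustAudio:
    """Hypothetical model of the Is Thrust Active branch (not Unreal code)."""

    def __init__(self, post_event):
        self.post_event = post_event  # stands in for a Post Event node
        self.was_active = False

    def update(self, is_thrust_active):
        # Ignore repeated values so the loop isn't posted again while already playing.
        if is_thrust_active == self.was_active:
            return
        self.was_active = is_thrust_active
        if is_thrust_active:
            self.post_event("Play_Engine_Activate")
            self.post_event("Play_Engine_Forward_Loop")
        else:
            # A single Wwise event stops the loop and the activate sound, then plays deactivate.
            self.post_event("Stop_Engine_Forward_Loop")


posted = []
thrust = ThrustAudio(posted.append)
for value in (False, True, True, False):
    thrust.update(value)
print(posted)
# ['Play_Engine_Activate', 'Play_Engine_Forward_Loop', 'Stop_Engine_Forward_Loop']
```

Keeping the stop logic inside one Wwise event, as described above, means the Blueprint only needs a single call on the false branch.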

The following video shows the pitch modulation for the engine loop and all the other events connected to the engine:

Asteroid explosions

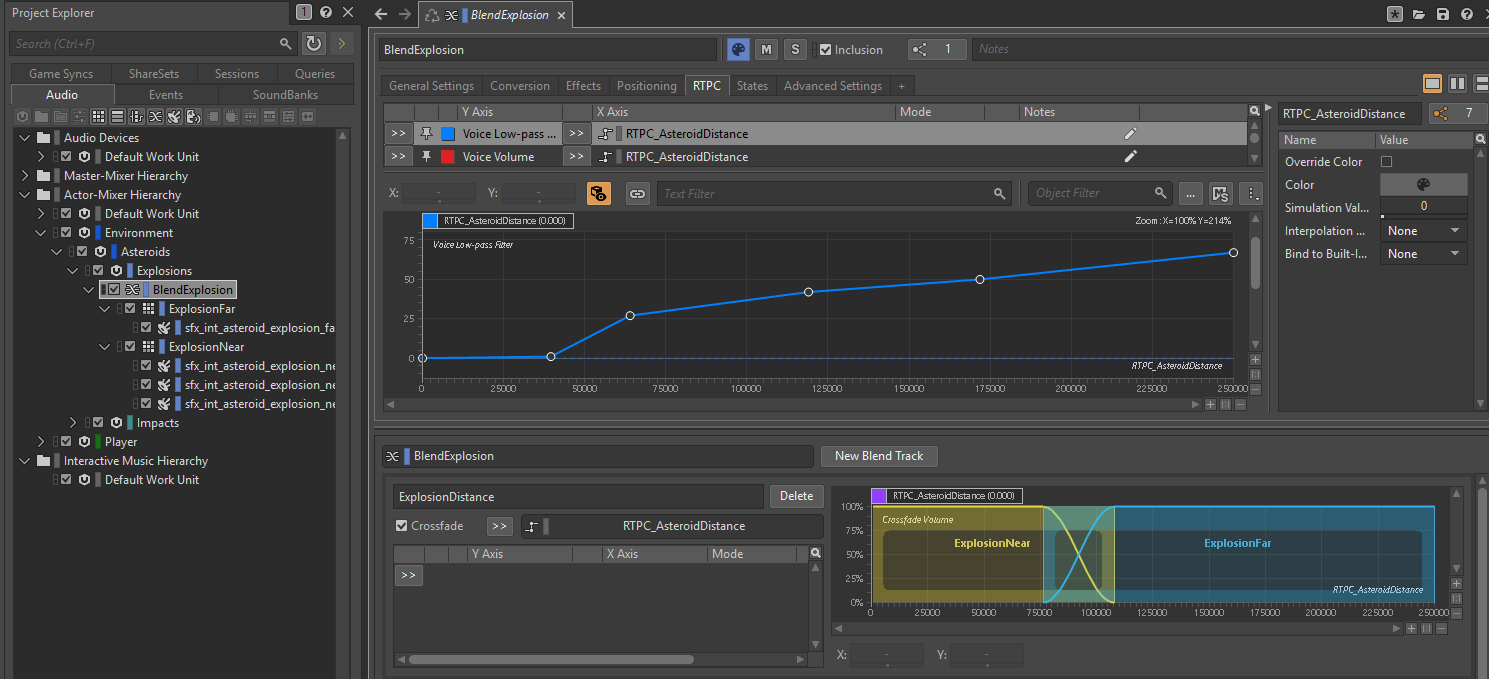

From the print string, I learned that the maximum hit distance is 250,000 units. I created RTPC_AsteroidDistance ranging from 0 to 250,000. To try something different, I connected it to a Blend Container that has near and far explosion sounds.

- 0-7,500: plays near explosion.

- 7,500-11,000: crossfade between near and far.

- 11,000+: plays far explosion.

Asteroid Explosions Blend Container with RTPC modulating frequency and volume.
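The three distance zones above can be modelled as two gain curves. This Python sketch assumes a linear crossfade between 7,500 and 11,000; the crossfade shape in the actual Wwise project may be set differently, so treat it as an approximation.

```python
def blend_gains(distance, fade_start=7_500.0, fade_end=11_000.0):
    """(near_gain, far_gain) for a linear crossfade; 1.0 = full level, 0.0 = silent."""
    if distance <= fade_start:
        return 1.0, 0.0          # near explosion only
    if distance >= fade_end:
        return 0.0, 1.0          # far explosion only
    t = (distance - fade_start) / (fade_end - fade_start)
    return 1.0 - t, t            # inside the crossfade zone


for d in (2_000, 7_500, 9_250, 11_000, 200_000):
    near, far = blend_gains(d)
    print(f"{d:>7}: near={near:.2f} far={far:.2f}")
```

A linear fade in amplitude dips slightly in loudness at the midpoint (both layers at 0.5); an equal-power shape (sine/cosine) is the usual alternative if the transition sounds hollow.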

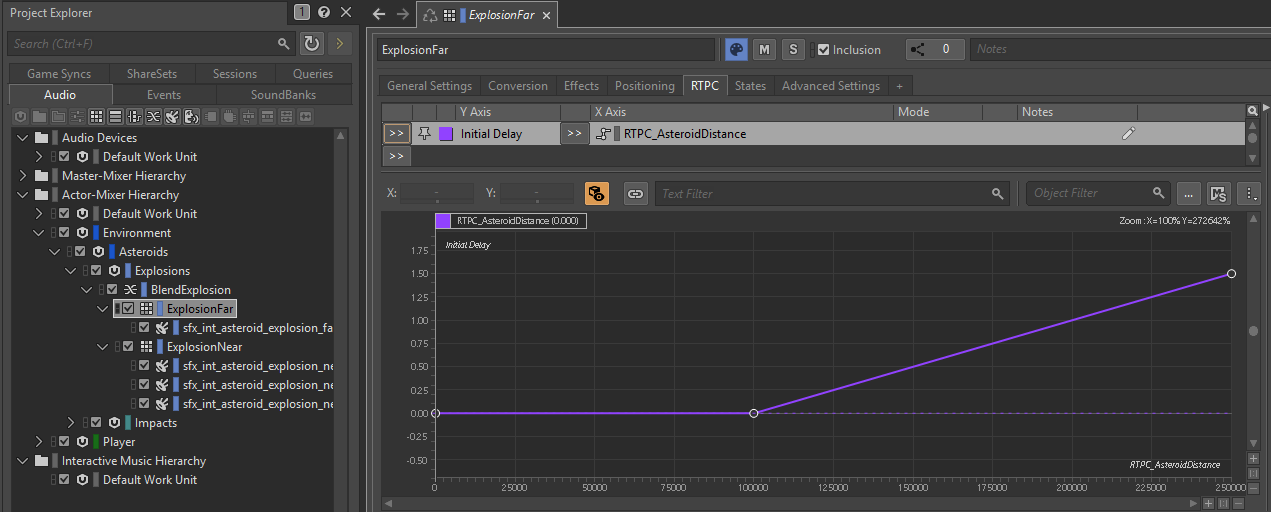

I also used RTPC_AsteroidDistance to apply a low-pass filter and reduce volume with distance, making far explosions sound bassier and quieter for a stronger sense of depth. For far explosions, I added an initial delay so the sound triggers later, reinforcing the idea that sound takes time to reach the listener.

Far explosion with RTPC modulating initial delay.
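Here is a hedged sketch of how one distance value can drive the filter, volume, and initial delay together. All curve endpoints below are hypothetical, since the values used in the project aren’t listed, and each curve is assumed linear over the full 0 to 250,000 range. For comparison, `physical_delay_s` computes the real-world travel time, assuming 1 Unreal unit = 1 cm (Unreal’s default scale) and a speed of sound of 343 m/s.

```python
def lerp_clamped(x, x0, x1, y0, y1):
    """Linearly map x from [x0, x1] to [y0, y1], clamping outside the range."""
    t = min(max((x - x0) / (x1 - x0), 0.0), 1.0)
    return y0 + t * (y1 - y0)


# Hypothetical curve endpoints, for illustration only.
MAX_DISTANCE = 250_000.0  # RTPC_AsteroidDistance maximum, in Unreal units
MAX_LPF = 60.0            # Wwise's low-pass filter property runs from 0 to 100
MIN_VOLUME_DB = -12.0
MAX_DELAY_S = 1.5


def explosion_params(distance):
    """Filter, volume and initial delay for a given asteroid distance."""
    return {
        "lpf": lerp_clamped(distance, 0.0, MAX_DISTANCE, 0.0, MAX_LPF),
        "volume_db": lerp_clamped(distance, 0.0, MAX_DISTANCE, 0.0, MIN_VOLUME_DB),
        "initial_delay_s": lerp_clamped(distance, 0.0, MAX_DISTANCE, 0.0, MAX_DELAY_S),
    }


def physical_delay_s(distance_uu, speed_of_sound_m_s=343.0):
    """Real-world travel time, assuming 1 Unreal unit = 1 cm."""
    return (distance_uu / 100.0) / speed_of_sound_m_s


print(explosion_params(125_000))
print(f"physical delay at max distance: {physical_delay_s(MAX_DISTANCE):.2f} s")
```

At the maximum hit distance the physical delay works out to about 7.3 seconds, which would feel disconnected from the shot; that is one reason to use a shorter, tuned delay curve rather than a physically exact one.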

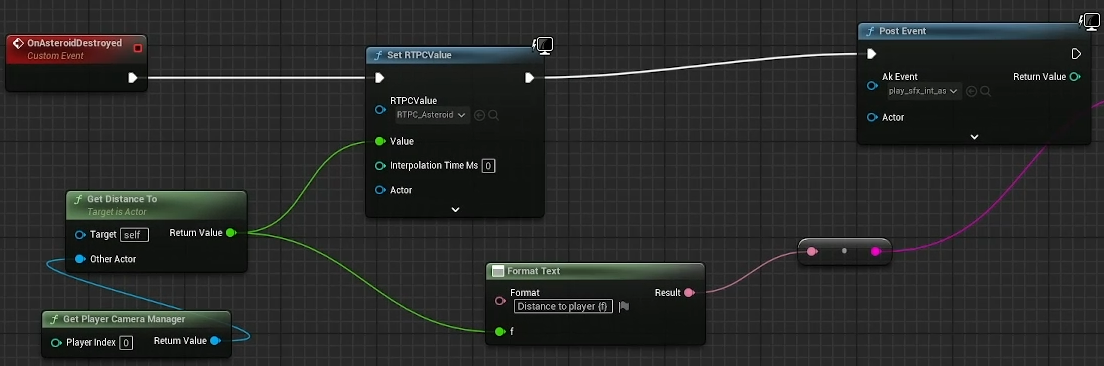

When OnAsteroidDestroyed triggers, Unreal measures the distance from the asteroid to the player camera using the Get Distance To node (self = asteroid, Other Actor = Player Camera Manager). That value is sent to RTPC_AsteroidDistance before the sound plays, so Wwise can immediately pick the correct Blend Container variation and apply the right filter, volume, and delay.

RTPC_AsteroidDistance is set before the Wwise event triggers.
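The ordering matters: the RTPC has to be set before the event is posted, otherwise the sound may start with the previous distance value. The Python below models that sequence with a fake sound engine that only records calls. `FakeSoundEngine`, `on_asteroid_destroyed`, and the event name `Play_Asteroid_Explosion` are hypothetical, `math.dist` plays the role of Get Distance To, and whether the RTPC is set globally or on the asteroid’s game object is left out.

```python
import math


class FakeSoundEngine:
    """Records calls instead of playing audio, so the call order can be inspected."""

    def __init__(self):
        self.calls = []

    def set_rtpc(self, name, value):
        self.calls.append(("set_rtpc", name, value))

    def post_event(self, name):
        self.calls.append(("post_event", name))


def on_asteroid_destroyed(engine, asteroid_pos, camera_pos, max_distance=250_000.0):
    # Like Get Distance To: straight-line distance between the two locations,
    # clamped to the RTPC's range.
    distance = min(math.dist(asteroid_pos, camera_pos), max_distance)
    # 1) Set the RTPC first, so the event starts with the right blend, filter, volume, delay.
    engine.set_rtpc("RTPC_AsteroidDistance", distance)
    # 2) Only then post the explosion event.
    engine.post_event("Play_Asteroid_Explosion")


engine = FakeSoundEngine()
on_asteroid_destroyed(engine, (30_000.0, 40_000.0, 0.0), (0.0, 0.0, 0.0))
print(engine.calls)
```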

The asteroids also have “health points”, meaning you need to hit them with the lasers a few times before they explode. I added impact sounds for those hits and implemented them in a similar fashion to the explosions.

The following video shows the Blend Container and how the filter, volume and delay settings are applied for asteroid explosions, and also the laser impacts:

Ducking

When I was creating a ducking system and setting up different mix busses, I thought about what I would want players to experience if this were a real game. I imagined it like this:

“When you’re boosting, you’re trying to get somewhere fast, maybe towards enemies or running away from them – engine should be in focus, so lower other sounds.”

“In battle, when asteroids or enemy ships explode, you should feel the danger – explosions in focus, lower other sounds as well as the engine.”

“When you collide with asteroids, there should be some feedback telling the player that this is not how to drive – lower the engine sound.”

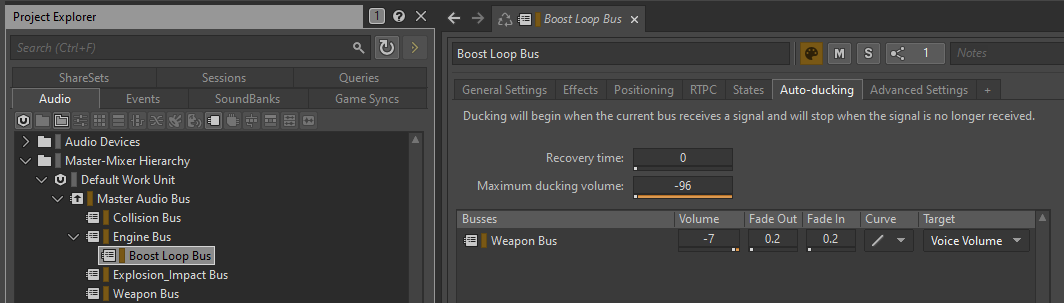

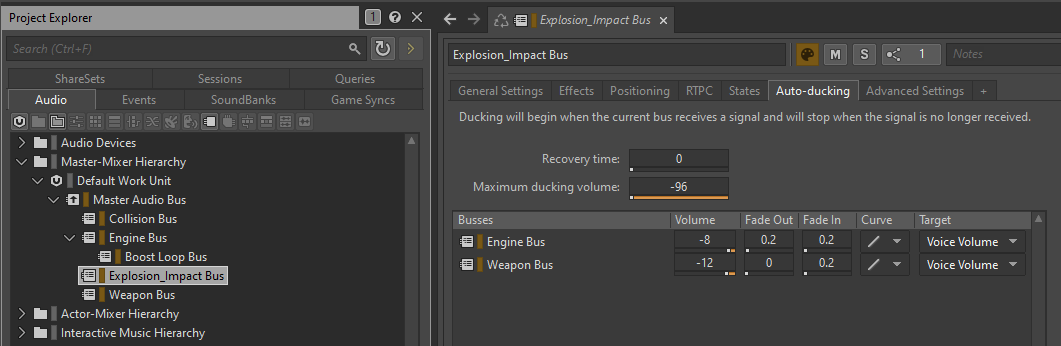

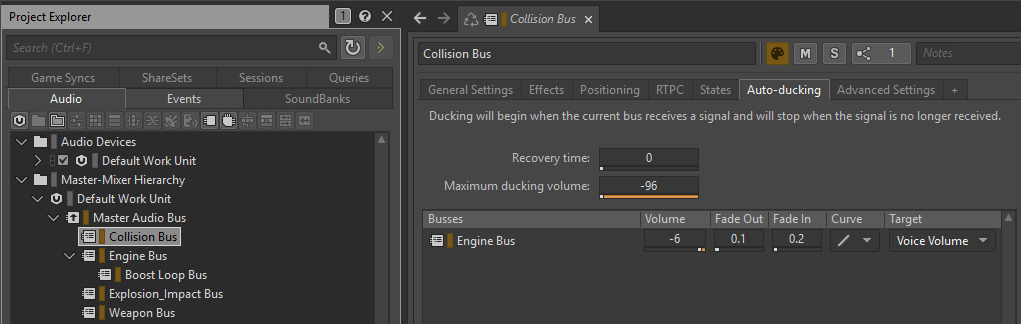

I created separate audio busses for the engine, boost, explosions, weapons, and collisions, and routed the respective sounds to them. I decided on these ducking rules:

- Boosting lowers weapons.

- Explosions lower engine and weapons.

- Collisions lower engine.

When engine boost is active, the weapons become lower in volume.

When asteroids explode, the engine and weapons become lower in volume.

When the player collides with asteroids, the engine lowers in volume.
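The three ducking rules can be written as a small lookup table. This Python sketch computes how much each bus is ducked given which busses are currently playing. The dB amounts are hypothetical, fades are ignored (Wwise auto-ducking also has fade-out and fade-in times), and when several rules target the same bus the sketch applies only the strongest duck; that is a simplifying assumption, not a statement about how Wwise combines ducks.

```python
BUSSES = ("Engine", "Boost", "Explosions", "Weapons", "Collisions")

# Hypothetical duck amounts in dB: {source bus: {target bus: attenuation}}.
DUCKING_RULES = {
    "Boost": {"Weapons": -6.0},
    "Explosions": {"Engine": -8.0, "Weapons": -8.0},
    "Collisions": {"Engine": -4.0},
}


def bus_ducking(active_busses):
    """Attenuation in dB (0.0 = untouched) for every bus, given the busses currently playing."""
    ducks = {bus: 0.0 for bus in BUSSES}
    for source in active_busses:
        for target, amount_db in DUCKING_RULES.get(source, {}).items():
            # Simplification: the strongest duck on a bus wins; ducks are not summed.
            ducks[target] = min(ducks[target], amount_db)
    return ducks


print(bus_ducking({"Engine", "Boost"}))
print(bus_ducking({"Engine", "Weapons", "Explosions", "Collisions"}))
```

Keeping the rules in one table makes it easy to check the mix intent at a glance: every source bus lists exactly what it pushes down.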

Here is the video showcasing the ducking system:

These are just some of the things I did for the Implementournament. I have a more in-depth breakdown that goes through the events in more detail, which can be viewed here:

Closing note

There is so much more you can do with this project. During the competition, people set up VO that triggered during certain events, added asteroid flyby sounds, and one person even made each asteroid into a radio beacon playing a new channel when you flew past it.

That is why I think the Implementournament is such a great project. It’s fun and flexible, and it gives you a complete view of how audio is implemented from start to finish. It lets you learn the tools, experiment, and think creatively as a sound designer and game developer.

If you choose to work in Wwise and are a beginner, just take it step by step. Start with a simple event, then try something different with each new one you create. Soon, you’ll see that Wwise isn’t as intimidating as it seemed at first, and you’ll start to enjoy the creative freedom it gives you to make your game’s audio come alive.

Thank you for reading and best of luck with your implementation!
