Wwise Unreal Integration Documentation

The goal of the AkAudioMixer module is to provide a platform-agnostic Unreal Audio Mixer, ultimately allowing the Unreal and Wwise audio mixers to run concurrently.

The Unreal Audio Mixer is already platform independent; that is, it works equivalently on Windows, Mac, Android, and consoles. Unreal achieves this platform independence with a Mixer Platform that handles the interaction between the platform’s hardware and the platform-independent code. Both the Unreal Audio Mixer and the Wwise SDK automatically initialize and handle a platform’s audio. However, most platforms cannot receive audio from multiple sources at once, especially consoles that initialize a single audio system: some will crash if it is initialized twice, and some will allow both systems to interact with the same objects blindly.

The solution proposed thus far by Wwise documentation has been to disable Unreal’s Audio Mixer when a project is expected to use Wwise (see Frequently Asked Questions). The AkAudioMixer module provides a concurrent, platform-agnostic solution, whereby Unreal's Audio Mixer default platform (XAudio2, SDL Audio, AudioOut, etc.) uses a Wwise platform instead. This allows Wwise to properly initialize the Platform Sound Engine, and allows Unreal to process audio.

This module provides two different input components, both processed through a basic AkAudioInputComponent.

See Posting Audio Input in the Wwise 301 course for more information.

FAkMixerPlatform allows the Wwise Sound Engine to act as a substitute for the platform-specific part of the Unreal Audio Mixer. Through a stereo feed, it’s then possible to retrieve the end result of the Unreal mixdown as a Wwise Audio Input.

The previous solution of disabling the Audio Mixer had limitations: no audio clock was provided to Unreal, so there was nothing to drive the audio feeds. For this reason, Unreal would not provide audio at all. Having something (FAkMixerPlatform) that drives the Audio Mixer means the entire Unreal audio subsystem stays fully functional, alongside the Wwise audio system.

The current solution only provides Unreal audio as stereo. It’s expected that this Unreal mixdown will be muted in Wwise, and will only be used as a makeshift solution. Having two different, concurrent spatialization methods will cause audio issues, hence this solution is provided as a quick workaround. Once the FAkMixerPlatform is properly set up, it’s expected that a project will use Unreal submixes when an actual input for an object is necessary.

AkSubmixInputComponent allows you to easily connect any Unreal audio submix to a Wwise Audio Input component. This makes it possible to retrieve part of a mix, such as a movie’s audio, and send it to Wwise individually. The submix can be provided in any channel configuration, as required by Wwise. Not surprisingly, there are limitations and performance considerations to pushing audio from Unreal to Wwise. It’s preferable to configure the entire flow directly in Wwise whenever possible.

Using a UAkSubmixInputComponent is the preferred method to retrieve Unreal audio from individual actors. Through the usual Wwise Unreal Integration, it’s possible to link the Audio Input Component with its actor position, and then use Wwise integrated spatialization, or even the object-based pipeline in Wwise 2021.1 and later, to achieve the project’s desired audio properties and quality.

This component does not require the FAkMixerPlatform; it can be used independently. However, be aware that initializing both Unreal’s platform-specific audio and Wwise’s platform-specific sink simultaneously will still cause some platforms to crash, while having two concurrent audio pipelines

Both FAkMixerPlatform and UAkSubmixInputComponent are initialized through a Wwise “Play” Event. This allows the Wwise Sound Engine to start polling the Unreal components.

It’s important to keep in mind that enabling the Event starts the polling process, and this process should never be virtualized: it’s either started or stopped, and the FAkMixerPlatform input component should always be playing, otherwise the Unreal audio pipeline will not work properly.

Once the initialization is done, the overall audio pipeline can be considered as a tree, executed every few milliseconds, to provide the next audio chunk to the hardware.

The tree starts with the platform hardware requesting an audio buffer from the Wwise Sink. Then the Wwise Sound Engine polls any playing Audio Input Components for their audio.

At this point, FAkMixerPlatform is polled, which requests the next buffer from the Unreal Master Submix. This, in turn, empties Unreal’s circular audio buffers, allowing the entire pipeline to continue working.
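The pull-driven tree described above can be sketched as a small, self-contained model. This is only an illustration: `RingBuffer`, `AudioInput` and `SinkRequest` are hypothetical names invented for this sketch, not part of the Wwise or Unreal APIs, and the real buffers are considerably more involved. It shows why the input must keep being polled: once polling stops, the producer side fills up and cannot accept more audio.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

// Hypothetical stand-in for the circular buffer that Unreal fills with mixed audio.
class RingBuffer {
public:
    explicit RingBuffer(std::size_t capacity) : capacity_(capacity) {
        assert(capacity > 0);
    }

    // Producer side (Unreal): accepts samples until the buffer is full and
    // returns how many were accepted. The remainder is rejected.
    std::size_t Push(const std::vector<float>& samples) {
        std::size_t accepted = 0;
        for (float sample : samples) {
            if (data_.size() == capacity_) break;
            data_.push_back(sample);
            ++accepted;
        }
        return accepted;
    }

    // Consumer side (Wwise poll): removes up to `count` samples and pads with
    // silence if fewer are available.
    std::vector<float> Pull(std::size_t count) {
        std::vector<float> out(count, 0.0f);
        for (std::size_t i = 0; i < count && !data_.empty(); ++i) {
            out[i] = data_.front();
            data_.pop_front();
        }
        return out;
    }

    std::size_t Size() const { return data_.size(); }
    bool Full() const { return data_.size() == capacity_; }

private:
    std::size_t capacity_;
    std::deque<float> data_;
};

// Hypothetical model of an Audio Input that is only polled while its Event plays.
struct AudioInput {
    RingBuffer* source;
    bool playing;
};

// One step of the tree: the hardware asks the sink for `frames` samples, and
// the sink polls the input only if it is playing.
std::vector<float> SinkRequest(AudioInput& input, std::size_t frames) {
    if (!input.playing) {
        return std::vector<float>(frames, 0.0f);  // nothing is pulled from Unreal
    }
    return input.source->Pull(frames);
}
```

In this model, each call to `SinkRequest` with `playing` set to `true` drains what Unreal pushed. With `playing` set to `false`, repeated pushes fill the buffer until `Push` starts returning 0.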

Having two sequential, concurrent systems will add some latency to the end mix. Unreal assumes the A/V component plays at its own speed, and will use a circular buffer to provide audio at the same speed that the Audio Mixer requests it.

The Unreal Master Submix also has a circular buffer set up in the Audio Mixer’s FOutputBuffer to ensure audio is provided at a valid speed.

Since the individual submixes are pushed by the Master Submix, and Wwise requests its buffers at the same time, it means both Unreal and Wwise push requests.

Finally, there is also Wwise’s Sink buffering, which can be customized by code.
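As a rough way to reason about the added latency, each buffering stage contributes its buffered frame count divided by the sample rate. The sketch below is a back-of-the-envelope helper, not a measurement tool: the function names are hypothetical, and the stage sizes used in the note that follows are assumed values for illustration only, not defaults taken from Unreal or Wwise.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Converts a number of buffered frames into milliseconds at a given sample rate.
double FramesToMs(std::size_t frames, double sampleRateHz) {
    assert(sampleRateHz > 0.0);
    return 1000.0 * static_cast<double>(frames) / sampleRateHz;
}

// Sums the latency contributed by each buffering stage in the chain
// (for example: source circular buffer, output buffer, sink buffer).
double TotalLatencyMs(const std::vector<std::size_t>& stageFrames,
                      double sampleRateHz) {
    double total = 0.0;
    for (std::size_t frames : stageFrames) {
        total += FramesToMs(frames, sampleRateHz);
    }
    return total;
}
```

For instance, three assumed stages of 1024, 1024 and 512 frames at 48 kHz add up to 2560 frames, or roughly 53.3 ms.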

The Wwise Audio Input source plug-in allows any input from external sources to be used inside Wwise. This is what’s used on the Wwise side for any given input.

In order to capture the Unreal Mix, we must create one Wwise Audio Input, as well as an Event that starts the data acquisition process.

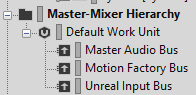

The recommended process is to create a bus in the Master-Mixer Hierarchy and a Sound SFX object that uses a Wwise Audio Input source plug-in. Yours can be named differently than in this example, and can be set up differently. What’s important is having an eventual connection to the Master Audio Bus, even if the audio as provided by this component is to be ultimately discarded. If you are muting the end result, you must make sure the voice is not virtualized, as this would effectively remove the audio polling necessary to keep the Unreal Audio pipeline running.

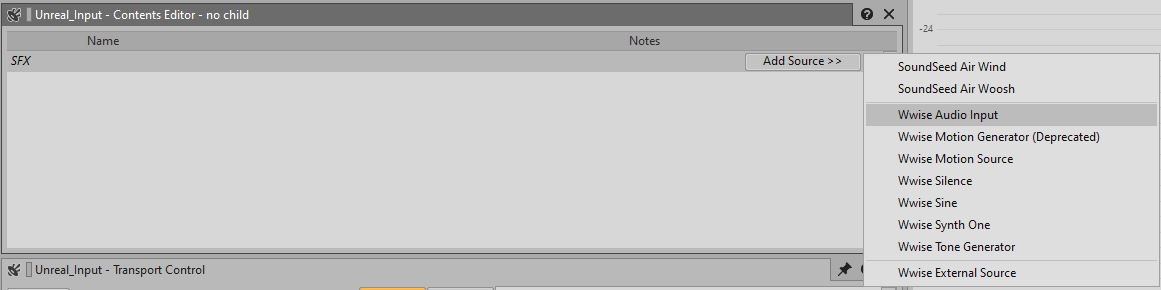

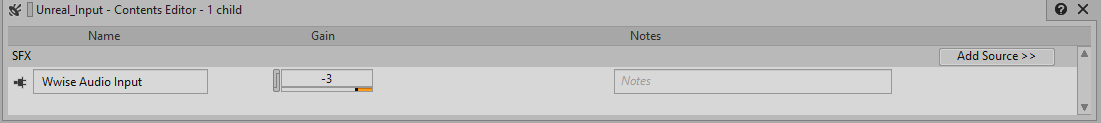

The creation of the Unreal_Input Source is straightforward. Once an empty SFX is created, you add a Wwise Audio Input source plug-in by selecting the “Add Source >>” button in the Contents Editor.

A volume offset can be set up at your leisure at the source level or directly on the Sound SFX object.

Once the Sound has its Audio Input source set up, you’ll need to route this sound to the appropriate Output Bus, which is the “Unreal Input Bus” in this example.

Finally, there must be an Event added that will Play this Sound SFX, otherwise the Audio Input will not be started. The playing of this Event is also used in Unreal to determine which Audio Input to use for the FAkMixerPlatform.

To create an Event targeting the Audio Input plug-in, select the Sound SFX, right-click and select New Event > Play. The Event will be automatically named Play_[SoundName], but it can be renamed to anything else.

Warning: The FAkMixerPlatform must be “Playing” at all times. This is what drives Unreal’s Audio Mixers to process audio for its components. Without the FAkMixerPlatform being played, Unreal’s circular buffers will fill up, potentially adding latency to your audio from this point on. If you want to mute this channel, make sure it’s still being played, and never virtualized or stopped due to lack of audio.

If your project uses Event-Based Packaging, the Event will be available automatically. If your project uses the standard SoundBank packaging, the first loaded SoundBank should contain the FAkMixerPlatform’s “Play” Event, and it should never be unloaded.

You must tell Unreal to use the Wwise audio mixer instead of the platform’s own Audio Mixer. For each platform, add the following lines to <Project>/Config/<Platform>/<Platform>.ini or <UE4_ROOT>/Engine/Config/<Platform>/<Platform>Engine.ini. Note that the project settings will override the engine settings. You may need to create the file if it does not yet exist.

Then, once you have the proper Wwise Integration activated, you must set the previously created Play Event as the Audio Input Event in your Project Settings. This is what binds the FAkMixerPlatform component in your Unreal project to the Wwise sound engine.

That’s it for the basic setup! At this point, you should generate the SoundBanks in Wwise (or in the Unreal Waapi Picker) and restart the Unreal Editor. Note that a restart is required each time you change the Audio Input Event, as it’s only initialized once. If you start the Profiler on the Unreal Player in Wwise Authoring, you should be able to see the meters of your Audio Busses moving.

Unreal determines how to encode audio through the ITargetPlatform interface. On each platform, the GetWaveFormat and GetAllWaveFormats methods are called by the Derived Data system in order to determine which audio format is supported. This is not overridden by the AkAudioMixer system. You should make sure a supported, generic format is provided through this function, either by overriding the necessary files yourself, or by making sure the conditions are met to use generic encoding, and not any platform-specific encoding.

Otherwise, if you’re comfortable modifying Unreal source code, you can change the target platform’s audio formats in the following file pattern: Engine/Platforms/<PlatformName>/Source/Developer/<PlatformName>TargetPlatform/Private/<TargetName>TargetPlatform.cpp

Ensure the platform’s audio format is equivalent to the one provided in the FMixerPlatformWwise’s CreateCompressedAudioInfo method as found in this file:

Plugins/Wwise/Source/AkAudioMixer/Private/AudioMixerPlatformWwise.cpp

The Wwise project preparation for any UAkSubmixInputComponent is similar to the one for FAkMixerPlatform: in your project, you must create a new Wwise Audio Input Source, and have an Event to be played. This Event will be bound to your Unreal project later on in a Blueprint you must create yourself.

Warning: As with the FAkMixerPlatform input, you need to make sure the Wwise object is never virtualized or stopped on its own. It must be Unreal that plays or stops the Event. Otherwise, buffers will keep on filling up on the Unreal side, causing lags and memory usage spikes.

This first part allows you to set up your Unreal project to override the default Master Submix and customize the Audio Submix flow. If you only complete this step while keeping the new Master Submix as-is, without any tree inside the environment, there will be no audible changes between the Default Master Submix and your new submix. You can have only one Master Submix per Unreal project; it will be shared by all the worlds.

Create a new “Sound Submix” in your project that will serve as the “Master Submix”. You can use any name, but it should still convey that it’s the Master. We named it “MasterSubmix” in this example.

Go to your project’s Audio Settings, in the Mix section, and set the Master Submix to your newly created Audio Submix.

At this point, you have an empty custom Master Submix, and you have no special routing. Your project should sound identical to a version with the Default Master Submix.

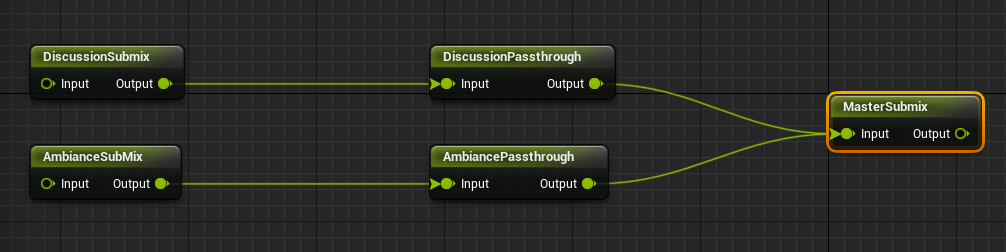

In order to efficiently capture the audio of a group of actors inside Unreal, you must create two new submixes for each submix category. Due to the way submixes are handled in Unreal, we must have a hierarchy of two distinct submixes. This makes sure our submix audio is not pre-mixed with anything other than its own audio.

For consistent naming, we use the submix name for the first submix and suffix it with “Passthrough” for the second one. In our example, we used AmbianceSubmix and AmbianceSubmixPassthrough, respectively. You are free to use any name you need for your own submixes.

Open the custom Master Submix, as defined in the Creating and Using the Master Submix section. Then, add a graph where each Passthrough submix sits between its real submix and the Master Submix. You can have more submixes, and you can define any given order for the submixes. The important part is to have a Submix -> Passthrough -> Next in Tree order.

For example, you can have the following graph if you have two different submixes:

At this point, the submixes are unused and unassigned. Your project will sound exactly as it did before, since all audio from all objects will still be routed to the Master Submix. However, once actors send their audio to their own custom submix, such as the DiscussionSubmix and AmbianceSubmix as defined here, audio will be sent to both the Discussion UAkSubmixInputComponent and the Master Submix, causing duplicated audio.

To prevent this duplication, you need to set the Output Volume of each Passthrough submix to 0. This will ensure that no audio will be sent to the Master Submix during mixdown, while the entire audio of the Discussion and Ambiance submixes will be delivered to a custom Wwise Audio Input component.
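The effect of the Submix -> Passthrough -> Master routing can be illustrated with a simplified gain model. This is a sketch under stated assumptions, not how Unreal mixes internally: the `Submix` struct and `Output` function are hypothetical, and audio is reduced to a single level value per submix. It assumes the capture point reads the real submix’s output, before the Passthrough applies its Output Volume.

```cpp
#include <cassert>
#include <vector>

// Hypothetical, heavily simplified submix node.
struct Submix {
    float outputVolume = 1.0f;            // gain applied to what this node sends onward
    float sourceLevel = 0.0f;             // audio from assets assigned directly to it
    std::vector<const Submix*> children;  // submixes that feed into this one
};

// Level leaving a submix: its own sources plus its children's outputs,
// scaled by its Output Volume.
float Output(const Submix& submix) {
    assert(submix.outputVolume >= 0.0f);
    float sum = submix.sourceLevel;
    for (const Submix* child : submix.children) {
        sum += Output(*child);
    }
    return sum * submix.outputVolume;
}
```

With an ambiance submix at level 0.5 feeding a Passthrough whose Output Volume is 0, and a master carrying 0.25 of other audio, `Output` of the ambiance submix is still 0.5 (what would be captured), while `Output` of the master is 0.25, with no duplicated ambiance. Setting the Passthrough volume back to 1 raises the master to 0.75.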

Adding audio to a submix is very easy. In Audio Effects, in the Submix tab, set the asset’s submix to the submix you wish to use.

Warning: If you still want to go with the multiple-assets-for-one-submix approach, it might be preferable to have the Play Event executed at level start, and the Stop Event executed at level end. This will have obvious performance consequences, as the audio channel will be open permanently in that level, so if you have more than a few, they will all push audio to Wwise that will need to be mixed. Another possibility is to have one master component that decides the life cycle of the audio data transmission, while having more than one sub-component provide audio whenever necessary.

In this example, we imported a new WAV file to the Unreal Content Browser, and we changed the submix to define the final destination.

If you are following the tutorial in order, playing back this SoundWave asset at this point will not produce any audio: we set the Passthrough volume to 0, so the audible track doesn’t go to the Master Submix, and we have not yet set up the Event to Play and Stop the required Audio Component.

It is also not set up as anything other than a component, so it’s not used inside your current Unreal world. We will perform these steps in the following section.

This section shows an example of how to set up the UAkSubmixInputComponent in order to bind the created submixes to the Wwise Audio Input Component. This is a usage example, and you are free to set it up as you want, depending on your project’s specific requirements.

In this tutorial, we are providing audio through an AmbientSound actor, but it’s possible to use any other Audio Component, or even Audio-Video components, to play back audio to submixes.

First, you must create a new Blueprint class, with the Unreal AmbientSound as parent class. Notice there’s an AkAmbientSound actor that’s also accessible. This one is used to create a Wwise-specific Ambient Sound, so it has no purpose for this tutorial.

Note: For performance purposes, it’s highly recommended to use an AkAmbientSound whenever possible, but it is unnecessary in the case of the AkAudioMixer, as the audio would already be played and rendered in Wwise.

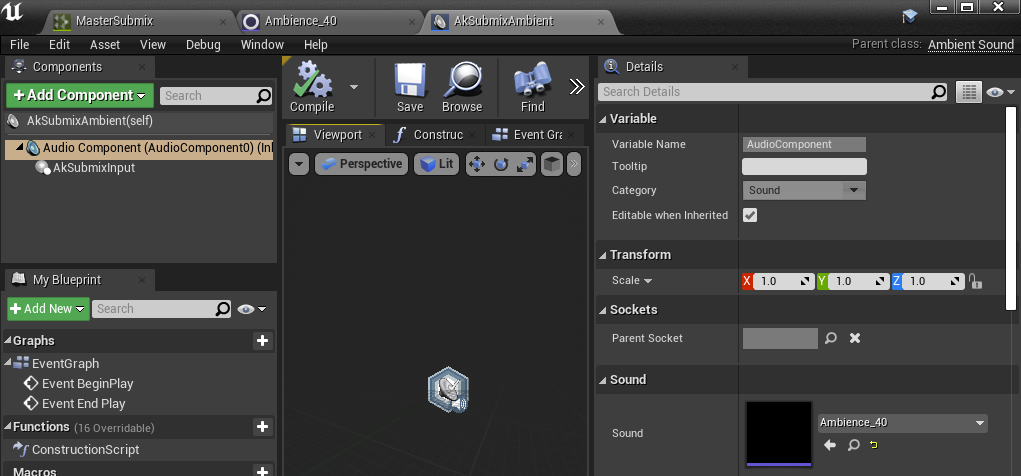

For this example, we named the AmbientSound actor component “AkSubmixAmbient”. You can name it according to your own naming schemes.

Link the previously created SoundWave to the AmbientSound object in the Sound section (as seen in the bottom-right part of the following figure). This is still a generic component, so it’s simply used as a default value.

We must have an Unreal component that controls the Play and Stop Events, and binds the submix to a Wwise Audio Input Component. This is done through the AkSubmixInput Audio Component.

In your AkSubmixAmbient Blueprint, under the root component, add the AkSubmixInput component.

In the Event Graph, be sure to post the Ak Submix Input component’s event on BeginPlay, and stop the component on EndPlay.

This makes sure audio data transmission is active once BeginPlay is done, and stops it as soon as EndPlay is reached. This is where you can be creative for optimization purposes. If you want to optimize this beyond the default behavior, follow your own actor’s life cycle: start and stop the Audio Input processing only when it’s needed.

You can now compile and save the Blueprint.

Drag and drop your new Blueprint into the level to instantiate it.

In the Details panel, set the SoundWave of your choice in the Sound property. This is the real value, not the default one.

Select the AkSubmixInput component.

Now, this is where you will define the Ak Audio Event to start playing the Wwise Audio Input Component, and the Submix to Record on this Component. Select the proper Submix to Record in the Submix Input section, and select the Ak Event to Play the Audio Input.

That’s it! At this point, the submix should be used to play the Ambient sound, the submix should be muted (since the output volume is set to 0 in the Submix tree), and the Audio Input should be properly used in Wwise to play back the audio for that object.
