Object-Based Sound Systems

The market's demand for immersive audio experiences has driven an array of speaker configurations that go far beyond 7.1, adding a height dimension alongside more speakers in the horizontal plane. This has led surround sound systems such as Dolby Atmos and DTS:X, as well as emerging distribution standards like MPEG-H, to adopt the concept of audio objects in order to abstract away the speaker configuration onto which spatialized audio content is rendered.

A key distinction between channel-based and object-based audio is that channel-based audio relies on a fixed number of channels from production to playback: mixes are designed for a specific speaker configuration, or must be downmixed to accommodate simpler setups. Object-based audio, by contrast, requires that ‘audio objects’ (i.e., a mono source plus 3D coordinates relative to the listener) be preserved and passed to the renderer, which spatializes them over the user's setup. In other words, object-based audio keeps objects, or audio elements, as individual streams, each carrying metadata that describes where it should play in three-dimensional space, independently of the speaker setup. Audio objects can be placed precisely during production, and each object is rendered in real time to the right combination of speakers through a process called adaptive rendering, which adapts the mix to the speaker layout at hand. Adaptive rendering also permits adjustments to the mix on the receiving end.
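To make adaptive rendering more concrete, here is a minimal sketch in Python. It assumes a horizontal-only speaker ring and uses simple pairwise constant-power panning as a stand-in technique; the actual renderers behind Dolby Atmos, DTS:X, or MPEG-H are far more elaborate (they handle elevation, among other things). `AudioObject`, `pan_gains`, and `render_object` are hypothetical names. The point is that the same object, with the same metadata, can be rendered to any layout at playback time.

```python
import math
from dataclasses import dataclass


@dataclass
class AudioObject:
    """Hypothetical object: a mono signal plus its direction relative to the listener."""
    samples: list[float]
    azimuth_deg: float  # 0 = front, positive = counterclockwise (towards the left)


def pan_gains(azimuth_deg, speaker_azimuths_deg):
    """Constant-power gains for an object on a horizontal ring of speakers.

    Only the two speakers adjacent to the object's direction receive signal,
    and their squared gains sum to 1.
    """
    n = len(speaker_azimuths_deg)
    assert n >= 2 and len({a % 360 for a in speaker_azimuths_deg}) == n, \
        "need at least two speakers at distinct angles"
    # Visit the speakers in angular order so that consecutive entries are neighbours.
    order = sorted(range(n), key=lambda i: speaker_azimuths_deg[i] % 360)
    az = azimuth_deg % 360
    gains = [0.0] * n
    for k in range(n):
        i0, i1 = order[k], order[(k + 1) % n]
        a0 = speaker_azimuths_deg[i0] % 360
        span = (speaker_azimuths_deg[i1] - a0) % 360  # arc from speaker i0 to i1
        offset = (az - a0) % 360
        if offset <= span:
            frac = offset / span  # 0 at speaker i0, 1 at speaker i1
            gains[i0] = math.cos(frac * math.pi / 2)
            gains[i1] = math.sin(frac * math.pi / 2)
            break
    return gains


def render_object(obj, speaker_azimuths_deg):
    """Adaptive rendering of one object: one output signal per speaker of the layout."""
    return [[g * s for s in obj.samples]
            for g in pan_gains(obj.azimuth_deg, speaker_azimuths_deg)]


# The same object rendered to two different layouts (illustrative angles).
obj = AudioObject(samples=[1.0, 0.5], azimuth_deg=15.0)
stereo = render_object(obj, [30, -30])
seven = render_object(obj, [0, 30, -30, 90, -90, 135, -135])
```

Only the speaker list changes between the two calls; the object and its metadata are untouched, which is what lets the renderer rather than the mix decide how to use the available speakers.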

The object paradigm translates well to binaural virtualization of audio, which is common in VR. This process is typically implemented by filtering each individual audio object with a head-related transfer function (HRTF), whose frequency response depends on the 3D position of the source relative to the listener.
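The per-object binaural process can be sketched as follows, assuming a tiny hypothetical HRIR table (`TOY_HRIRS`) with nearest-neighbour lookup. Real HRIRs are measured, are typically hundreds of taps long, and are usually interpolated between measured directions; the two-tap filters below only mimic a level and time difference between the ears. Note that each object is filtered according to its own direction before anything is summed, which is why the object must still exist at this stage.

```python
def convolve(x, h):
    """Direct-form linear convolution (output length len(x) + len(h) - 1)."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


# Hypothetical toy HRIR table: azimuth (deg) -> (left-ear IR, right-ear IR).
TOY_HRIRS = {
    0:   ([1.0, 0.0], [1.0, 0.0]),
    90:  ([1.0, 0.0], [0.0, 0.4]),   # source on the left: right ear is quieter and later
    -90: ([0.0, 0.4], [1.0, 0.0]),   # source on the right: mirror image
    180: ([0.8, 0.0], [0.8, 0.0]),
}


def nearest_hrir(azimuth_deg):
    """Pick the table entry whose azimuth is angularly closest to the object's."""
    def angular_distance(a):
        d = abs(a - azimuth_deg) % 360
        return min(d, 360 - d)
    return TOY_HRIRS[min(TOY_HRIRS, key=angular_distance)]


def binauralize(objects):
    """objects: list of (mono_samples, azimuth_deg). Returns (left, right) ear signals."""
    ir_len = max(len(h[0]) for h in TOY_HRIRS.values())
    length = max(len(s) for s, _ in objects) + ir_len - 1
    left, right = [0.0] * length, [0.0] * length
    for samples, az in objects:
        # Direction-dependent filtering happens per object, before any summing.
        h_left, h_right = nearest_hrir(az)
        for out, h in ((left, h_left), (right, h_right)):
            for n, v in enumerate(convolve(samples, h)):
                out[n] += v
    return left, right
```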

The Drawbacks of Preserving Objects

While object-based audio aims to optimize the quality of the rendering from a spatial perspective, preserving objects constrains the audio design process, because it is incompatible with important audio authoring practices.

For example, sound designers may wish to control the dynamic range of a group of sounds. They typically do so by inserting a compressor on a bus that sums its inputs, in other words, a bus that creates a submix. Submixing is also used for various other purposes, such as:

- Performance

- Side-chaining

- Mastering

- Metering (including loudness metering)

- Grouping
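The reason the compressor needs a submix can be shown with a minimal sketch: its gain is computed from the summed signal, so every sound in the group is attenuated together. The helpers `mix` and `compress` and the sample values are hypothetical, and the compressor is a static hard-knee design with no attack or release smoothing, which a real dynamics processor would add.

```python
import math


def mix(*inputs):
    """Sum several signals sample by sample: this is the submix a bus produces."""
    length = max(len(x) for x in inputs)
    return [sum(x[n] for x in inputs if n < len(x)) for n in range(length)]


def compress(signal, threshold_db=-12.0, ratio=4.0):
    """Static hard-knee compressor: levels above the threshold are reduced by `ratio`."""
    out = []
    for s in signal:
        level_db = 20 * math.log10(abs(s)) if s != 0 else -math.inf
        over = level_db - threshold_db
        gain_db = -over * (1 - 1 / ratio) if over > 0 else 0.0
        out.append(s * 10 ** (gain_db / 20))
    return out


# Two hypothetical sounds routed to the same bus.
dialogue = [0.2, 0.5, 0.1]
effects = [0.3, 0.6, 0.0]
# The gain depends on the *sum*, so it can only be computed after mixing.
bus_output = compress(mix(dialogue, effects))
```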

In Wwise, busses of the Master-Mixer Hierarchy serve these purposes. A bus becomes a mixing bus only when it needs to, for example, when an Effect is inserted on it.

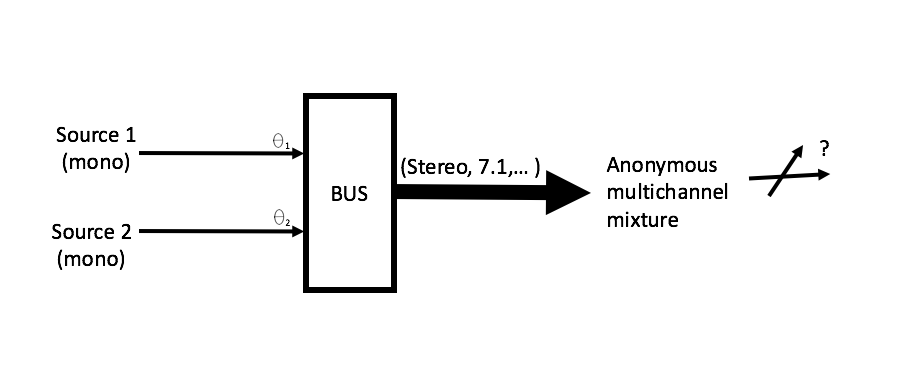

Now, say you route two 3D sources to a mixing bus. The audio data at the output of the bus is a superposition of multiple sources (objects), from which the individual directional components can no longer be recovered.

Figure 1 – Two sources with different incident angles are panned and mixed onto a multichannel bus. Panning converts the spatial representation of the input (here, an angle of incidence θᵢ) into that of the output (for example, by distributing the signal across two or more speakers of a 7.1 setup). The output is a mixture of panned objects and does not have a distinct angle of incidence (it is not an object in the sense given earlier).
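The loss of directional information can be demonstrated with a small sketch. For brevity it pans onto a stereo pair at ±30° rather than a 7.1 layout, using constant-power panning (an assumption; `pan_stereo` and `bus` are hypothetical helpers). Two different scenes, two objects hard left and hard right versus one louder object in the center, produce the same bus output, so the bus signal alone cannot tell you which incidence angles went into it.

```python
import math


def pan_stereo(samples, azimuth_deg):
    """Constant-power pan onto a stereo pair at +30 deg (left) and -30 deg (right)."""
    frac = min(max((30.0 - azimuth_deg) / 60.0, 0.0), 1.0)  # 0 = left, 1 = right
    g_left, g_right = math.cos(frac * math.pi / 2), math.sin(frac * math.pi / 2)
    return [g_left * s for s in samples], [g_right * s for s in samples]


def bus(*panned):
    """Sum panned (left, right) pairs into one stereo submix."""
    left = [sum(vals) for vals in zip(*(p[0] for p in panned))]
    right = [sum(vals) for vals in zip(*(p[1] for p in panned))]
    return left, right


s = [0.5, -0.25, 1.0]
# Scene A: two objects with the same signal, one hard left and one hard right.
scene_a = bus(pan_stereo(s, 30.0), pan_stereo(s, -30.0))
# Scene B: a single, louder object straight ahead.
scene_b = bus(pan_stereo([math.sqrt(2) * x for x in s], 0.0))
```

Both scenes leave the same signal on each bus channel (up to floating-point rounding), so no processing downstream of the bus can know whether it came from two side objects or one center object.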

Therefore, object-based audio is incompatible with submixes: objects exist only upstream of mixing, which prevents the sound designer from using authoring practices habitually applied to submixes.

Case Study

The mixer plug-in interface in Wwise is an object-based plug-in architecture: mixer plug-ins receive individual sound sources, or ‘objects’, so that they can access the 3D position of each input and produce binauralized audio. While mixer plug-ins appear to be a great framework for implementing binaural virtualizers, using them imposes some constraints on the design process due to the limitations of object-based audio:

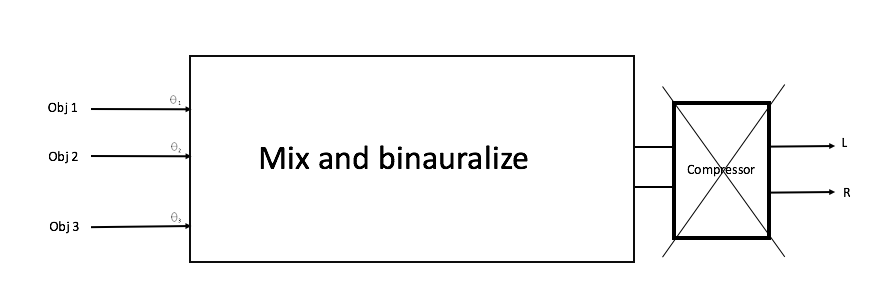

1. You should not add Effects to a binaural signal (at least not non-linear Effects such as compressors), because they can break the spatial audio illusion you are trying to achieve with binaural processing.

Figure 2 – Incorrectly adding a compressor Effect downstream on the binaural signal at the output of the mixer plug-in.

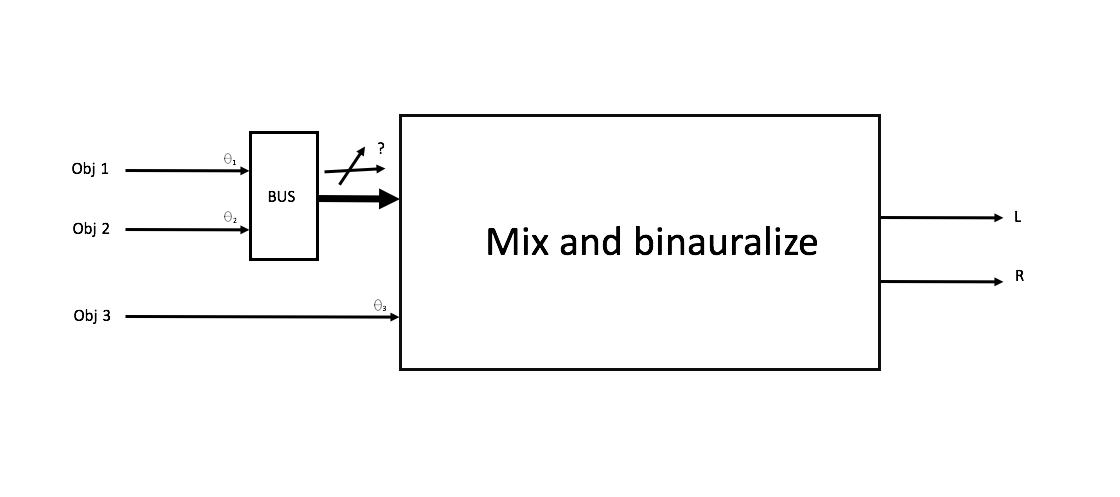

2. You should not use mixing busses upstream of the mixer plug-in, because the resulting submix will no longer contain the 3D information needed for binaural processing.

Figure 3 – Mixing objects upstream of the mixer plug-in. The submixed signal does not carry a single object and, therefore, cannot be binauralized by the plug-in.
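One concrete way a compressor can damage binaural cues is sketched below, assuming a static hard-knee compressor applied independently to each ear's channel (unlinked). Binaural rendering encodes direction partly as an interaural level difference (ILD); because the louder ear is attenuated more than the quieter one, the ILD shrinks and the source is pulled toward the center. The values and the `compress_sample` helper are hypothetical. A stereo-linked compressor would avoid this particular effect.

```python
import math


def db(x):
    """Amplitude to decibels (x must be non-zero)."""
    return 20 * math.log10(abs(x))


def compress_sample(s, threshold_db=-20.0, ratio=4.0):
    """Static hard-knee compressor applied to one sample (no attack/release)."""
    over = db(s) - threshold_db
    return s * 10 ** (-over * (1 - 1 / ratio) / 20) if over > 0 else s


# A source on the listener's left: the binaural renderer made the left ear 12 dB louder.
left = 0.5
right = 0.5 * 10 ** (-12 / 20)
ild_before = db(left) - db(right)
# Unlinked compression: each ear's channel is compressed on its own level.
ild_after = db(compress_sample(left)) - db(compress_sample(right))
```

With both ears above the threshold, the level difference is divided by the ratio, so the 12 dB ILD becomes 3 dB.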

The Trade-Off

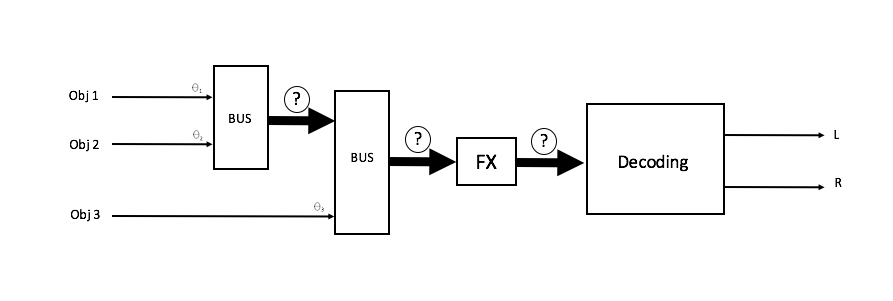

Now, wouldn’t it be nice to have the best of both worlds, rendering audio with decent spatial accuracy without the shortcomings of object-based audio? Stay tuned to learn how ambisonics may help you with this!

Figure 4 – Objects are submixed freely and panned onto a multichannel spatial audio representation, which conveys the directional intensity of all its constituents and can also be manipulated by adding, for example, Effects.
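As a preview of that idea, here is a sketch of one such multichannel representation, first-order ambisonics, assuming the AmbiX conventions (ACN channel order W, Y, Z, X with SN3D normalization). All function names are hypothetical. Each object is encoded with direction-dependent gains; after that, submixing is plain addition, and a broadband gain (such as a compressor's gain at a given instant, applied identically to all four channels) leaves the encoded direction intact.

```python
import math


def encode_foa(samples, azimuth_deg, elevation_deg=0.0):
    """First-order ambisonic encoding, ACN order (W, Y, Z, X), SN3D normalization."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    gains = (1.0,                          # W: omnidirectional
             math.sin(az) * math.cos(el),  # Y: left/right
             math.sin(el),                 # Z: up/down
             math.cos(az) * math.cos(el))  # X: front/back
    return [[g * s for s in samples] for g in gains]


def mix_foa(*fields):
    """Submixing ambisonic fields is channel-wise addition."""
    return [[sum(v) for v in zip(*chs)] for chs in zip(*fields)]


def apply_gain(field, gain):
    """A broadband gain applied identically to every channel."""
    return [[gain * s for s in ch] for ch in field]


def direction_deg(field, n=0):
    """Azimuth of the intensity-like vector (W*X, W*Y) at sample n."""
    w, y, _, x = (ch[n] for ch in field)
    return math.degrees(math.atan2(w * y, w * x))


single = encode_foa([1.0, 0.5], 30.0)
quieter = apply_gain(single, 0.25)
pair = mix_foa(encode_foa([1.0], 45.0), encode_foa([0.3], 45.0))
```

First order only carries coarse directional resolution, and for a mix of several sources `direction_deg` returns a single net direction rather than each constituent's; higher orders increase spatial resolution at the cost of more channels.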
