In the world of games, many characters are completely fictitious. Enormous dragons, gigantic soldiers, werewolves, zombies, and cyborgs each come with their own personality, battling the player or, at times, befriending them as the story progresses. A character's voice adds personality to the virtual being and gives it life, so it plays a critical role in the gaming experience. However, it isn't easy to create voices for characters that don't actually exist, and if the game is multilingual, the workload multiplies. KROTOS Dehumaniser Live is an innovative vocal effects technology that works as a runtime plug-in for Wwise. Its vocal processing functions produce high-quality, creative voices and make it possible, for example, to morph a werewolf's voice into a human voice in real time.

Krotos Ltd, one of Audiokinetic's community plug-in partners, offers advanced audio technology. KROTOS Dehumaniser Live comprises four components:

- Dehumaniser Simple Mode

- Dehumaniser Advanced Mode

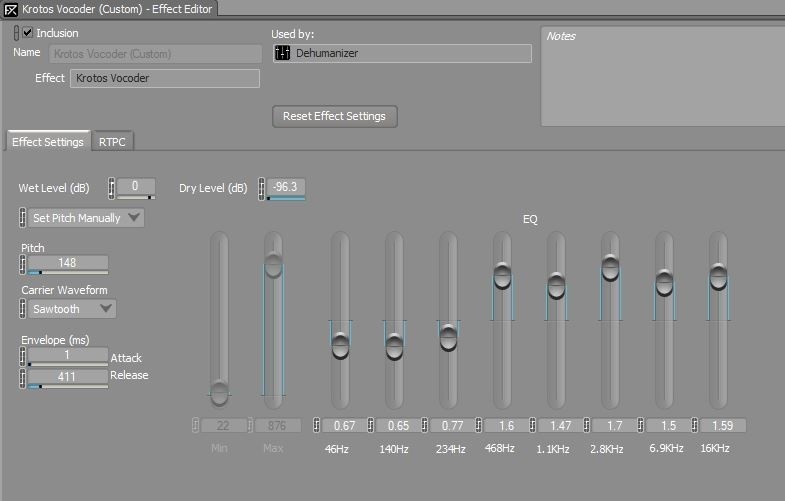

- Vocoder

- Mic Input

There are two modes in the main component, Dehumaniser Live:

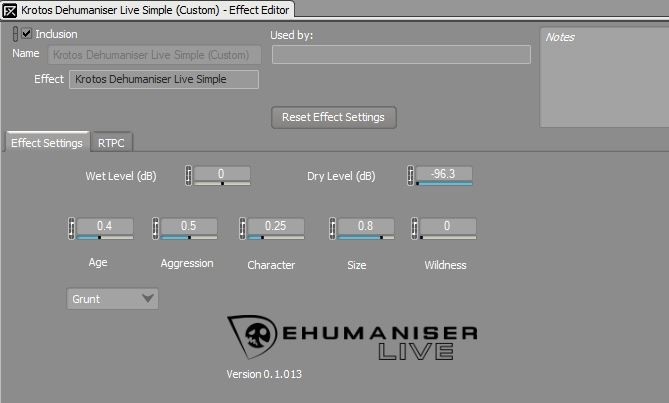

Dehumaniser Simple Mode

- Age

- Aggressiveness

- Size

- Character

- Wildness

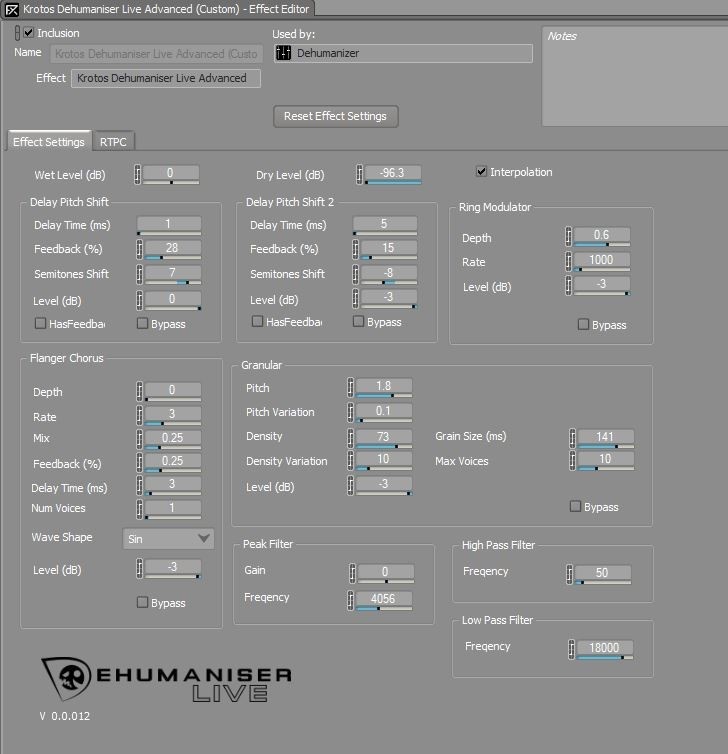

Dehumaniser Advanced Mode

- Granular

- Delay Pitch Shifting (x 2)

- Flanger/Chorus

- Ring Modulator

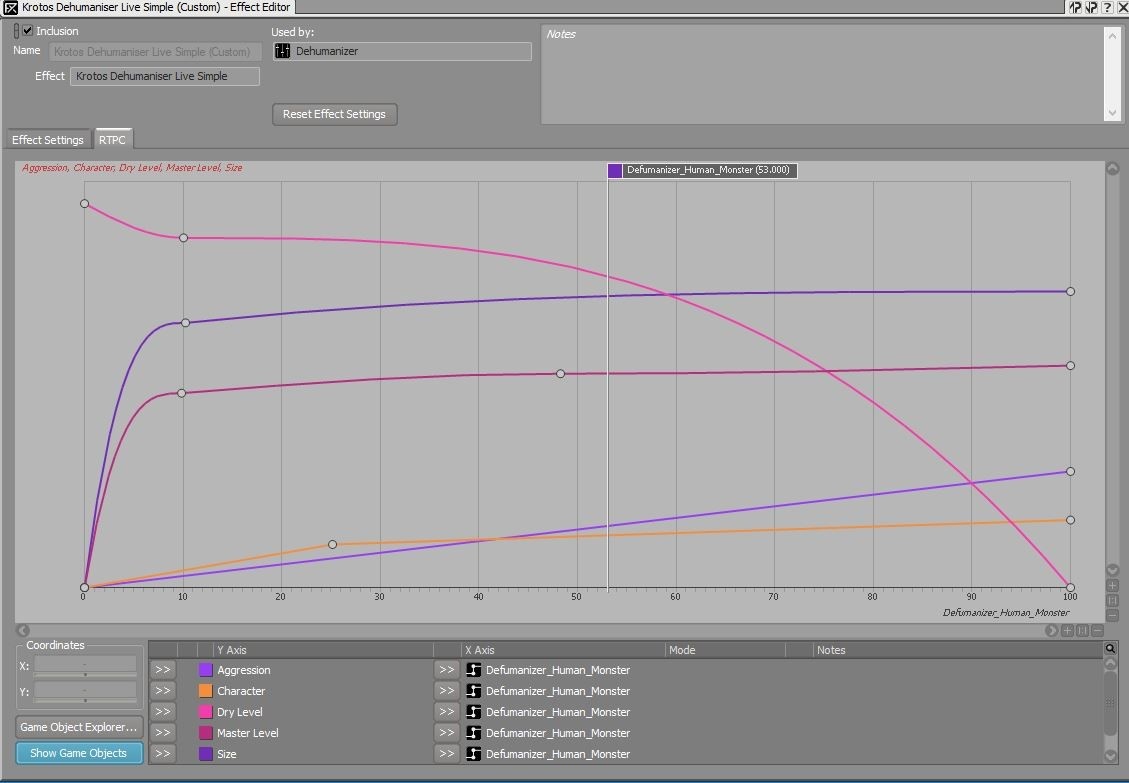

In Simple Mode, the possibilities are concentrated into five parameters, so morphing a voice becomes a simple operation, and CPU usage stays low. Advanced Mode, meanwhile, offers more than 20 parameters for you to tweak, at the cost of a potentially higher CPU load. The two modes produce very distinct effects, so you can decide which is best for the situation at hand. All parameters can be controlled in real time using RTPCs (Real-Time Parameter Controls). Configure an RTPC for the parameter that changes a human character into a monster, and the character can morph smoothly in-game, too. Because the processing happens at runtime, you don't need separate assets for the original voice and the processed voice, so you also save memory.
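As a rough illustration of the RTPC idea, the sketch below maps a game-side transformation progress (0.0 for human, 1.0 for monster) onto a parameter range and eases it so the morph starts and ends gently. The RTPC name `Monster_Morph`, the 0 to 100 range, and the `send_rtpc` callback are all hypothetical. In a real integration the value would be passed to the Wwise SDK (for example through `AK::SoundEngine::SetRTPCValue`), and the RTPC would be mapped to Dehumaniser Live parameters in the authoring tool.

```python
from typing import Callable, List, Tuple

# Hypothetical RTPC name and range; both would be defined in the Wwise project.
RTPC_NAME = "Monster_Morph"
RTPC_MIN, RTPC_MAX = 0.0, 100.0


def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve on [0, 1] so the morph starts and ends gently."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def morph_to_rtpc(progress: float) -> float:
    """Map transformation progress (0 = human, 1 = monster) to an RTPC value."""
    return RTPC_MIN + (RTPC_MAX - RTPC_MIN) * smoothstep(progress)


def run_morph(duration_s: float, frame_dt: float,
              send_rtpc: Callable[[str, float], None]) -> None:
    """Step through the morph once per frame, sending each value to the engine."""
    steps = max(1, int(round(duration_s / frame_dt)))
    for i in range(steps + 1):
        send_rtpc(RTPC_NAME, morph_to_rtpc(i / steps))


# Stand-in for the audio engine call: just record what would be sent.
sent: List[Tuple[str, float]] = []
run_morph(duration_s=2.0, frame_dt=0.5, send_rtpc=lambda n, v: sent.append((n, v)))
```

The easing curve is a design choice, not a requirement: a linear ramp would also work, and Wwise lets you shape the RTPC curve in the authoring tool instead of in game code.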

In Dehumaniser Simple Mode, the Character and Size parameters are crucial, and their effects are obvious. It is a good idea to use these two parameters first to establish the character's size and texture, and then adjust the other parameters to get the result you want.

In Dehumaniser Advanced Mode, the two Delay Pitch Shifting parameters are important. These set the base pitch, and you can configure the Granular parameter to add layers of creepy texture. You can then use the other effects included in the package to fine-tune the final voice.

Vocoder produces a mechanical voice, like a robot's. Pitch Tracker Mode tracks the input signal's pitch variation, so I recommend you try it out first. Set Pitch Mode gives you a typical robotic voice effect. You can use the Carrier parameter's ten waveforms and the 8-band EQ to enhance the texture of the robot voice.

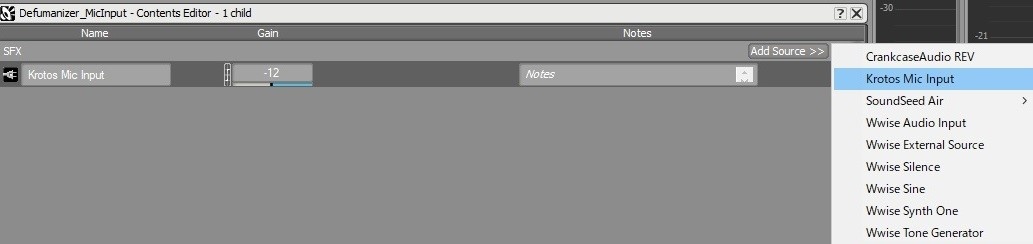

Finally, Mic Input is a component that connects the plug-in to your computer's mic input, allowing you to play back your own voice in Wwise in real time. That means you can use your voice to test the Dehumaniser Live functions. Of course, if you pair Dehumaniser Live with your game's voice chat system, it can act as a runtime component that processes the user's voice in real time.

Audiokinetic held a demonstration at CEDEC 2017 in Japan this past August, showcasing how Dehumaniser Live can be combined with the dialogue localization features in Wwise to create an innovative workflow. The demonstration attracted interest both at the Audiokinetic booth and in our sponsored lecture. The Audiokinetic sponsored session focused on how to use the Wwise dialogue workflow. We invited Adam Levenson and Matthew Collings from Krotos, the developer of Dehumaniser Live, as well as dialogue recording specialist Tom Hays from RocketSound, to talk about the new possibilities of dialogue production using Wwise. Masato Ushijima, Audiokinetic's in-house product expert, also introduced various dialogue functions in Wwise.

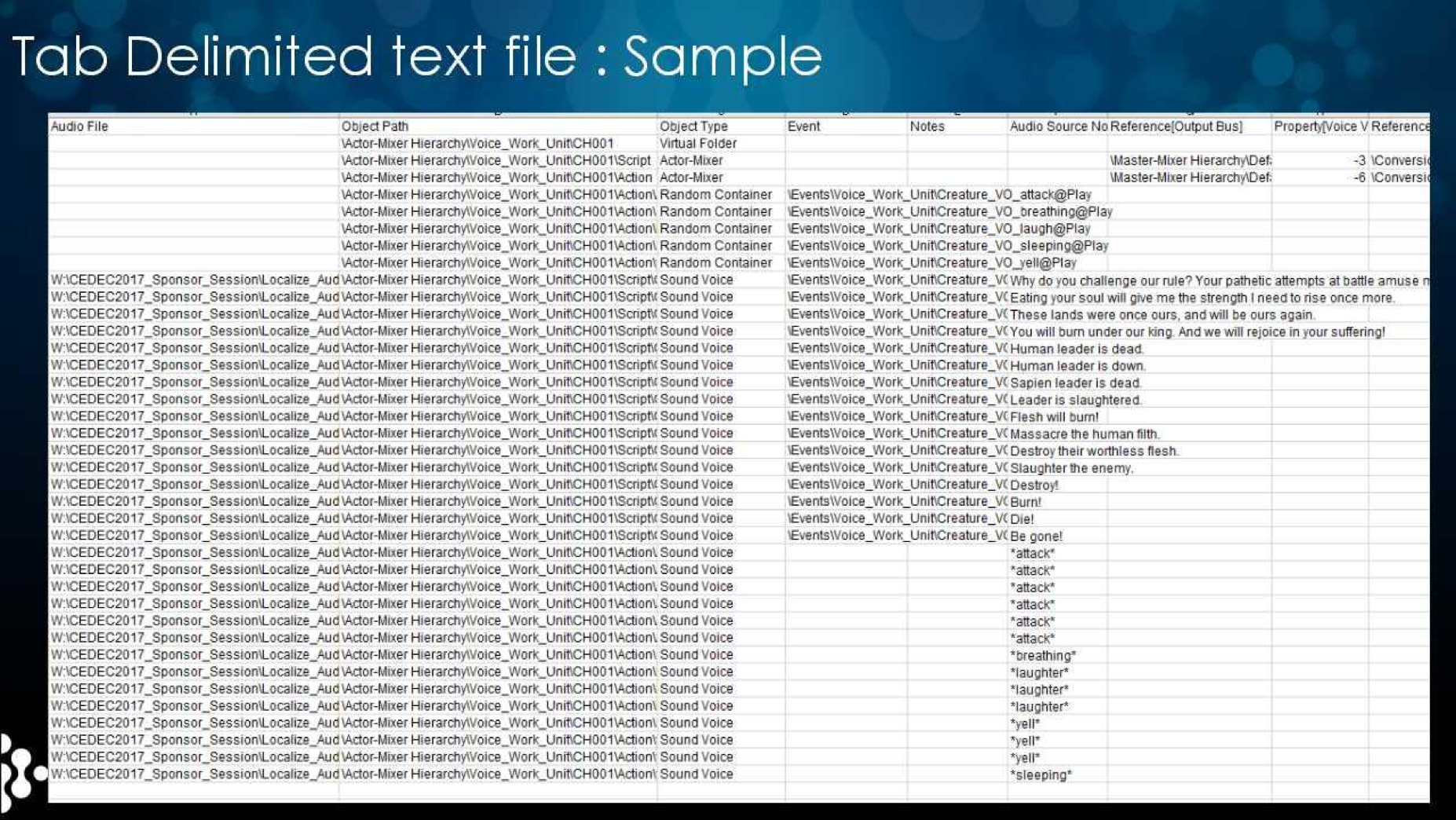

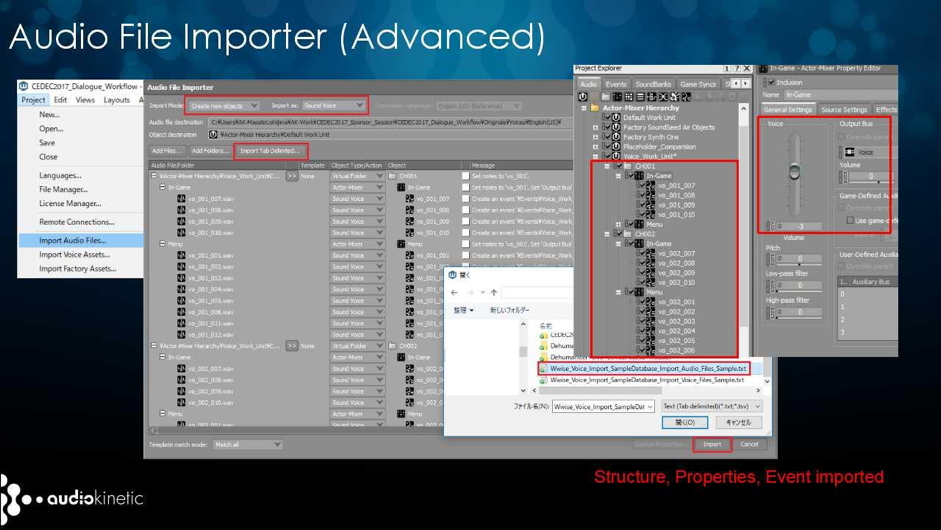

Dialogue is one of the areas of game audio development that has come to require a large amount of work in recent years. Reducing the number of steps involved in dialogue integration leaves you more time to be creative with effects and music. Many game developers rely on spreadsheets to manage dialogue, but Wwise allows you to import voice data directly from the database. You can perform batch imports that include Event and property settings, so integrating dialogue should be quick and reliable.
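To give a feel for what a batch import can look like, here is a minimal sketch that turns spreadsheet-style dialogue rows into a tab-delimited table with one line per voice file. The column headers, the object hierarchy, and the `Play_` Event naming are assumptions made for illustration; check the Wwise documentation for the exact import format your version expects.

```python
import csv
import io
from typing import Dict, List

# Hypothetical dialogue rows, as they might be exported from a spreadsheet.
lines: List[Dict[str, str]] = [
    {"id": "VO_Werewolf_001", "wav": "VO_Werewolf_001.wav", "character": "Werewolf"},
    {"id": "VO_Robot_001", "wav": "VO_Robot_001.wav", "character": "Robot"},
]

# Assumed column headers; verify them against the import format you actually use.
COLUMNS = ["Audio File", "Object Path", "Event"]


def build_import_table(rows: List[Dict[str, str]]) -> str:
    """Return tab-delimited text with a header row and one row per dialogue line."""
    out = io.StringIO()
    writer = csv.writer(out, delimiter="\t", lineterminator="\n")
    writer.writerow(COLUMNS)
    for row in rows:
        writer.writerow([
            row["wav"],
            # Hypothetical hierarchy: one folder per character.
            f"\\Actor-Mixer Hierarchy\\Dialogue\\{row['character']}\\{row['id']}",
            # Hypothetical naming convention for the Event that plays the line.
            f"Play_{row['id']}",
        ])
    return out.getvalue()


table = build_import_table(lines)
```

Generating the table from the same source that drives the script keeps filenames, objects, and Events in step, which is where manual spreadsheet workflows tend to drift.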

After you've integrated your dialogue assets into Wwise, it's time for Dehumaniser Live. Matthew Collings presented the basic features of Dehumaniser Live and demonstrated some presets. He gave us monster voices and robot voices, and just watching him play with a string of presets gave us no end of ideas. In Wwise, you can track performance, tweak parameters, and even link them to RTPCs to enable interactivity at runtime. Dehumaniser Live works as a plug-in to Wwise, so there is no need to go back to the DAW, process the recorded voice data with effects, and export the sound again.

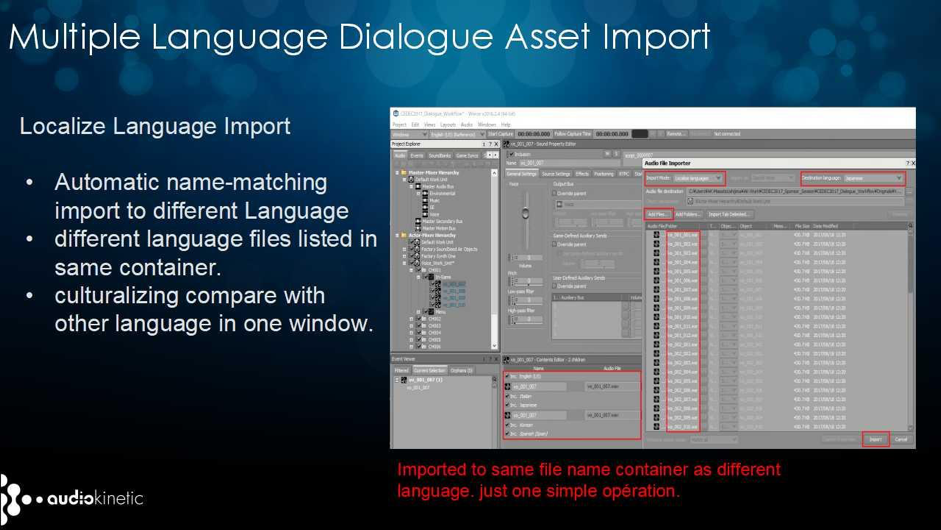

Wwise supports multilingual games. If you import voice data as Sound Voice objects, you can switch them according to the Language setting. If you import the same dialogue line in different languages and use an identical filename for them all, Wwise will automatically match the filenames and store the files in the same object. As long as the filenames match, all you need to do is drag and drop them into Wwise for implementation, which leaves no room for manual errors. If you create a monster voice with Dehumaniser Live and then switch to another language, the exact same settings carry over to that language. You no longer need to take the extra step of going through each language and repeating the effect settings.
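The filename-matching idea can be sketched outside Wwise as a quick pre-import check. The example below groups localized recordings by filename, so each name corresponds to one voice object with one source per language, and reports any language that is missing a recording. The folder layout and file names are hypothetical, and this only mimics the concept; it is not how Wwise implements the matching internally.

```python
from collections import defaultdict
from pathlib import PurePosixPath
from typing import Dict, List

# Hypothetical localized recordings: one folder per language, identical filenames.
files: List[str] = [
    "English/VO_Werewolf_001.wav",
    "Japanese/VO_Werewolf_001.wav",
    "French/VO_Werewolf_001.wav",
    "English/VO_Robot_001.wav",
    "Japanese/VO_Robot_001.wav",
]


def group_by_filename(paths: List[str]) -> Dict[str, Dict[str, str]]:
    """Group files so each filename becomes one object with per-language sources."""
    objects: Dict[str, Dict[str, str]] = defaultdict(dict)
    for p in paths:
        path = PurePosixPath(p)
        language = path.parent.name  # the folder name stands in for the language
        objects[path.stem][language] = p
    return dict(objects)


def missing_languages(objects: Dict[str, Dict[str, str]],
                      expected: List[str]) -> Dict[str, List[str]]:
    """List the expected languages that have no recording, per object."""
    return {name: [lang for lang in expected if lang not in sources]
            for name, sources in objects.items()
            if any(lang not in sources for lang in expected)}


voice_objects = group_by_filename(files)
gaps = missing_languages(voice_objects, ["English", "Japanese", "French"])
```

Running a check like this before the drag and drop catches misnamed or missing files early, while the effect settings stay attached to the object rather than to any single language.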

Tom Hays talked about some of the issues associated with the conventional approach to dialogue production, from an outsourcing provider's point of view. The main point he raised was that he may have absolutely no idea how the voice files will be used in-game after recording. With Wwise, he can use Work Units and take advantage of version control systems like Perforce to deliver data ready for implementation, so, as an outsource vendor, he is able to control the quality of what he delivers. If the developer provides him with a build of the game, he can play back the dialogue in-game and test the results. He stressed that the Wwise dialogue features allow him to significantly improve both efficiency and quality.

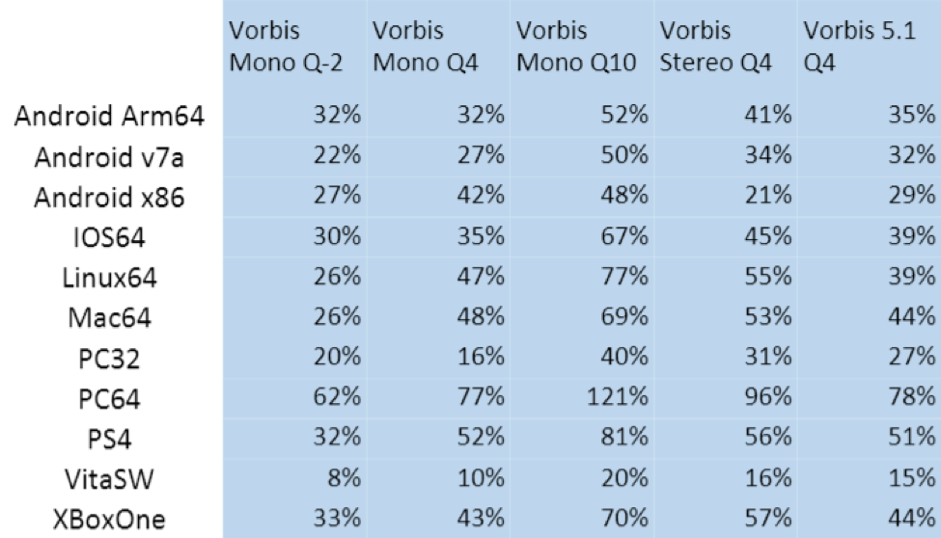

The final part of the presentation focused on Vorbis-related improvements in Wwise. Even social networking games can now carry up to 10,000 voice files, and while game consoles perform drastically better than before, it is still important to keep sound data as small as possible. Audiokinetic has made proprietary enhancements to Wwise Vorbis. Depending on the waveform data and settings, the improvement is more than 20% compared with previous versions of Wwise, which were already optimized. The dialogue workflow has been improved, and performance can be maximized.

Comments

Félix Tiévant

November 23, 2017 at 03:38 am

Really impressive! Can this plugin be used at bus level? Also, small typo on the advanced tab: FreqUency!

Joshua Hank

January 20, 2025 at 12:00 pm

Just found this now - I sadly guess this cooperation wasn't continued?