- Part 1. Scaling Ambition

- Part 2. The Crowd Soundbox System

- Part 3. Additional Layers

PLANET COASTER - CROWD AUDIO : PART 2

The crowd soundbox system

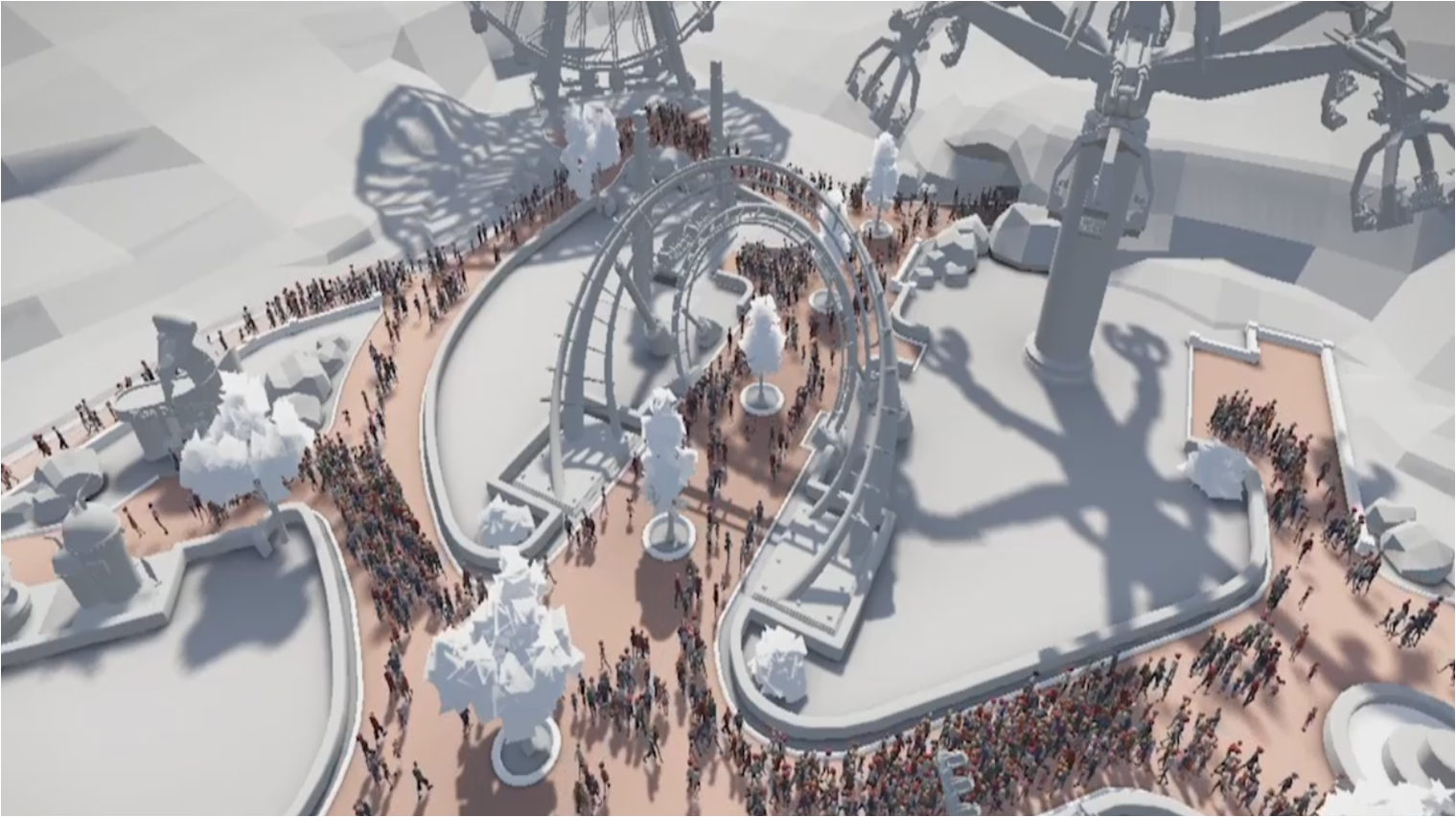

During pre-production, it became clear that using an ‘emitter per guest’ solution is not effective when crowds are thousands of guests strong. Such a system would cause the amount of CPU work to keep growing with every additional guest that wandered into your park.

Gameplay and simulation code managing crowds faces similar problems. Our programmers turned to a fluid dynamics simulation rather than path-finding to increase the number of fully-simulated guests on screen at any given time. If you would like to know more, our Principal Programmer, Owen Mc Carthy, has written an article for Gamasutra on the topic.

During pre-production, Frontier’s audio programmers worked closely with Owen to align our work with his. This allowed audio to decouple the ‘amount of CPU work’ from the ‘amount of guests’. Rather than placing a single emitter on each member of the crowd and filtering through the list to see where they are, audio code performs this step using data Owen had made available through a park-wide crowd grid.

The crowd simulation grid is a fixed size and subdivided into cells. The time to scan through all the cells and find where guests are is also fixed. Because both values are fixed, the workload can be distributed over multiple frames, so the amount of work this system does is predictable, at the worthwhile cost of slightly increased latency.

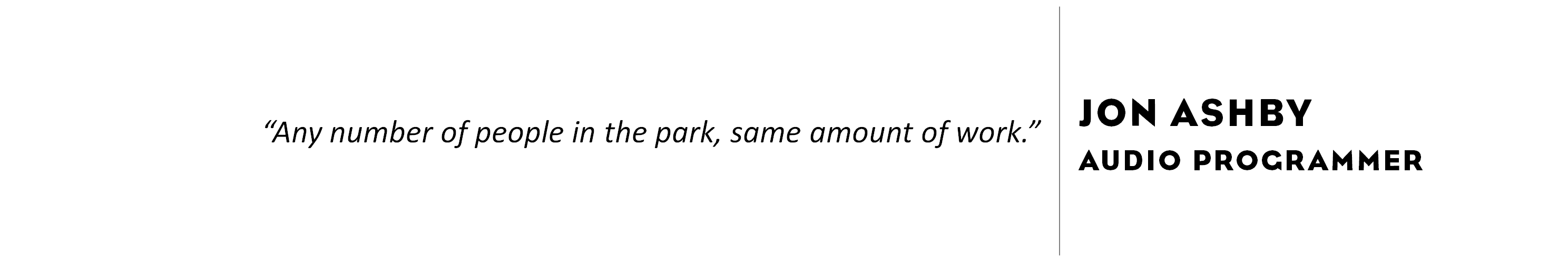

For audio content, we ran various experiments using different crowd recordings and densities. Our goal was to recreate a crowd made from grain-like elements rather than using fixed loops. Audio designer Michael Maidment ran a lot of tests with coders Dan Murray and Jon Ashby, and from their experiments we decided that a crowd (around the camera) can be expressed by four emitters placed in the four directions of the compass. A fifth emitter is added to describe the crowd in the entire park.

Furthermore, the best performance-to-quality ratio for us was in using three audio assets for crowd size (small, medium, and large) divided into different combinations of crowd diversities (adult male/female, teenage male/female, child). We would have liked to go even more small-grain (individuals, groups of two or four) but found that to be a path of diminishing returns.

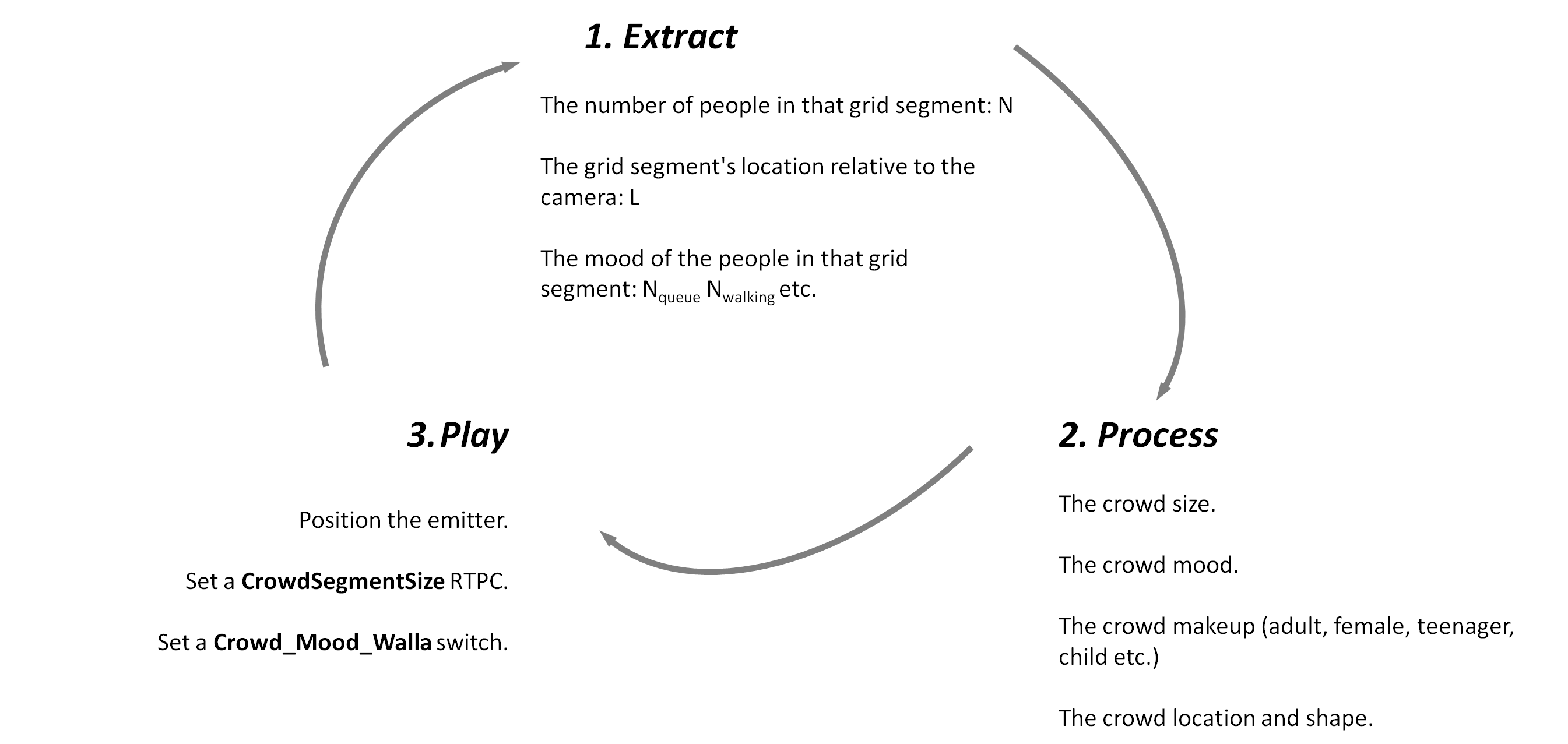

The Soundbox brings all of the above together and ties audio content to the emitters. It is a system that uses data to make an informed decision regarding where to put emitters and what to play on them at any given moment.

A Soundbox’s cycle starts by collecting data which it analyzes for relevant information such as crowd density, size, diversity, location, and behavior. Once the Soundbox has an understanding of what the clusters of crowds spread around in a park ‘look’ like, it can create and place emitters in approximate ‘correct’ locations around the camera.

The Soundbox creates sounds from data rather than in the traditional way of deriving audio content from pre-placed emitters on game objects. This means work can be distributed across frames, emitters can be pooled, and the pitfalls of scaling are avoided.

Extracting data from the crowd simulation grid. The crowd simulation grid (yellow squares) contains information about guest locations. Audio code extracts guest density, which the debug render displays as white pies. The emitters (‘Close 1’ to ‘Close 4’) are positioned where the averaged densities of crowds are. ‘Far 1’ is a background layer which takes the entire grid into account, not just guests around the camera.

The Crowd Soundbox constantly iterates through three stages, distributing the workload over multiple frames. The more detailed the extraction and processing, the longer it takes to go through a cycle, though with a much more precise-sounding result. The speed of the user-controlled camera influenced our settings when finding the right balance between quality and update speed. The loop updates emitters every frame while it extracts and processes 1/30 of the crowd grid in the same time frame. A full refresh takes one second at 30 fps.
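The time-slicing described above can be sketched as follows. This is a minimal illustration, not Frontier's implementation: the class `SlicedGridScanner`, its field names, and the flattened list of per-cell guest counts are all assumptions made for the example. It processes one thirtieth of the grid per call and only publishes a result once a full pass has completed.

```python
from dataclasses import dataclass


@dataclass
class SlicedGridScanner:
    """Hypothetical helper: scans a fixed-size grid one slice per frame."""

    cell_counts: list[int]       # guests per cell, grid flattened to a list (assumed layout)
    slices_per_cycle: int = 30   # 1/30 of the grid per frame, as in the article
    latest_total: int = 0        # guest total from the last completed cycle
    _cursor: int = 0             # index of the next cell to scan
    _partial_total: int = 0      # running total for the cycle in progress

    def update(self) -> bool:
        """Process one slice. Returns True when a full cycle has just completed."""
        n = len(self.cell_counts)
        slice_len = -(-n // self.slices_per_cycle)  # ceiling division
        end = min(self._cursor + slice_len, n)
        self._partial_total += sum(self.cell_counts[self._cursor:end])
        self._cursor = end
        if self._cursor >= n:
            # Publish the finished result and start the next cycle.
            self.latest_total = self._partial_total
            self._cursor = 0
            self._partial_total = 0
            return True
        return False
```

The per-frame cost depends only on the grid size and the slice count, never on the number of guests, which is the property the Soundbox relies on.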

The Soundbox solves a few interesting problems in audio:

- It provides a framework for deriving context from available data.

- It applies rule-sets through a single interface and can dynamically change these where needed.

- Audio code no longer starts sounds based on triggers in the game code (which might have to be filtered afterwards).

- It manages the amount of work in a more predictable way, regardless of the size of the crowd.

USING DATA TO INFORM A VIRTUAL SOUNDSCAPE

Crowd Soundbox: Mood and size

One of the goals for gameplay and animation was to create a readable crowd that players could use to gauge the success of their park, so naturally the audio team wanted to express that behavior. When the Soundbox positions emitters (and understands where the crowds are, how large they are, and what their make-up is) it also needs to know how they are behaving. We call these behaviors ‘moods’.

When we first started thinking about moods, we approached it in a granular way. We were thinking of nausea, boredom, excitement, congestion, queuing, and many more possibilities. As is often the case when adding audio to visuals, this can have adverse effects. A bored (grumbling) crowd quickly becomes an annoyance, and experiments confirmed this.

We decided against mood audio and made the distinction that a happy crowd would be lively and any type of problem in the park would be expressed as a tendency for the crowd to be quiet to prompt player investigation. Congestion and queuing are standard expectations in coaster parks, and we found upon visiting parks for research that people tend to be louder in queues, often due to the close proximity of loud rides.

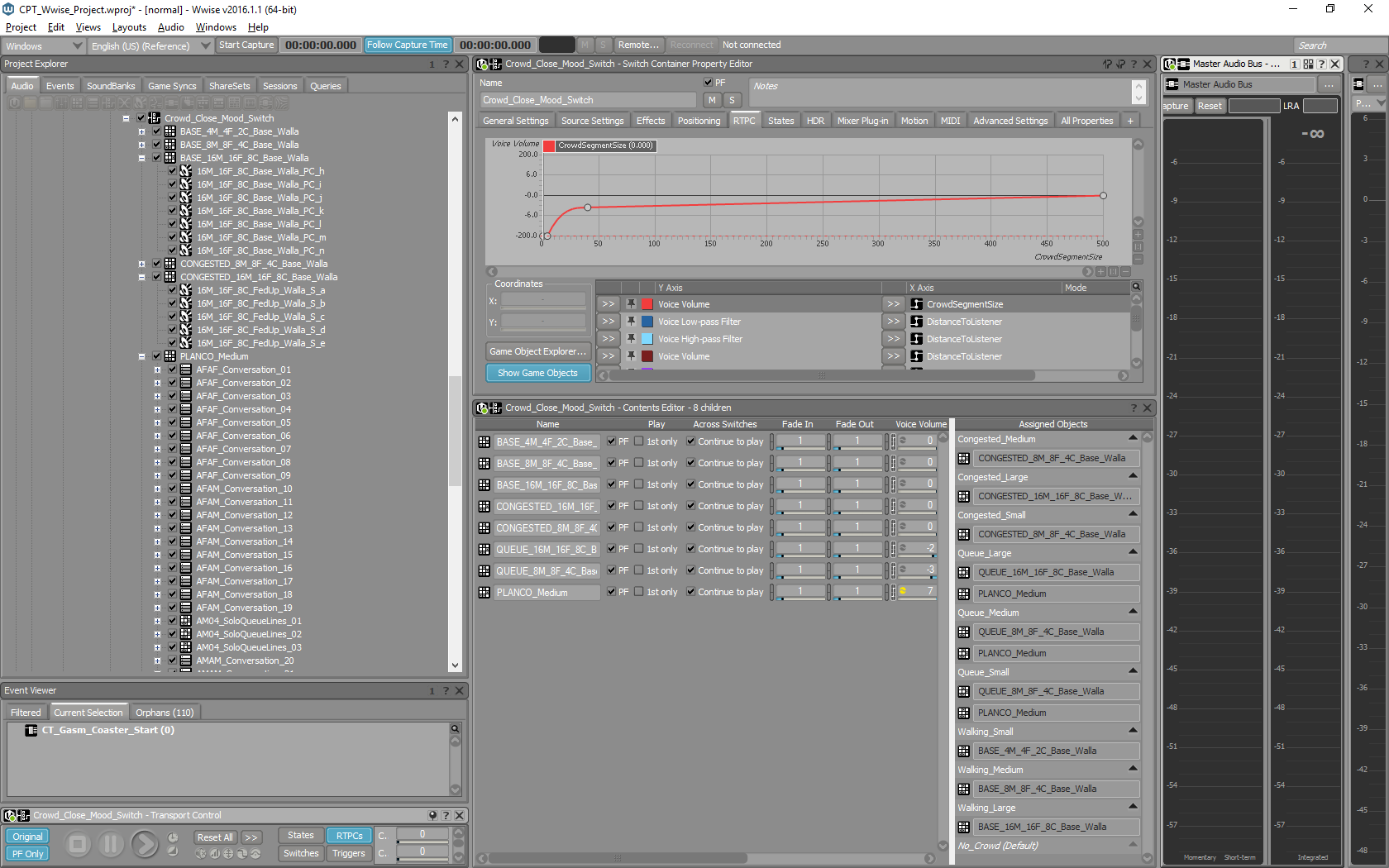

There was another reason not to simulate moods directly in audio. We seldom see values over 60 percent of any specific mood type in a grid cell (in other words, a group of guests doesn’t necessarily all share the same mood state), so we often couldn’t make out a single defining mood. We could have either created lots of different assets or used Blend Containers, but decided both were expensive solutions without a decent return. Instead we used thresholds so that if 40 percent of people are queuing, we switch the audio on that emitter to ‘queue’.
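A threshold rule of this kind might look like the sketch below. The function name `pick_mood_switch`, the `"default"` fallback label, and the choice to test only the most common mood are assumptions for illustration. The article states only the 40 percent threshold and the ‘queue’ example.

```python
def pick_mood_switch(
    mood_counts: dict[str, int],
    threshold: float = 0.4,      # 40 percent, as in the article
    default: str = "default",    # hypothetical fallback switch name
) -> str:
    """Return the mood to switch an emitter to, or the fallback if none dominates."""
    total = sum(mood_counts.values())
    if total == 0:
        return default
    # Only the most common mood can win; it must still clear the threshold.
    mood, count = max(mood_counts.items(), key=lambda item: item[1])
    return mood if count / total >= threshold else default
```

For example, `pick_mood_switch({"queue": 45, "happy": 30, "bored": 25})` selects `"queue"`, while an even three-way split falls back to the default.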

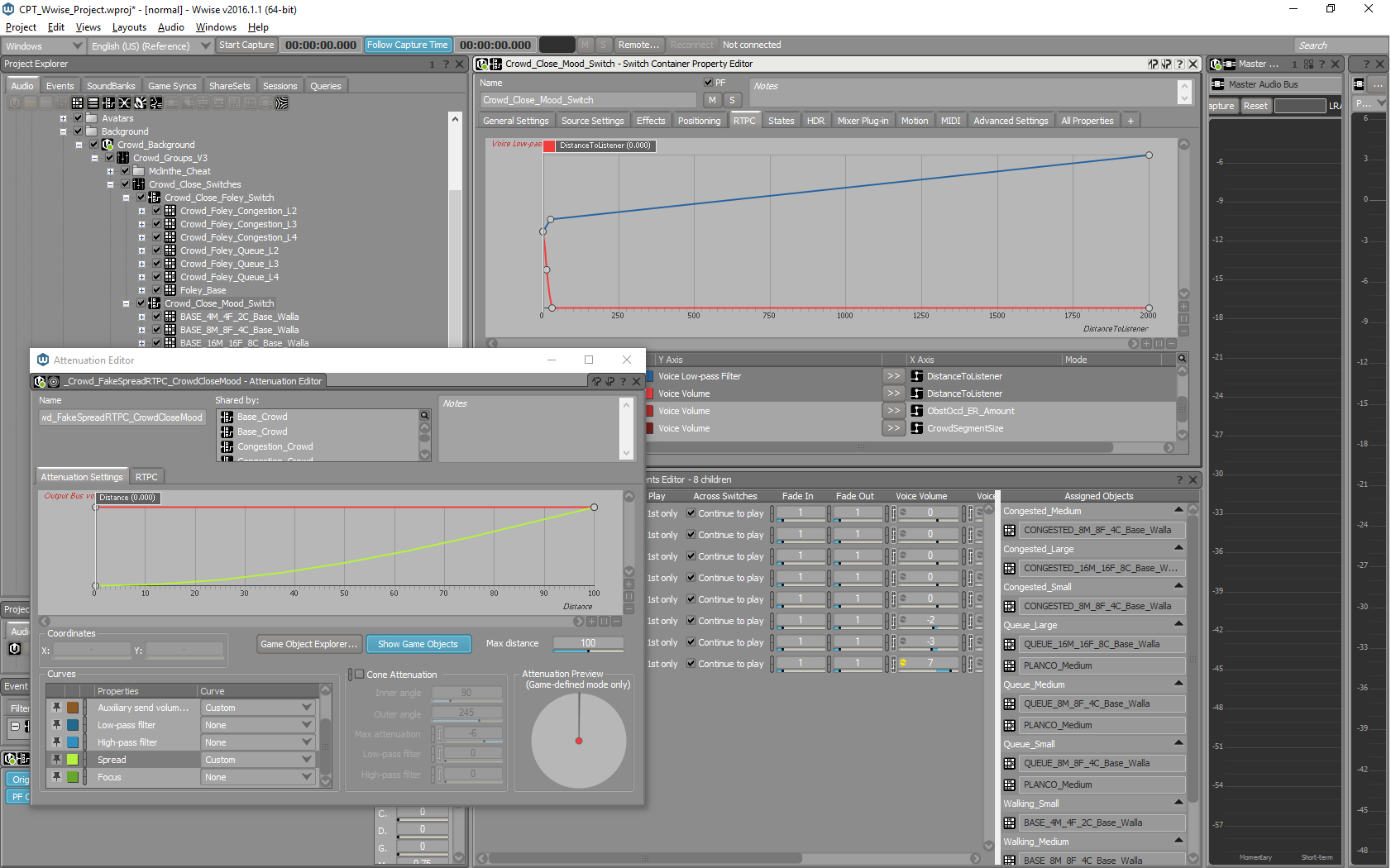

Crowd mood Switch set-up in Wwise. Rather than using Blend Containers for crowd sizes, we used the smallest required number of size variations for each mood to balance quality vs. cost. Planco (a Planet Coaster language created by senior audio designer James Stant) adds variations to the crowd loops. The same conversations are reused for the more detailed conversations heard when the camera is close to guests.

Crowd Soundbox: Positioning emitters

After we had established that we can describe a crowd around the camera accurately enough by using just four emitters with a fifth emitter describing the total crowd, we worked on placing the emitters in the right positions.

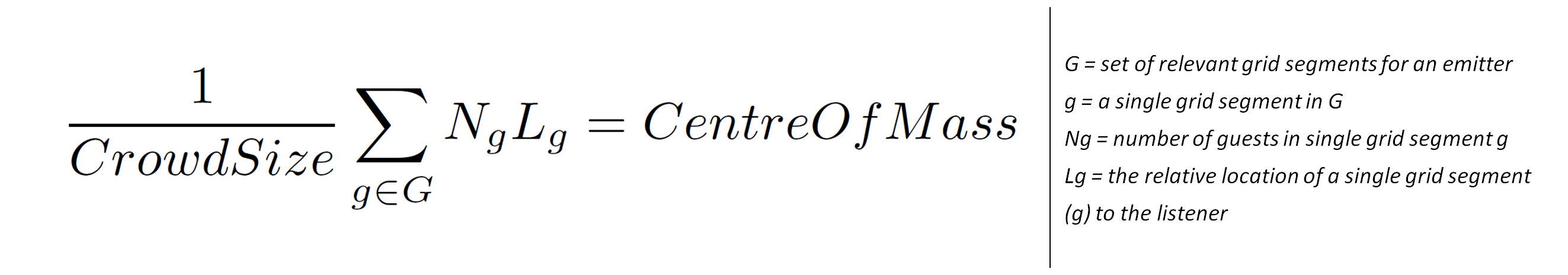

To place the emitters in the right position, the Soundbox calculates the center of mass for the crowd size with the cardinal points. The formula for doing so works like this:

Using the resulting data, emitters are placed in the right location. When guests are found to be clumped to the left of the camera, the emitter is positioned to the left. If they are evenly balanced around the camera, the emitter will be directly on top.
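As a rough illustration of the behavior described above, a density-weighted center of mass can be computed as the weighted mean of cell positions. This is the standard centroid formula, not necessarily the exact one the Soundbox uses. The function name `center_of_mass` and the `(x, y, guest_count)` cell tuples are assumptions made for the example.

```python
from typing import Optional, Sequence


def center_of_mass(
    cells: Sequence[tuple[float, float, int]],
) -> Optional[tuple[float, float]]:
    """Weighted mean position of (x, y, guest_count) cells; None if there are no guests."""
    total = sum(count for _, _, count in cells)
    if total == 0:
        return None
    cx = sum(x * count for x, _, count in cells) / total
    cy = sum(y * count for _, y, count in cells) / total
    return (cx, cy)
```

With the camera at the origin, guests clumped at negative x pull the result to the left, and a crowd balanced evenly on both sides yields a position on top of the camera.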

Crowd Soundbox: Calculating Spread

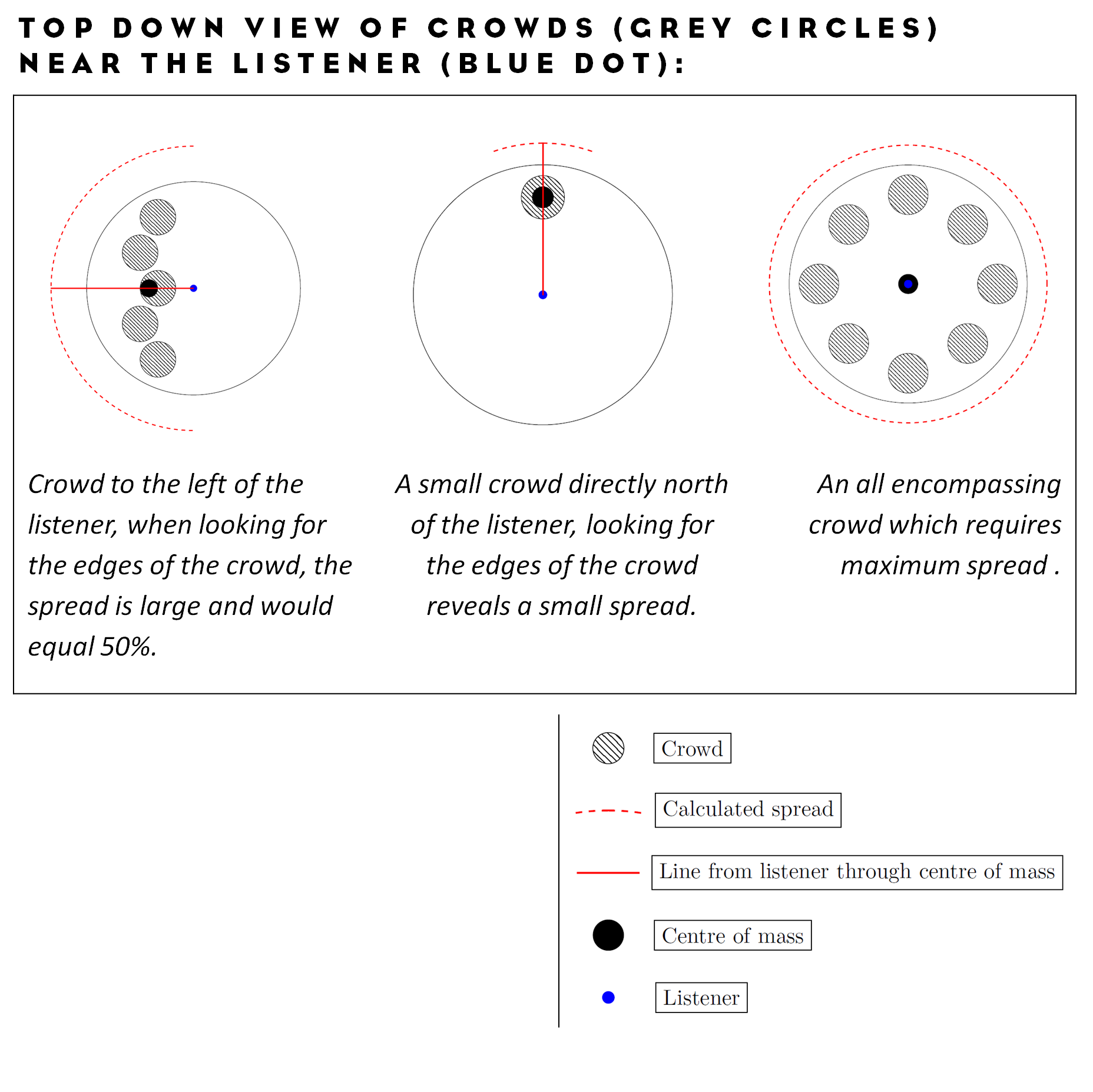

Positioning crowd emitters on a center of mass – rather than where the entire crowd is – inevitably leads to edge cases. For example, the center of mass can be to the left of the listener while the crowd extends around the listener to the right. Fortunately, Wwise has a perfect way to deal with this by using Spread. Unfortunately, Spread is fixed in an attenuation curve, so to butcher an old saying: ‘if we can’t change Spread, maybe we can move the mountain’. Enter the Soundbox, which controls where emitters are placed!

To obtain Spread, code first looks from the camera towards the center of mass and then searches outwards for the outermost left and right edges of the crowd. Once the arc it sweeps contains 90 percent of the crowd, that arc represents how ‘dispersed’ the crowd is and is translated into a suitable Spread value:

Top down view of crowds (grey circles) near the listener (blue dot):
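The arc search described above could be sketched like this. It is one possible reading rather than the shipped algorithm: cells are visited in order of angular offset from the center-of-mass direction until 90 percent of the guests are covered. The function names, the cell tuples, and the linear mapping from arc width to a 0 to 100 Spread percentage are all assumptions.

```python
import math


def crowd_arc_degrees(listener, center, cells, coverage=0.9):
    """Width in degrees of the arc around the listener->center axis that holds
    `coverage` of the guests. `cells` is a list of (x, y, guest_count)."""
    lx, ly = listener
    axis = math.atan2(center[1] - ly, center[0] - lx)
    offsets = []
    for x, y, count in cells:
        if count <= 0:
            continue
        angle = math.atan2(y - ly, x - lx) - axis
        angle = (angle + math.pi) % (2 * math.pi) - math.pi  # wrap to [-pi, pi)
        offsets.append((angle, count))
    total = sum(count for _, count in offsets)
    if total == 0:
        return 0.0
    offsets.sort(key=lambda item: abs(item[0]))  # nearest to the axis first
    ccw = cw = 0.0   # widest offsets found on each side of the axis
    covered = 0
    for angle, count in offsets:
        if angle >= 0:
            ccw = max(ccw, angle)
        else:
            cw = max(cw, -angle)
        covered += count
        if covered >= coverage * total:
            break
    return math.degrees(ccw + cw)


def arc_to_spread(arc_degrees):
    """Assumed linear mapping: a full 360-degree arc gives 100 percent Spread."""
    return min(100.0, arc_degrees / 360.0 * 100.0)
```

A crowd gathered in a single spot produces an arc of zero, while a crowd wrapping around the listener pushes the value towards 100.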

The Soundbox controls positioning (emitters in the Soundbox are not tied to ‘physical’ world objects after all) and we can manipulate where in the world they appear. We use that functionality to position emitters closer to or further away from the camera and simulate distance purely to obtain a Spread value from the attenuation curve. The attenuation curve doesn’t contain any actual volume or distance attenuation as it only informs Spread.

Dynamic spread in Wwise. Repurpose the attenuation curve and manipulate the emitter position to create dynamic spread. An RTPC controls the actual attenuation.

As the emitter is moved further away from the camera, we still use the center of mass as an axis, so it is always in the correct 3D direction. Directionality is unaffected by our tweaking, but to get correct distance filtering we also send a corrected ‘distance to listener’ RTPC which contains the ‘actual’ distance of the crowd.
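The repositioning trick can be illustrated with a small sketch. It assumes a hypothetical linear Spread curve that gives 100 percent at distance 0 and 0 percent at `max_distance`. The real curve shape is authored in Wwise and is not given in the article. The function name and return values are also assumptions, and passing the results to the audio engine is deliberately left out.

```python
import math


def place_emitter_for_spread(camera, center, spread_percent, max_distance=100.0):
    """Return (emitter_position, actual_distance).

    The emitter stays on the camera->center axis but sits at the distance where
    the assumed linear curve yields `spread_percent`. `actual_distance` is the
    true crowd distance, intended for a separate 'distance to listener' RTPC.
    """
    dx = center[0] - camera[0]
    dy = center[1] - camera[1]
    actual_distance = math.hypot(dx, dy)
    if actual_distance == 0.0:
        # Crowd centered on the camera: no direction to preserve.
        return camera, 0.0
    # Invert the assumed curve: spread = 100 * (1 - distance / max_distance).
    virtual_distance = (1.0 - spread_percent / 100.0) * max_distance
    ux, uy = dx / actual_distance, dy / actual_distance
    position = (camera[0] + ux * virtual_distance, camera[1] + uy * virtual_distance)
    return position, actual_distance
```

Because the emitter only slides along the axis through the center of mass, its direction from the camera is unchanged. Only the distance seen by the attenuation curve differs from the real one.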

In the next blog we’ll look at what additional background/foreground layers add to the core Soundbox system.
