Who am I?

I am an artist and a creative programmer working primarily on contemporary art projects. As a programmer, I work with various clients to produce artworks which would broadly fall into the category of generative art.

As an artist, I am one half of the artist duo Xenoangel alongside Marija Avramovic.

Xenoangel

Marija and I have a varied practice, ranging from painting and sculpture to real-time animation. Our animations are simulated worlds created in a video game engine (we’re using Unity). The worlds we create are home to NPCs (Spirits, Scavengers, Critters, etc.) who interact with each other and with their world in novel ways in order to tell a story.

We are interested in World Building and fascinated by ideas of non-human intelligences, as well as by the blurry concept of Slow Thinking. By this I mean thinking at the speed of a forest, a mountain, a mycelial network of fungi, a weather system or a world.

Over recent years, we have made a series of these real-time animation artworks, each one trying to tell a specific story:

Sunshowers is a dream of animism in which all objects of the virtual world use the same ludic AI system to interact with each other. It is based on the fox's wedding scene of Kurosawa's film Dreams. It was a commission for the Barbican's exhibition, "AI: More Than Human".

The Zone is an online experimental essay exploring artifacts and phenomena from Roadside Picnic (Strugatsky brothers). It features a text contributed by writer and curator Amy Jones.

https://scavenger.zone/

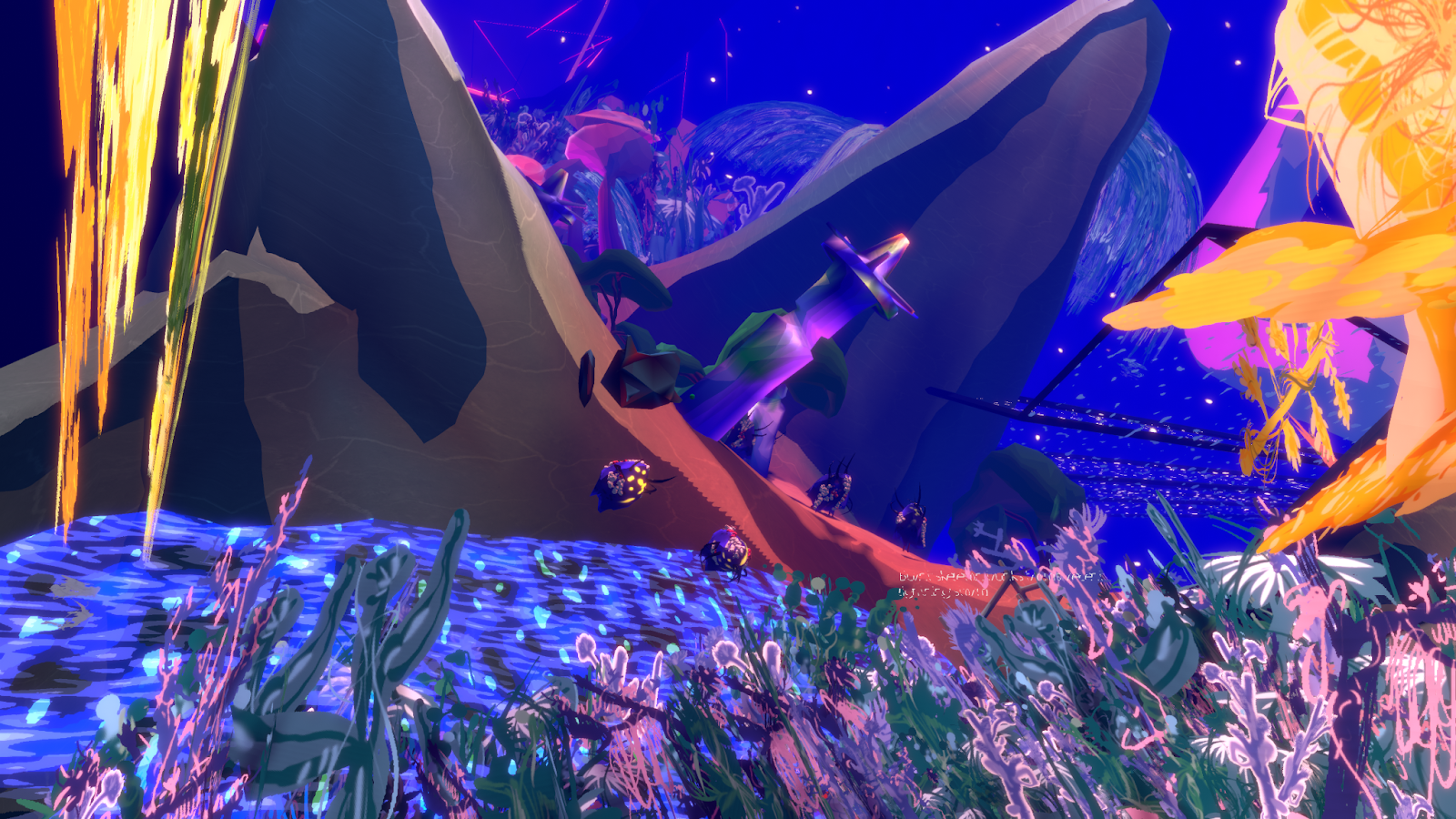

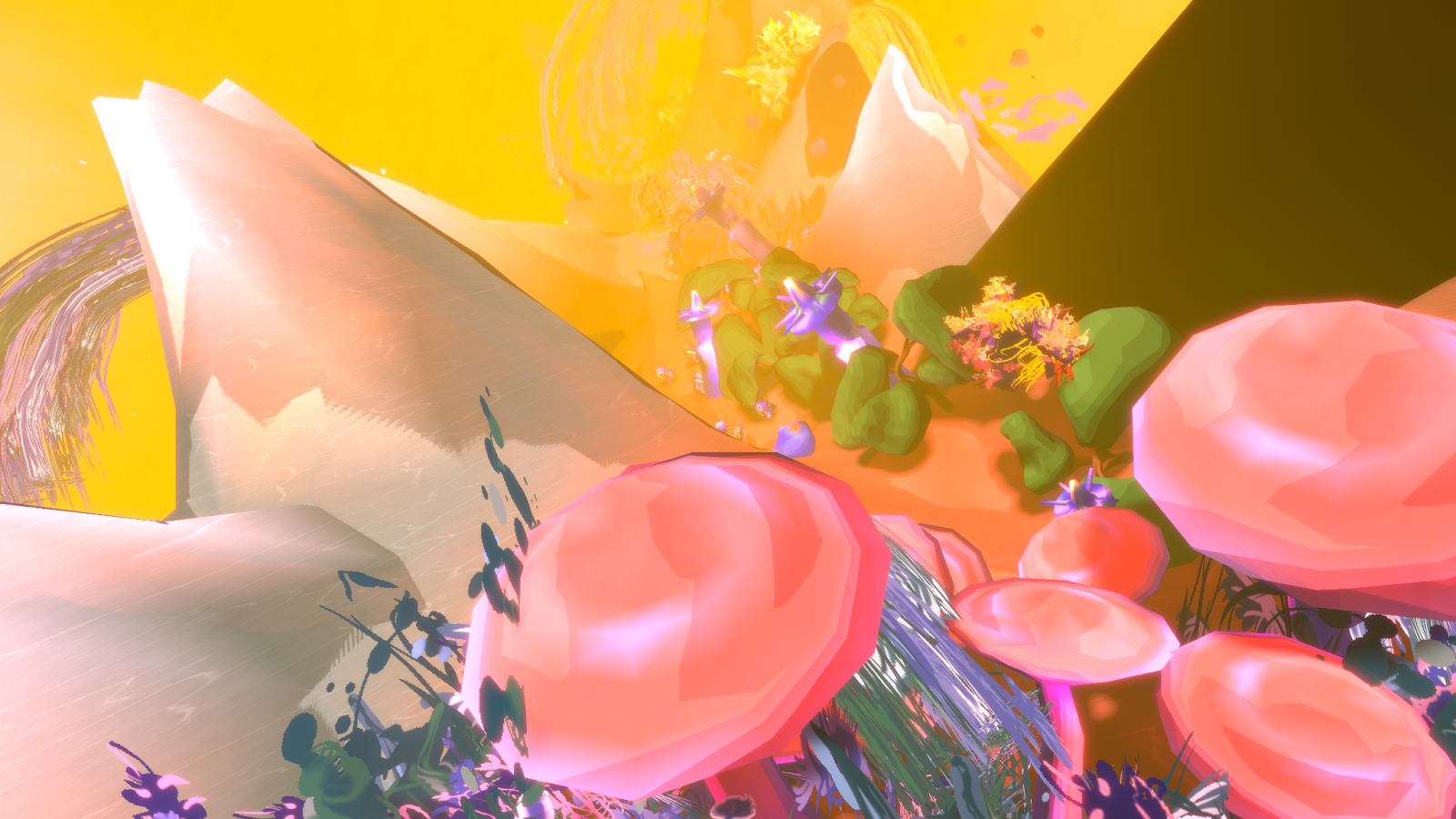

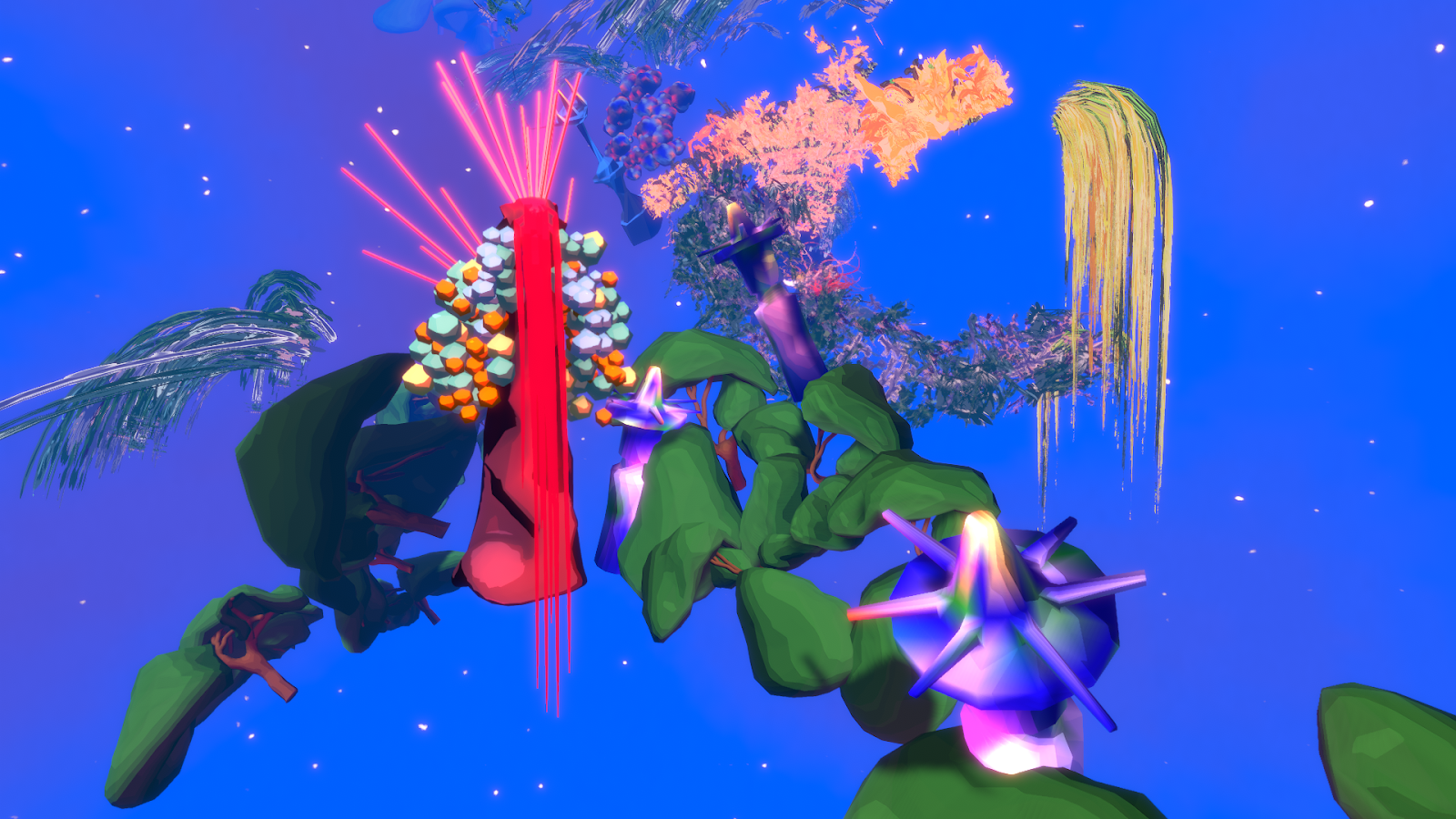

And most recently, Supreme is a symbiotic world on the back of a mythic beast - a slow-thinking ecosystem where archaic non-human Critters find stories in vibrant objects to help them uncover a pre-history. Supreme is also host-being for tentacular writings by several different authors and artists who have created texts from a shared starting point: Symbiosis and Co-existence.

For this post, I wanted to share some of my experiences working as a contemporary artist in the medium of real-time animation. I will talk about using tools originally conceived for video games to create artworks, with a focus on sound and on how we have used Wwise as the audio engine for our works. I think the best way to do this is to focus on our most recent real-time animation piece…

Supreme | A Case Study

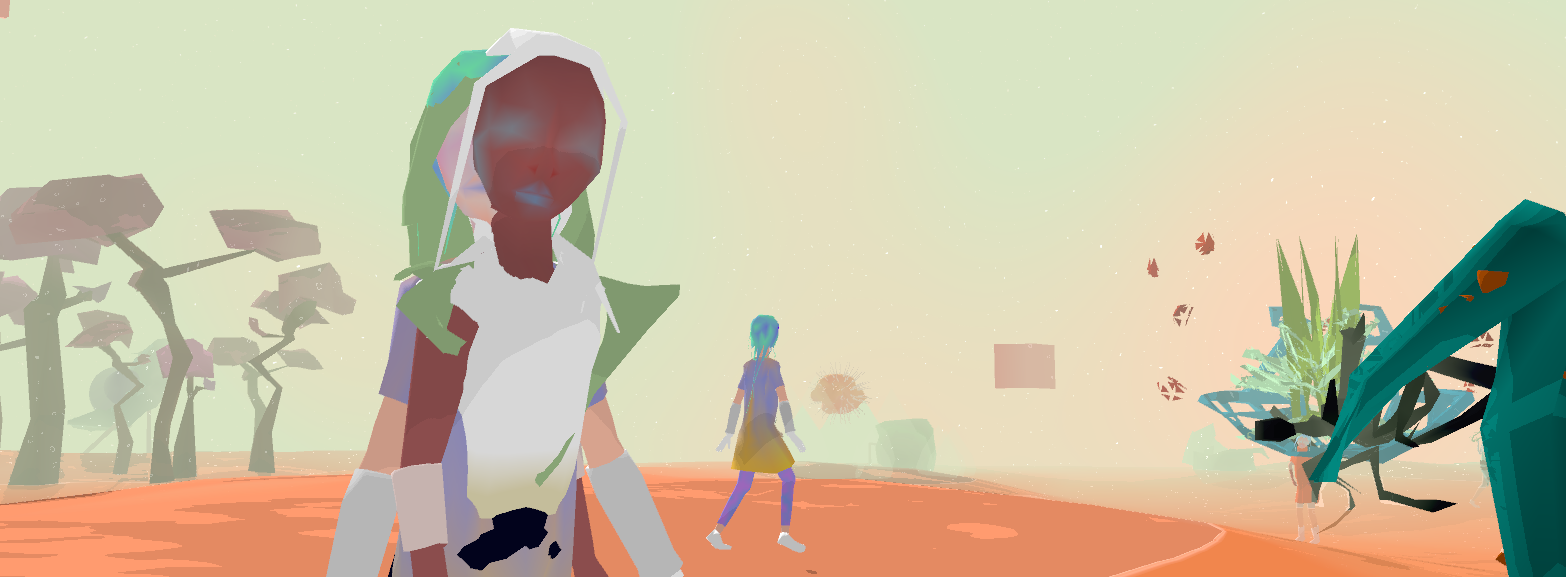

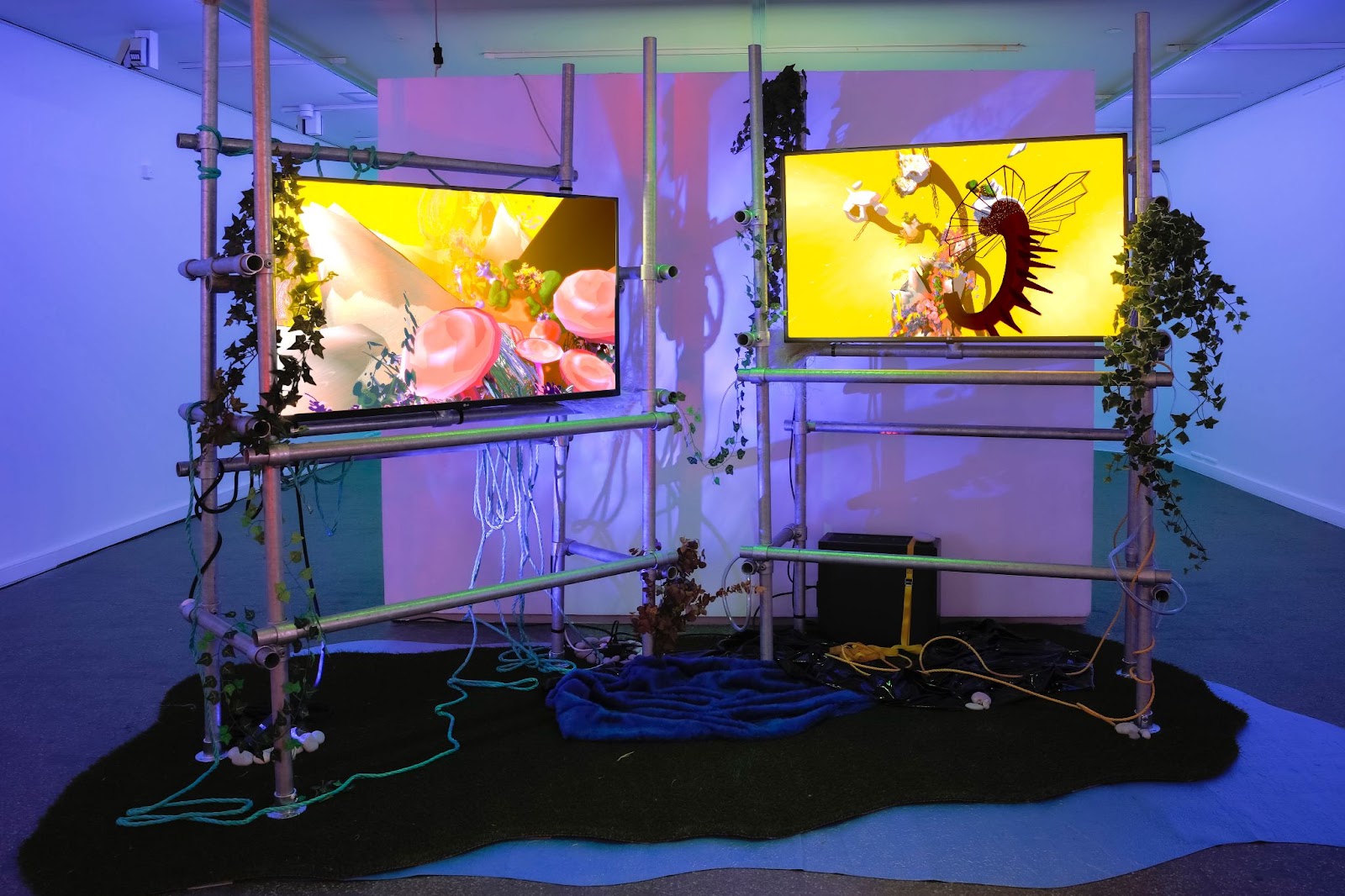

View from the back of the beast

As mentioned above, Supreme is a symbiotic world on the back of a mythic beast. This oneiric space is home to a number of different non-human Critters which interact with each other and with the world itself. Their worldview consists of a series of myths about the workings of their universe.

All non-human entities in this virtual universe interact using an animistic neural network system to give them life as an emergent property of the network. It is a virtual living symbiotic ecosystem which can be watched in the form of an infinitely unfolding animated story.

The Healer - one of the Critters found on the back of the World Beast.

The piece is imagined in the form of a diptych on two screens which offer two synchronised windows into the world. One screen shows a close-up view of the world with a focus on the entities which live there. The second presents a zoomed-out view of the world in which we can see the World Beast itself. In this way, the piece dynamically presents a multitude of perspectives from the individual to the hyperobject scale of the world itself.

Installation of Supreme for the exhibition Faire Monde, Arles, France, 2021

Photo by: Grégoire de Brevedent d'Ablon

Tools

In its essence, Supreme is a non-interactive video game. We made it using tools that will be familiar to any small independent team making a game: Unity as our game engine, Blender for 3D modeling and animation, Photoshop for creating and editing textures, Ableton for sound design and Wwise as our real-time audio engine.

Presentation

Supreme is designed to be presented in a gallery or art space as part of a scenographic art installation, as seen in the photo above from an exhibition in Arles, France. It is presented as a diptych on two 4K displays with stereo sound on speakers. The scenography can change from venue to venue, but the basic setup remains the same.

Here is an encounter with the World Beast in a booth at ArtVerona 2021:

Photo by: Virginia Bianchi

Symbiotic Soundscape - The Song of the Beast

The worlds that we make as Xenoangel are non-linear. In most cases, they don’t have an obvious start or end point, and it is the characters themselves which determine which actions they will undertake and when they will do them. Because of this, we have to design the soundscapes for the world to also be non-linear. The way we usually try to achieve this is to set up an audio pipeline which is principally sample-based and can be re-orchestrated and mixed with real-time effects in the audio engine.

An important element of Supreme is a symbiotic soundscape which is determined by a Call & Response style interaction between the World Beast and the Critters:

The World Beast periodically sings. Its song is a guttural growl that often rumbles in the sub frequencies of the audio. When Supreme sings, each of the Critters tries to respond. Supreme expects a certain rhythmic response, and the Critters try to learn this rhythm over time using a neural network/genetic algorithm. As they get closer to the expected rhythm, their Synchronicity with their world increases.
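The text doesn't say how closeness to the expected rhythm is measured. As an illustration only, here is a minimal Python sketch (the project's own code is C# in Unity). It assumes each rhythm is a list of note onset times in beats and scores a Critter's response by its average per-note timing error. The function name and the `tolerance` value are hypothetical.

```python
def synchronicity(expected: list[float], response: list[float],
                  tolerance: float = 0.5) -> float:
    """Return a score in [0, 1]; 1.0 means the response hits every expected onset.

    Hypothetical scoring: onsets are compared in order, and each note's timing
    error is divided by `tolerance` (in beats) and capped at 1.0.
    """
    if not expected:
        return 1.0 if not response else 0.0
    n = max(len(expected), len(response))
    penalty = 0.0
    for i in range(n):
        if i < len(expected) and i < len(response):
            # Timing error for this note, capped so one bad note costs at most 1.0.
            penalty += min(abs(expected[i] - response[i]) / tolerance, 1.0)
        else:
            # A missing or extra note counts as a full miss.
            penalty += 1.0
    return 1.0 - penalty / n
```

A score like this could also serve as the fitness value that a genetic algorithm uses to breed the next generation of Critter minds.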

This Synchronicity parameter underpins the entire Supreme ecosystem. The value of this parameter is used by the Critters when deciding on all of their actions.
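The text doesn't describe exactly how Synchronicity feeds into each decision. One simple way a single scalar can bias behaviour is to interpolate between an action's weight when out of sync and its weight when in sync, and then make a weighted random choice. The sketch below does this. The action names and weights are invented for illustration.

```python
import random

# Hypothetical actions: (weight when fully out of sync, weight when fully in sync).
ACTIONS = {
    "wander": (1.0, 0.2),
    "sing": (0.1, 1.0),
    "tend_object": (0.3, 0.8),
}

def action_weights(sync: float) -> dict[str, float]:
    """Linearly interpolate each action's weight by the Synchronicity value (clamped to [0, 1])."""
    sync = min(max(sync, 0.0), 1.0)
    return {name: lo + (hi - lo) * sync for name, (lo, hi) in ACTIONS.items()}

def choose_action(sync: float, rng: random.Random) -> str:
    """Pick one action at random, weighted by the interpolated weights."""
    weights = action_weights(sync)
    return rng.choices(list(weights), weights=list(weights.values()), k=1)[0]
```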

Making Samples

The samples used in Supreme come from a number of sources, from sample packs to original recorded material. We like to reuse and improve upon material from project to project, and so now we have a small library of sounds which we can dip into and remix each time we need a new sound.

For the Critters’ voices, we are mainly using samples from a recording session where we asked a singer to make a variety of alien sounds with her voice - to try and stretch her voice so that it no longer sounds human. These samples have appeared in almost all of our projects since they were recorded about 5 years ago.

Designing Sounds

Once we have our source material, I use Ableton as my DAW of choice to mess with the sounds. This is probably the most fun part of the process. I take the samples and layer them on top of each other, or stretch them so they are no longer recognisable. I run them through effects, reverse them, re-sample them, pitch-shift them… all of the fun stuff that you can do in Ableton when you don’t hold back trying to make something weird! At this stage, I also add synthesized sounds from virtual instruments in Ableton.

Some of my favorite things to use here are the effects and instruments from u-he, iZotope Iris 2, Valhalla Shimmer, zplane Elastique Pitch… the list goes on.

Programming

The sample-based soundscape in Supreme is composed in real-time and controlled by a number of C# classes in Unity.

For the song of the World Beast, there is a sequencer class which reads a MIDI file containing a number of different call options for the beast, as well as the ideal responses to each call. The sequencer chooses one of these calls, remixes it with a bit of randomness (because life is full of randomness!) and then passes it to the World Beast to sing.
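Below is a minimal Python sketch of the choose-and-remix step. It assumes the MIDI file has already been parsed into lists of notes, and it leaves out the MIDI parsing itself. `Note`, `Call`, `remix`, `next_call` and the jitter amounts are hypothetical names and values, not the project's actual classes.

```python
import random
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Note:
    pitch: int       # MIDI note number, 0-127
    velocity: int    # MIDI velocity, 1-127
    start: float     # onset in beats
    duration: float  # length in beats

@dataclass(frozen=True)
class Call:
    notes: tuple[Note, ...]           # what the beast sings
    ideal_response: tuple[Note, ...]  # what the Critters should answer

def remix(call: Call, rng: random.Random,
          timing_jitter: float = 0.1, velocity_jitter: int = 10) -> Call:
    """Return a copy of the call with small random timing and velocity changes."""
    notes = tuple(
        replace(
            n,
            start=max(0.0, n.start + rng.uniform(-timing_jitter, timing_jitter)),
            velocity=min(127, max(1, n.velocity + rng.randint(-velocity_jitter, velocity_jitter))),
        )
        for n in call.notes
    )
    # Only the sung notes change; the ideal response is kept as written.
    return replace(call, notes=notes)

def next_call(calls: list[Call], rng: random.Random) -> Call:
    """Pick one of the parsed calls at random and remix it for the beast to sing."""
    return remix(rng.choice(calls), rng)
```

In this sketch, only the sung call is varied and the ideal response is left untouched. The Critters are therefore always learning towards the same target, even though the beast never sings exactly the same phrase twice.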

Next, every Critter who hears the call of the beast passes the notes they hear as input to their neural network mind…

A quick note on our neural network:

We have been playing around with ideas of AI since our project, Sunshowers. For that project we created a simple neural network and genetic algorithm system in C# for Unity which is adapted from the C++ examples in Mat Buckland's book, “AI Techniques for Game Programming” from 2002.

It is a playful neural network whose only job is to learn to navigate the weird parameters that we have set up in our game worlds.

It is slow, with each tick of the network coinciding with an event in the game world. It can take the whole duration of an exhibition to train the network. The idea is that the characters learn about their world in real-time. We call this code Slow Thinking.
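Below is a minimal Python sketch of this kind of system. It is not the Xenoangel C# code. As in the neuroevolution examples in Buckland's book, a network's weights (including one bias weight per neuron) are stored as a single flat list, the genome. A genetic algorithm then breeds these genomes using roulette-wheel selection, single-point crossover and mutation. The layer sizes, mutation rate, perturbation size and the idea of breeding once per world event are assumptions for illustration.

```python
import math
import random

BIAS = -1.0  # constant bias input fed to every neuron

def sigmoid(x: float, response: float = 1.0) -> float:
    z = max(-60.0, min(60.0, x / response))  # clamp to avoid overflow in math.exp
    return 1.0 / (1.0 + math.exp(-z))

def genome_length(layer_sizes: list[int]) -> int:
    """Each neuron has one weight per input plus one bias weight."""
    return sum((n_in + 1) * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

def forward(layer_sizes: list[int], weights: list[float], inputs: list[float]) -> list[float]:
    """Feed inputs through the network, reading weights in order from the flat genome."""
    w = iter(weights)
    values = list(inputs)
    for n_out in layer_sizes[1:]:
        values = [sigmoid(sum(v * next(w) for v in values) + BIAS * next(w))
                  for _ in range(n_out)]
    return values

def roulette(population: list[list[float]], fitnesses: list[float], rng: random.Random) -> list[float]:
    """Pick a genome with probability proportional to its (non-negative) fitness."""
    pick = rng.uniform(0.0, sum(fitnesses))
    running = 0.0
    for genome, fit in zip(population, fitnesses):
        running += fit
        if running >= pick:
            return genome
    return population[-1]

def crossover(mum: list[float], dad: list[float], rng: random.Random) -> list[float]:
    """Single-point crossover: the head of one parent joined to the tail of the other."""
    point = rng.randrange(1, len(mum))
    return mum[:point] + dad[point:]

def mutate(genome: list[float], rng: random.Random,
           rate: float = 0.1, max_perturbation: float = 0.3) -> list[float]:
    """Nudge each weight by a small random amount with probability `rate`."""
    return [g + rng.uniform(-max_perturbation, max_perturbation) if rng.random() < rate else g
            for g in genome]

def next_generation(population: list[list[float]], fitnesses: list[float],
                    rng: random.Random) -> list[list[float]]:
    """Breed a new population of the same size. In a slow-thinking setup this would
    run only when a world event completes (e.g. after each song of the beast)."""
    return [mutate(crossover(roulette(population, fitnesses, rng),
                             roulette(population, fitnesses, rng), rng), rng)
            for _ in population]
```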

…so the neural network does its black-box magic and outputs the notes for the Critter to sing.

After all of this, the notes are passed as Events to Wwise with parameters for pitch, velocity, duration, etc.
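Below is a minimal sketch of how one note might be turned into an Event plus local Game Parameter values before it is posted. The Event and Game Parameter names, the root note and the scaling are assumptions. Pitch is expressed in cents because that is the unit Wwise uses for pitch.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class NoteEvent:
    event_name: str                     # hypothetical Wwise Event name
    game_parameters: dict[str, float]   # hypothetical Game Parameter name -> value

def note_to_event(pitch: int, velocity: int, duration_beats: float,
                  bpm: float, root_pitch: int = 60) -> NoteEvent:
    """Map a sung MIDI-style note onto an Event and its Game Parameter values."""
    return NoteEvent(
        event_name="Play_Critter_Voice",
        game_parameters={
            # Offset in cents from the sample's (assumed) root note.
            "Critter_Pitch": (pitch - root_pitch) * 100.0,
            # MIDI velocity 0-127 scaled to a 0-100 parameter range.
            "Critter_Velocity": velocity / 127 * 100.0,
            # Note length converted from beats to seconds.
            "Critter_Duration": duration_beats * 60.0 / bpm,
        },
    )
```

In the Wwise Unity integration, each entry would typically be applied with `AkSoundEngine.SetRTPCValue(name, value, gameObject)` before calling `AkSoundEngine.PostEvent(eventName, gameObject)`. Setting the values on the Critter's own game object keeps them local to that Critter.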

In Wwise

In Wwise I try to keep the setup as simple as possible, especially because we are only two people working on all aspects of these quite big projects.

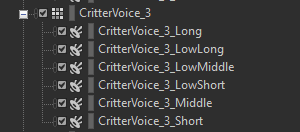

For Supreme, all of the samples are arranged in the Actor-Mixer Hierarchy and nested in various containers (Random, Switch, etc.) depending on their use cases.

For example, one of the Critter Voices is set up like this:

These are triggered by Events, with local Game Parameters and Switches used to change the parameters of the sound.

Keeping this simple and organised within the Wwise Authoring tool allows for easy iteration on both the Unity side and in terms of sound design in Ableton and Wwise. As most of the logic for the audio is controlled by classes within Unity, I am able to make changes to this and test it immediately without having to re-configure the setup within Wwise each time.

Finally, I use the real-time effects in Wwise to add atmosphere and variety to the samples, as well as for the mixdown of the audio. The initial mix is done in our studio; however, we tweak this in-situ for each presentation of the piece.

At the Install

Our pieces are designed to be shown in galleries or arts venues as part of custom installations. Therefore, each presentation is unique and has to be adapted to work for the space available. This is particularly important for the sound, as the speakers and the physical dimensions of the space have a big impact on the way a piece sounds.

With that in mind, as part of an install for our work, we usually dedicate a good chunk of time to edit the mix and EQ of the sound in the exhibition space. This is one of the big benefits of working with Wwise as an audio engine in this context - the Wwise Authoring tool makes it really easy to mix and change effect parameters as the piece is playing in real-time.

In order to do this, we use the Remote Connection capability of the Wwise Authoring tool to connect to the piece from another computer. Then we sit in the space, walk around, listen and make adjustments to the mix until we are happy with how it is sounding. After that we can easily rebuild the SoundBanks for that particular presentation.

For some of our pieces, we have even integrated a UI for mixing and EQ into the user interface of the Unity app. While this takes a bit more development time to set up, it allows someone else to install our work without the need to rebuild SoundBanks each time. This is important for our piece Sunshowers, which is part of a touring exhibition about AI.
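The text doesn't say how the in-app mixer stores its settings. The Python sketch below shows one plausible arrangement. Each on-screen fader drives a Game Parameter mapped in Wwise to a bus volume or EQ gain. The fader positions for a venue are saved to a small JSON preset that the app loads at startup. Because only Game Parameter values change, the SoundBanks stay untouched. The parameter names and the ±24 dB limit are hypothetical.

```python
import json

# Hypothetical Game Parameters exposed by the in-app mixer, in dB (0.0 = as built).
DEFAULT_MIX = {
    "Master_Volume": 0.0,
    "Beast_Volume": 0.0,
    "Critters_Volume": 0.0,
    "Low_Shelf_Gain": 0.0,
}

def load_venue_mix(text: str, limit_db: float = 24.0) -> dict[str, float]:
    """Merge a saved venue preset over the defaults, ignoring unknown keys and clamping values."""
    saved = json.loads(text) if text else {}
    mix = dict(DEFAULT_MIX)
    for name, value in saved.items():
        if name in mix:
            mix[name] = max(-limit_db, min(limit_db, float(value)))
    return mix

def save_venue_mix(mix: dict[str, float]) -> str:
    """Serialise the current fader positions so the next startup restores them."""
    return json.dumps(mix, indent=2, sort_keys=True)
```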

Conclusions and Next Steps

After reading this, I hope you have an idea of the work I am doing with Xenoangel and a little bit of an insight into the sound world of Supreme. For us, our work is explorative with each new project building on what we’ve learnt from the last.

We are lucky that the tools available these days allow us to undertake such projects with our tiny two person team. Without such democratizing tools as Ableton, Unity and Wwise, this would not be possible. In terms of real-time sound for Unity-based projects, Wwise has allowed us a lot of flexibility to creatively and efficiently develop our ideas.

As for next steps, we are interested in non-standard speaker configurations for our scenographic installations. This can be non-trivial with game engines, which are usually designed to work out of the box with standard home console setups but not so easily with unconventional installations. I have already begun some R&D into this by creating custom Wwise plugins for ambisonic outputs. It's in the early stages, but I hope that this will allow us to create new and exciting simulated worlds of sound! Be sure to watch this space!
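The text doesn't describe how the plugins work internally (Wwise plug-ins are written in C++). To illustrate what an ambisonic output represents, here is a minimal Python sketch of first-order encoding in the AmbiX convention, which uses ACN channel order (W, Y, Z, X) and SN3D normalisation. A mono source is encoded into four channels that describe the sound field independently of any particular speaker layout. The function names are hypothetical.

```python
import math

def foa_gains(azimuth: float, elevation: float) -> list[float]:
    """First-order AmbiX gains (ACN order W, Y, Z, X; SN3D) for a source direction.

    Angles are in radians: azimuth 0 is straight ahead and positive azimuth
    turns to the left; positive elevation is upwards.
    """
    cos_el = math.cos(elevation)
    return [
        1.0,                           # W: omnidirectional
        math.sin(azimuth) * cos_el,    # Y: left-right
        math.sin(elevation),           # Z: up-down
        math.cos(azimuth) * cos_el,    # X: front-back
    ]

def encode_block(samples: list[float], azimuth: float, elevation: float) -> list[list[float]]:
    """Encode a block of mono samples into four ambisonic channels."""
    return [[g * s for s in samples] for g in foa_gains(azimuth, elevation)]
```

Decoding these four channels to a particular venue's speakers is a separate step. This separation is what makes the format attractive for unconventional layouts: the same encoded scene can be rendered to whatever speaker arrangement an installation uses.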
