The SpatialAudio module exposes a number of services related to spatial audio, notably to:

Under the hood, it:

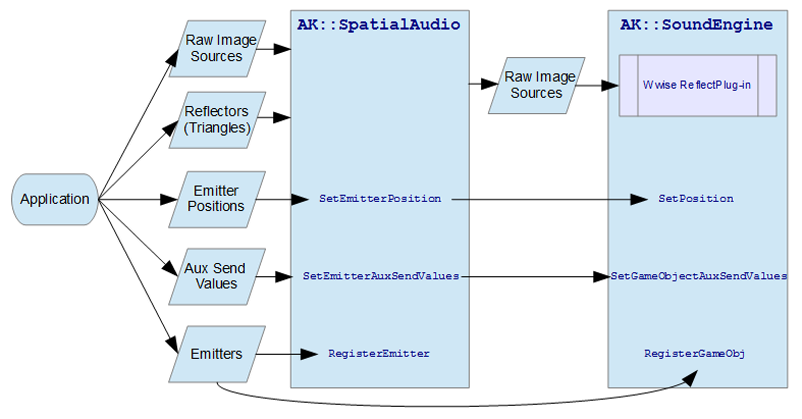

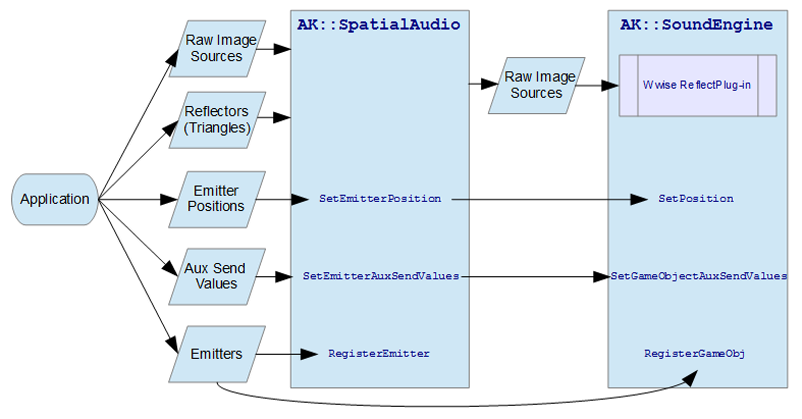

It is a game-side SDK component that wraps a part of the Wwise sound engine, as shown in the following flowchart.

The SpatialAudio functions and definitions can be found in SDK/include/AK/SpatialAudio/Common/. Its main functions are exposed in namespace AK::SpatialAudio. Here is an overview:

- AK::SpatialAudio::RegisterEmitter and AK::SpatialAudio::UnregisterEmitter: Make SpatialAudio aware of the emitter game objects used in the rest of the API.
- AK::SpatialAudio::SetEmitterPosition and AK::SpatialAudio::SetEmitterAuxSendValues: Use these functions instead of their AK::SoundEngine counterparts (AK::SoundEngine::SetPosition and AK::SoundEngine::SetGameObjectAuxSendValues) for the emitters registered to SpatialAudio.
- AK::SpatialAudio::AddImageSource and AK::SpatialAudio::RemoveImageSource: Used for direct control of Wwise Reflect image sources. See 'Using "raw" Image Sources' below.
- AK::SpatialAudio::AddGeometrySet and AK::SpatialAudio::RemoveGeometrySet: Have SpatialAudio calculate image sources based on geometry, to later send to Wwise Reflect instances.
- AK::SpatialAudio::AddRoom/AK::SpatialAudio::RemoveRoom, AK::SpatialAudio::AddPortal/AK::SpatialAudio::RemovePortal, AK::SpatialAudio::SetGameObjectInRoom: Create rooms and portals, and let AK::SpatialAudio know in which room emitters are located.

Note: You may replace all your calls to AK::SoundEngine::SetPosition and AK::SoundEngine::SetGameObjectAuxSendValues with those of AK::SpatialAudio. The calls for which the object has not been registered to AK::SpatialAudio via RegisterEmitter are forwarded directly to AK::SoundEngine.
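The forwarding behavior described in this note can be pictured with a minimal C++ sketch. It is only an illustration of the routing idea, not the SDK's implementation: `PositionRouter`, `Route`, `GameObjectId`, and `kInvalidObject` are hypothetical names that do not exist in the Wwise SDK.

```cpp
#include <cassert>
#include <cstdint>
#include <unordered_set>

// Hypothetical stand-ins for illustration only; none of these are Wwise SDK types.
using GameObjectId = std::uint64_t;
constexpr GameObjectId kInvalidObject = 0;  // assumed sentinel, not an SDK constant

enum class Route { SpatialAudio, SoundEngine };

class PositionRouter {
public:
    // Plays the role of RegisterEmitter: remember which objects are emitters.
    void registerEmitter(GameObjectId id) {
        assert(id != kInvalidObject);
        emitters_.insert(id);
    }

    // Plays the role of UnregisterEmitter.
    void unregisterEmitter(GameObjectId id) { emitters_.erase(id); }

    // Registered emitters are handled by the spatial audio layer;
    // every other object is forwarded to the underlying sound engine.
    Route routeSetPosition(GameObjectId id) const {
        return emitters_.count(id) != 0 ? Route::SpatialAudio : Route::SoundEngine;
    }

private:
    std::unordered_set<GameObjectId> emitters_;
};
```

Under this model, a game can send every position update through a single entry point and let the registration state decide which layer handles it.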

As mentioned above, Spatial Audio may be used in two different (but complementary) ways to produce dynamic early reflections (ER, for short): by using the Geometry API or by feeding Wwise Reflect directly with raw image sources. These are described in the following two subsections.

For an introduction to geometry-driven ER, refer, for example, to our blog post "Creating compelling reverberations for virtual reality".

Initialize SpatialAudio using AK::SpatialAudio::Init().

For each emitter that should support dynamic ER, call AK::SpatialAudio::RegisterEmitter() after having registered the corresponding game object to the sound engine. Use the AkEmitterSettings for determining the parameters of the reflections calculation.

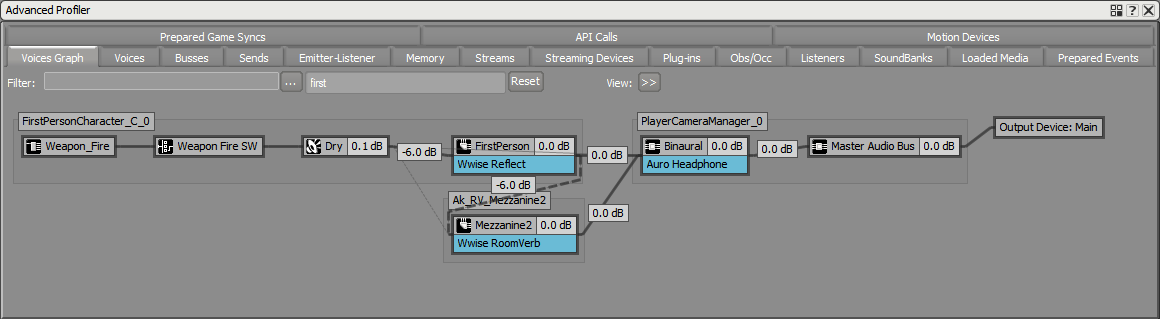

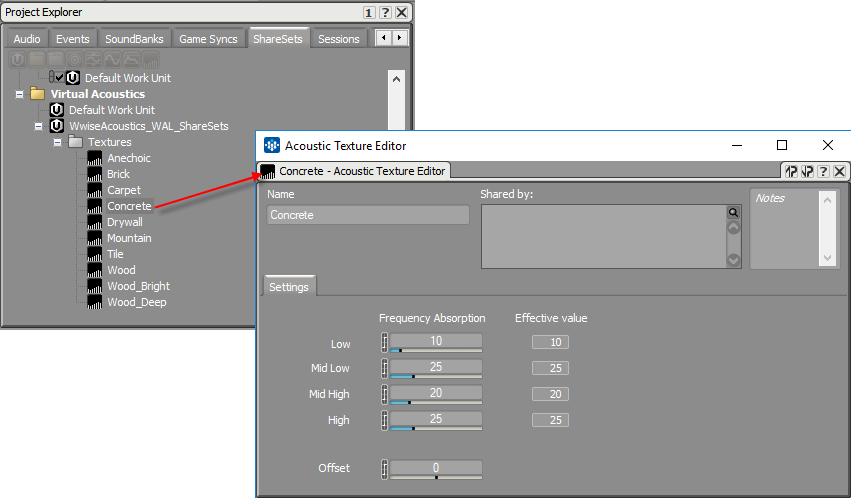

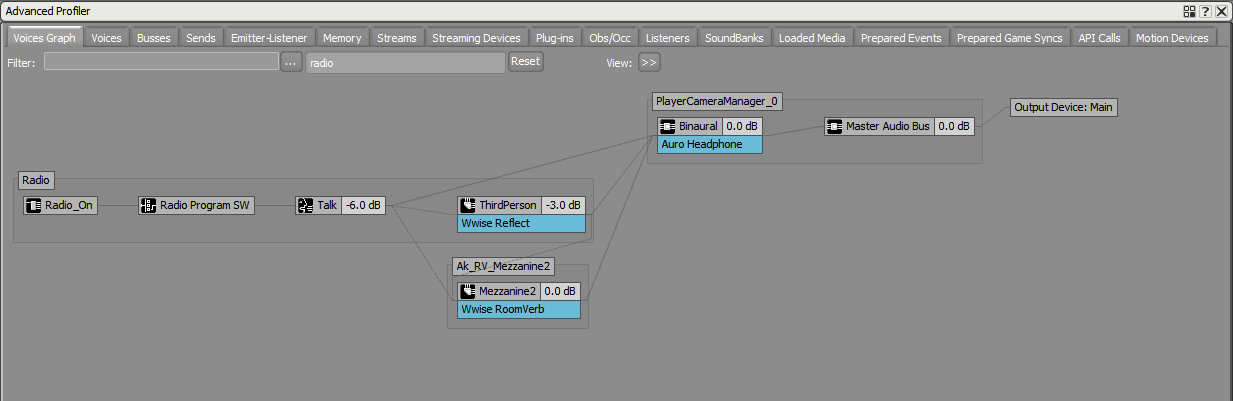

- reflectAuxBusID: The ID of the Auxiliary Bus that hosts the desired Wwise Reflect plug-in. SpatialAudio will establish an aux send connection to this bus, but to an instance of the bus that is associated with the same game object as the emitter. This means that different emitters will send to different instances of the auxiliary bus, and thus to different instances of the plug-in. This is crucial, as sets of early reflections are unique to each emitter. This is illustrated in the screenshot of the Voice Graph in 'Wwise project setup', below.
- reflectionsAuxBusGain: Send level towards the auxiliary bus.
- reflectionsOrder: Determines the order of reflections calculated. Reflections caused by hitting one surface are called first order reflections. Second order reflections are produced by sounds hitting two surfaces before reaching the listener, and so on. Beware! The number of generated reflections grows exponentially with order.
- reflectionMaxPathLength: Heuristic to stop the computation of reflections. It should be set to no more than the Max Distance of the sounds played by this emitter.

Use AK::SpatialAudio::SetEmitterPosition and AK::SpatialAudio::SetEmitterAuxSendValues for these emitters instead of the sound engine's functions.

Push the relevant geometry to SpatialAudio using AK::SpatialAudio::AddGeometrySet and AK::SpatialAudio::RemoveGeometrySet.

For each reflector passed to AK::SpatialAudio::AddGeometrySet, you are asked to identify an Acoustic Texture ShareSet, as defined in the Wwise Project. See AkTriangle::textureID. These Acoustic Textures may be seen as the material's reflective properties, and will have an effect on the filtering applied to the calculated early reflections.

You need to understand the following aspects of the bus structure design for handling dynamic environmental effects in your Wwise project effectively.

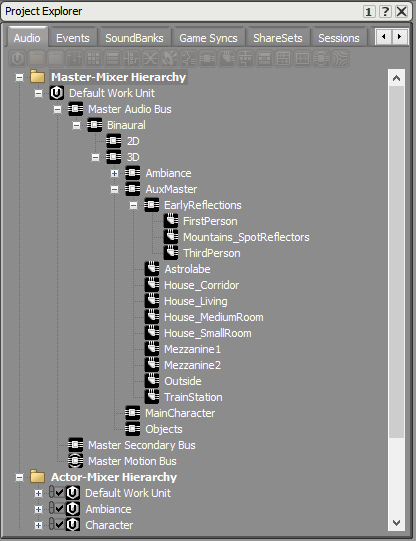

Auxiliary bus design

Typically, different Auxiliary Busses are used to represent different environments, and these busses may host different reverb ShareSets that emulate the reverberating characteristics of these environments. When using dynamic ER, such as those processed by Wwise Reflect under SpatialAudio, late reverberation may still be designed using reverbs on Auxiliary Busses. However, you may want to disable the ER section of these reverbs (if applicable), as this should be taken care of by Wwise Reflect.

On the other hand, Wwise Reflect should run in parallel with the aux busses used for the late reverberation. The figure below shows a typical bus structure, where the three Auxiliary Busses under the EarlyReflections bus each contain a different ShareSet of Wwise Reflect. You will note that in this design, we only use a handful of ShareSets for generating early reflections. This is motivated by the fact that the "spatial aspect" of this Effect is driven by the game geometry at run-time. We only use different ShareSets here because we want different attenuation curves for sounds emitted by the player (listener) than those emitted by other objects.

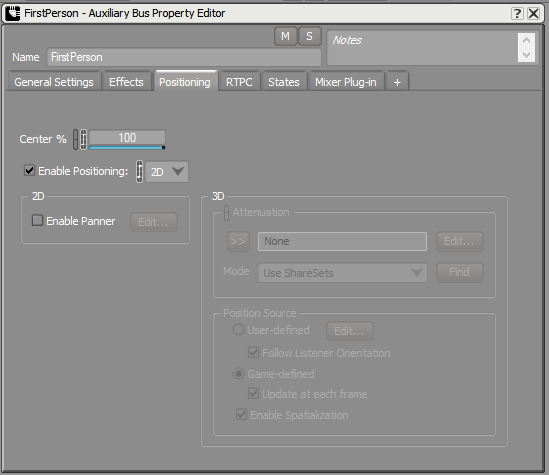

Bus instances

The ER bus (hosting Wwise Reflect) will exist in as many instances as there are emitters for which you want early reflections to be generated. This is done by registering these emitters with the Spatial Audio API and sending them to this bus, as was described in the previous section. In order for this to work properly, you need to enable the Positioning check box for this bus, as shown in the image below. By doing this, the signal generated by the various instances of the ER bus will be properly mixed into a single instance of the next mixing bus, downstream. This single instance corresponds to the game object that is listening to this emitter (set via AK::SoundEngine::SetListeners), which is typically the final listener, corresponding to the player (or camera).

Warning: Although the ER bus's positioning must be enabled in order to ensure that all its emitter-instances merge into the listener's busses, the positioning type must be set to 2D, not 3D, to avoid "double 3D positioning" by Wwise. See the documentation of Wwise Reflect for more details.

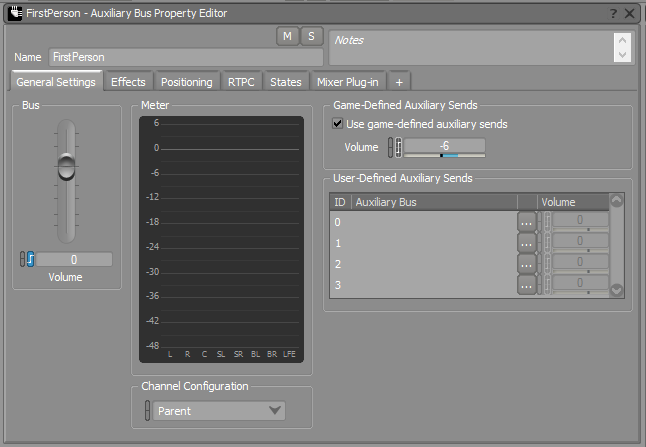

Early reflections sending to late reverberation

Also, by virtue of the game object (emitter) sending to the Auxiliary Bus used to process the late reverberation, a connection will also be made between the ER bus and the late reverb bus. This is usually desirable because the generated ER are then utilized to color and "densify" the late reverb. In order to enable this, you need to make sure you enable the Use game-defined auxiliary sends check box on the ER bus. You may then use the Volume slider below to balance the amount of early reflections you want to send to the late reverb against the direct sound.

The following figure is a run-time illustration of the previous discussion.

Using Acoustic Textures

For each reflecting triangle, the game passes the ID of a material. These materials are edited in the Wwise Project in the form of Acoustic Textures, in the Virtual Acoustics ShareSets. This is where you may define the absorption characteristics of each material.

While Wwise Reflect may be used and controlled by the game directly using AK::SoundEngine::SendPluginCustomGameData, AK::SpatialAudio makes its use easier by providing convenient per-emitter bookkeeping, as well as packaging of image sources. Also, it lets you mix and match "raw" image sources with surface reflectors (potentially on the same target bus/plug-in).

Call AddImageSource for each image source. Target the bus ID and optional game object ID (note that the game object ID may also be a listener or the main listener). Refer to AkReflectImageSource for more details on how to describe an image source.

Image sources may be provided to Reflect by game engines that already implement this functionality, via ray-casting or their own image-source algorithm, for example.
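For engines that compute their own image sources, the core geometric step of the image-source method is mirroring the emitter position across a reflecting plane. The following is a minimal sketch of that step for a first-order reflection, under stated assumptions: `Vec3`, `Plane`, and `mirrorAcrossPlane` are hypothetical helper names, not SDK types, and the sketch does not show how the result is packaged into AkReflectImageSource.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper types for illustration; not part of the Wwise SDK.
struct Vec3 { double x, y, z; };

static double dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// A reflecting plane defined by any point on it and a non-zero normal
// (the normal does not need to be unit length).
struct Plane { Vec3 point; Vec3 normal; };

// Returns the first-order image source: the emitter mirrored across the plane.
Vec3 mirrorAcrossPlane(const Vec3& emitter, const Plane& plane) {
    const double nn = dot(plane.normal, plane.normal);
    assert(nn > 0.0);  // a zero-length normal does not define a plane
    const Vec3 d{emitter.x - plane.point.x,
                 emitter.y - plane.point.y,
                 emitter.z - plane.point.z};
    // Twice the signed distance to the plane, expressed in units of the normal.
    const double k = 2.0 * dot(d, plane.normal) / nn;
    return {emitter.x - k * plane.normal.x,
            emitter.y - k * plane.normal.y,
            emitter.z - k * plane.normal.z};
}
```

For example, an emitter at (2, 1, 3) mirrored across a wall lying in the plane x = 5 yields an image source at (8, 1, 3). Higher-order image sources can be obtained by mirroring an image source again across another surface, which is why the number of candidates grows quickly with order.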

The Wwise project setup is the same as the one described above for the Geometry API (Using Surface Reflectors for Simulating Early Reflections). You can also refer to the Wwise Reflect documentation in the Wwise Help for an example design of Reflect on FPS sound.

The Rooms and Portals services present an abstraction for positioned (and oriented) rooms, which utilizes the 3D busses functionality under the hood. Typically, different Auxiliary Busses are used to represent different environments, and these busses may host different reverb ShareSets that emulate the reverberating characteristics of these environments. "Rooms" are just like environments, and are modeled in the Wwise project with auxiliary busses too: Sounds inside Rooms are mixed together in the auxiliary bus, and this downmix is typically run into a reverb that represents the room effect (late reverberation). However, Rooms differ from standard environments in that they are associated to a distinct game object, instead of being implicitly associated to the listener. A Room's game object is thus both a listener (of emitters inside the room) and an emitter (the room's reverberation). This allows Rooms, that is, the output of the room's reverb, to be positioned in the 3D world, just as any other 3D emitter.

Additionally, the AK::SpatialAudio module lets you define Portals, that is, openings by which the reverberated room sound may leak into other rooms.

The AK::SpatialAudio module manages the Rooms' and Portals' game objects, and the association with emitters inside Rooms. The Portals are located at the position that is set by the game. On the other hand, the game only sets Room orientation. Room position is maintained by AK::SpatialAudio and is set equal to the listener's position when the listener is inside the Room.

See the Wwise project setup above for the Geometry API: Using Surface Reflectors for Simulating Early Reflections to gain a better understanding of the use and functioning of 3D busses in the context of spatial audio.

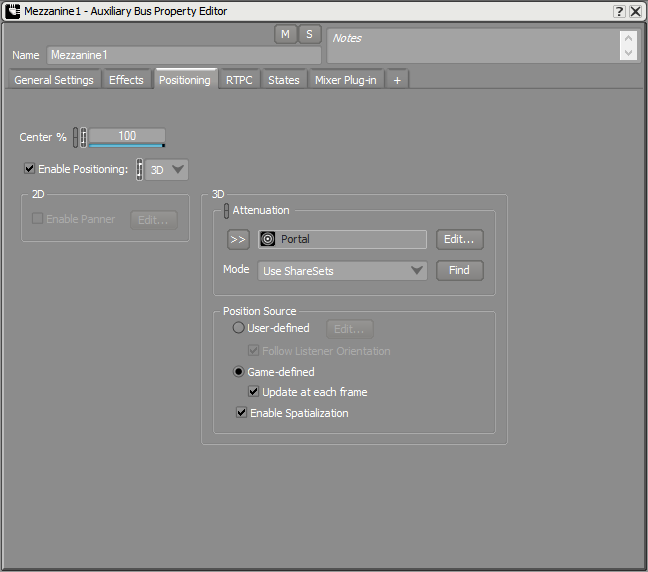

The Auxiliary Bus design is not fundamentally different from the traditional modeling of environments. The only difference is that the bus should be made 3D by enabling positioning (in the Positioning tab) and setting the Positioning Type to 3D, as shown in the figure below.

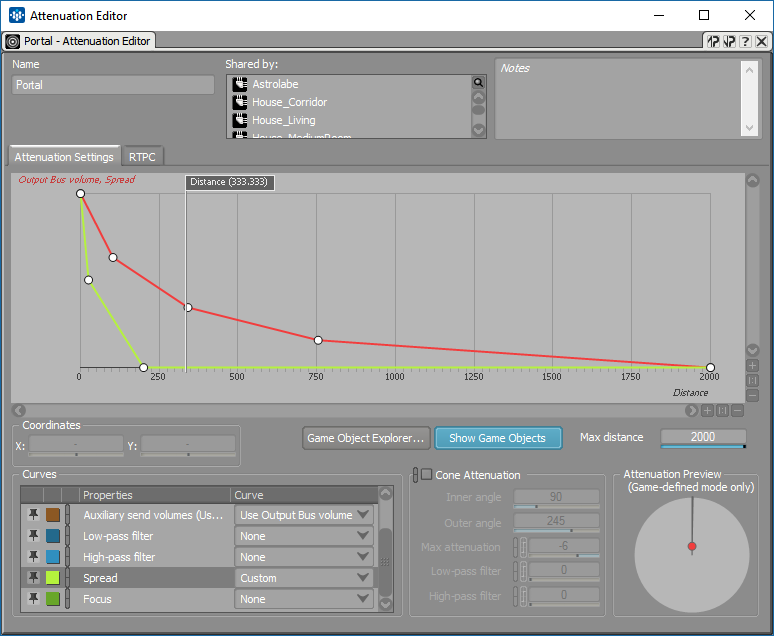

In order to allow the Rooms' reverb to envelop the listener when she is inside the Room, you must specify an attenuation ShareSet, and set a Spread curve with 100 spread at distance zero.

Caution: It is important to understand that the attenuation settings and 3D panning set on the 3D auxiliary bus apply between the Room Portal game objects and listener. The attenuation applied between emitters inside the Room and the 3D auxiliary bus is that which is defined on the sound/actor-mixer structures that are playing on the emitters in the Room.

The ShareSet used in the example below is called "Portal" because it is designed according to the behavior of the Rooms' Portals: as the listener moves away from a Portal, it becomes a point source. At distance zero, the listener has completely crossed the Portal and is inside the Room. As mentioned above, the Room object follows the listener when it is inside the room, so the curves are always evaluated at distance zero when this is the case.
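To make the behavior of such a Spread curve concrete, here is a small sketch that evaluates a distance-to-spread curve. It assumes a piecewise-linear curve with points sorted by distance; the curve shapes available in the authoring tool are not limited to linear segments, and `CurvePoint` and `evaluateSpread` are hypothetical names used only for this illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical curve representation for illustration; not an SDK type.
struct CurvePoint { double distance; double spread; };

// Evaluates a piecewise-linear curve whose points are sorted by increasing distance.
// Distances outside the curve's range are clamped to the first or last point.
double evaluateSpread(const std::vector<CurvePoint>& curve, double distance) {
    assert(!curve.empty());
    if (distance <= curve.front().distance) return curve.front().spread;
    if (distance >= curve.back().distance) return curve.back().spread;
    for (std::size_t i = 1; i < curve.size(); ++i) {
        if (distance <= curve[i].distance) {
            const CurvePoint& a = curve[i - 1];
            const CurvePoint& b = curve[i];
            // Linear interpolation between the two surrounding points.
            const double t = (distance - a.distance) / (b.distance - a.distance);
            return a.spread + t * (b.spread - a.spread);
        }
    }
    return curve.back().spread;
}
```

With an assumed two-point curve of (0, 100) and (10, 0), the spread is 100 at distance zero, which is the value that applies whenever the listener is inside the Room, and it falls to 0 (a point source) at distance 10 and beyond.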

The screenshot below shows an emitter, the radio, sending to the Auxiliary Bus Mezzanine2. This environment has been registered as a Room per the AK::SpatialAudio module, and you can see that a separate game object has been created that is neither the Radio nor the listener (PlayerCameraManager_0).

The game needs to register Rooms and Portals using AK::SpatialAudio::AddRoom and AK::SpatialAudio::AddPortal. Then, it periodically updates the send values of emitters using AK::SpatialAudio::SetEmitterAuxSendValues. Additionally, it has to declare in which Room the emitters and, possibly, the listeners are at any given time using AK::SpatialAudio::SetGameObjectInRoom.
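Because the game is responsible for declaring which Room each game object is in, it needs some containment test of its own. The following is a minimal game-side sketch, assuming Rooms can be approximated by axis-aligned boxes. `RoomBox`, `Point`, `findRoom`, and the `kNoRoom` sentinel are hypothetical names and are not part of the SDK; the result would then be passed to AK::SpatialAudio::SetGameObjectInRoom whenever it changes.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical game-side types for illustration; not Wwise SDK types.
using RoomId = std::uint64_t;
constexpr RoomId kNoRoom = 0;  // assumed sentinel meaning "not inside any room"

struct Point { double x, y, z; };

// A room approximated by an axis-aligned bounding box (an assumption of this sketch).
struct RoomBox { RoomId id; Point min; Point max; };

bool contains(const RoomBox& room, const Point& p) {
    return p.x >= room.min.x && p.x <= room.max.x &&
           p.y >= room.min.y && p.y <= room.max.y &&
           p.z >= room.min.z && p.z <= room.max.z;
}

// Returns the ID of the first room containing the point, or kNoRoom if none does.
RoomId findRoom(const std::vector<RoomBox>& rooms, const Point& p) {
    for (const RoomBox& room : rooms) {
        assert(room.id != kNoRoom);  // real rooms must not use the sentinel ID
        if (contains(room, p)) return room.id;
    }
    return kNoRoom;
}
```

Running this test only when an emitter or listener moves, and notifying Spatial Audio only when the returned ID differs from the previous one, keeps the per-frame cost low. Real levels often need more precise volumes than boxes, in which case the engine's own volume or trigger queries can replace `contains`.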

When using the Rooms and Portals API, it is expected that all late reverb aux busses are set to “Positioning Enabled” and positioning type “3D”. This ensures that the aux bus is correctly instantiated in the voice graph for both portals and oriented rooms. Spatial Audio uses the same aux bus both for the room that the listener is in and for portals (rooms that the listener is not in); however, in each scenario the aux bus is instantiated on a different game object. The behaviour in these two scenarios is as follows:

If the emitter and the listener are in the same room:

AkRoomParams.

If the emitter is in a room that is adjacent to the listener, connected by one or more portals:

AkPortalParams.