The Spatial Audio module exposes a number of services related to spatial audio, notably to:

Under the hood, it:

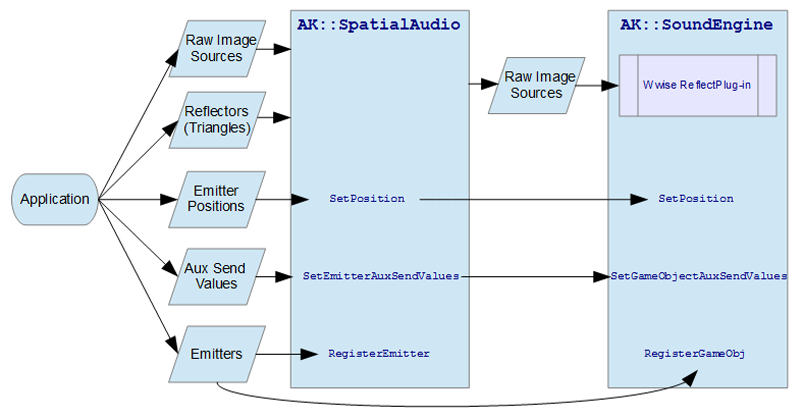

It is a game-side SDK component that wraps a part of the Wwise sound engine, as shown in the following flowchart.

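The Spatial Audio functions and definitions can be found in SDK/include/AK/SpatialAudio/Common/. Its main functions are exposed in namespace AK::SpatialAudio. There are 4 API categories: Basic Functions, the Rooms and Portals API, the Geometry API, and helper functions for accessing the raw API of Wwise Reflect.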

Beyond AK::SpatialAudio::Init(), Basic Functions are used to make Spatial Audio aware of game objects as emitters and/or listeners and their position, in order to use the other services of Spatial Audio. The Rooms and Portals API is a simple, high-level geometry abstraction used for modeling propagation of sound emitters located in other rooms. The Geometry API uses triangles directly to compute image sources for simulating dynamic early reflections with Wwise Reflect. Spatial Audio also exposes helper functions for accessing the raw API of Wwise Reflect directly.

Initialize Spatial Audio using AK::SpatialAudio::Init().

All game objects need to be registered to the Wwise sound engine with AK::SoundEngine::RegisterGameObj, but in order to use them with Spatial Audio, you also need to register them to AK::SpatialAudio as emitters, using AK::SpatialAudio::RegisterEmitter.

You also need to register your one and only listener to AK::SpatialAudio with AK::SpatialAudio::RegisterListener.

Warning: At the moment, Spatial Audio only supports one top-level listener.

To let Spatial Audio know where emitters and the listener are, call AK::SpatialAudio::SetPosition instead of its AK::SoundEngine counterpart (AK::SoundEngine::SetPosition). Also, since it may set game-defined auxiliary sends transparently, you need to call AK::SpatialAudio::SetEmitterAuxSendValues instead of AK::SoundEngine::SetGameObjectAuxSendValues if you want to set your own custom game-defined auxiliary sends without interfering with what Spatial Audio does.

Note: You may replace all your calls to AK::SoundEngine::SetPosition and AK::SoundEngine::SetGameObjectAuxSendValues with those of AK::SpatialAudio. The calls for which the object has not been registered to Spatial Audio via AK::SpatialAudio::RegisterEmitter are forwarded directly to AK::SoundEngine.

Warning: Spatial Audio handles multiple calculated positions of Rooms and Portals. However, multiple positions cannot be specified for Spatial Audio emitters or listeners. So, if you have set multiple positions for a game object, do not register it to Spatial Audio as either an emitter or a listener.

The Geometry API uses emitter and listener positions, together with triangles of your game's (typically simplified) geometry, to compute image sources for simulating dynamic early reflections in conjunction with the Wwise Reflect plug-in. Sound designers control how image source positions translate into sound directly in Wwise Reflect, by tweaking properties based on distance and materials.

For an introduction to geometry-driven early reflections (ER for short), refer to our blog posts, such as Image Source Approach to Dynamic Early Reflections and Creating compelling reverberations for virtual reality.
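The image source idea behind geometry-driven early reflections can be illustrated with a minimal sketch. It mirrors an emitter across the plane of a single reflecting triangle to obtain a first-order image source. The `Vec3` type and the functions `firstOrderImageSource` and `reflectedPathLength` are hypothetical names introduced for this illustration; they are not part of the Wwise SDK, and the sketch is not a description of how Spatial Audio is implemented internally. It also omits the check that the reflection point actually falls inside the triangle.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper types for illustration; these are not Wwise SDK types.
struct Vec3 { double x, y, z; };

inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(Vec3 v, double s) { return {v.x * s, v.y * s, v.z * s}; }
inline double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
inline double length(Vec3 v) { return std::sqrt(dot(v, v)); }

// Mirrors the emitter across the plane that contains triangle (p0, p1, p2).
// The mirrored point is the first-order image source for that reflector.
inline Vec3 firstOrderImageSource(Vec3 emitter, Vec3 p0, Vec3 p1, Vec3 p2) {
    Vec3 normal = cross(p1 - p0, p2 - p0);
    double normalLength = length(normal);
    assert(normalLength > 0.0 && "triangle must not be degenerate");
    normal = normal * (1.0 / normalLength);
    double signedDistance = dot(emitter - p0, normal);
    return emitter - normal * (2.0 * signedDistance);
}

// The emitter -> surface -> listener path has the same length as the
// straight line from the image source to the listener.
inline double reflectedPathLength(Vec3 imageSource, Vec3 listener) {
    return length(listener - imageSource);
}
```

Higher-order image sources follow by mirroring an image source again across another reflector, which is why the number of candidates grows quickly with the reflection order.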

For each emitter that should support dynamic ER, call AK::SpatialAudio::RegisterEmitter() after having registered the corresponding game object to the sound engine. Use the AkEmitterSettings for determining the parameters of the reflections calculation.

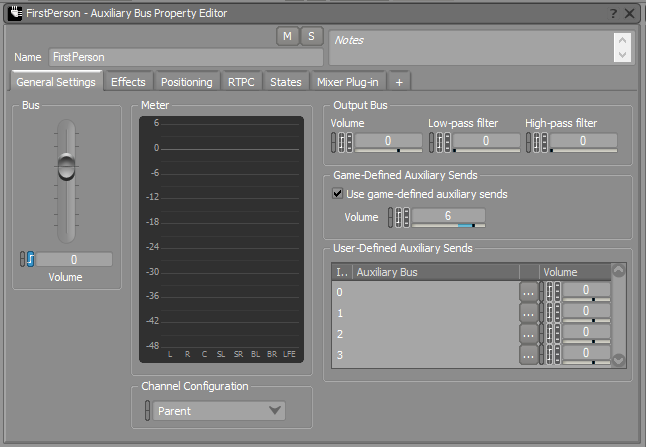

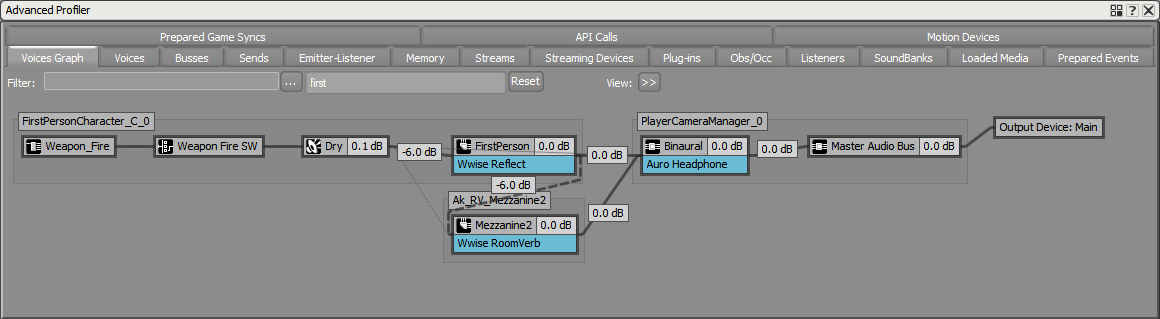

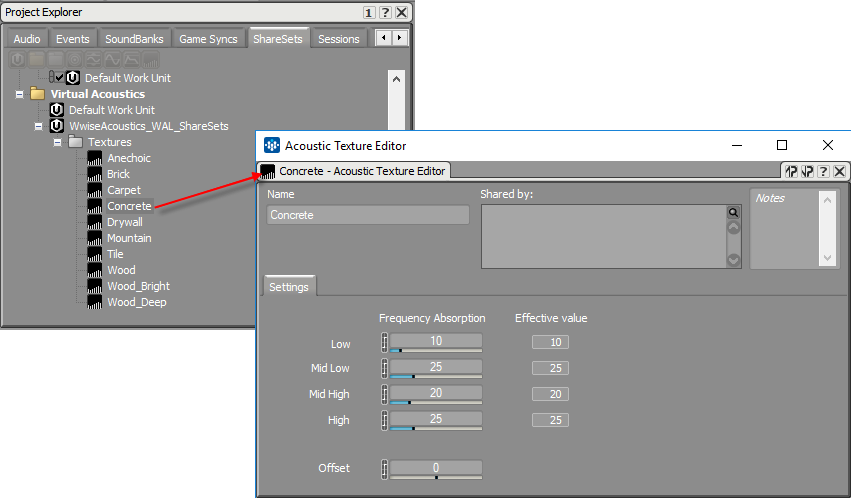

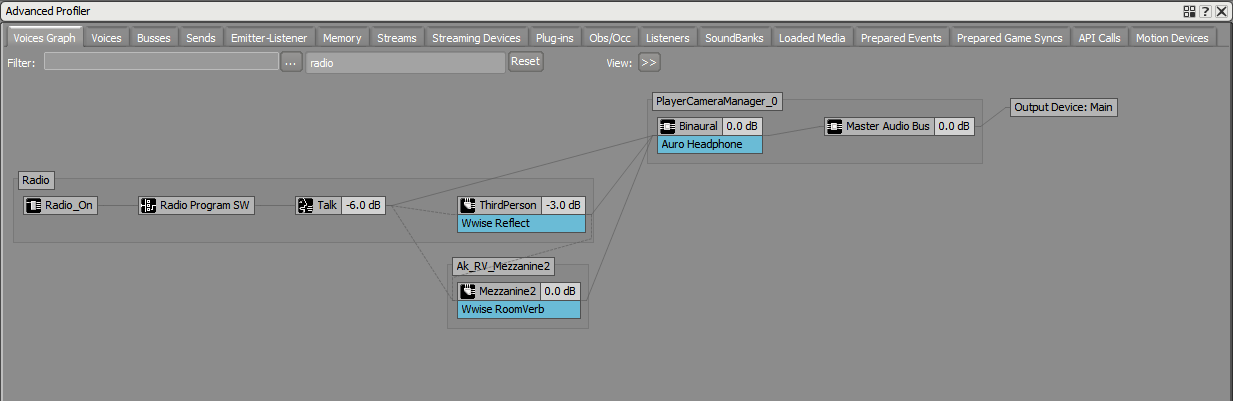

AkEmitterSettings::reflectAuxBusID: The ID of the Auxiliary Bus that hosts the desired Wwise Reflect plug-in. Spatial Audio will establish an aux send connection to this bus. It will, however, be to an instance of the bus that is associated with the same game object as the emitter. This means that different emitters will send to different instances of the Auxiliary Bus, and thus to different instances of the plug-in. This is crucial because sets of early reflections are unique to each emitter. This is illustrated in the screenshot of the Voices Graph in 'Wwise project setup', seen below.

AkEmitterSettings::reflectionsAuxBusGain: The send level directed towards the Auxiliary Bus.

AkEmitterSettings::reflectionsOrder: The order of reflections to be calculated. Reflections caused by hitting one surface are called first order reflections. Second order reflections are produced by sounds hitting two surfaces before reaching the listener, and so on. Beware! The number of generated reflections grows exponentially with each order.

AkEmitterSettings::reflectionMaxPathLength: A heuristic to stop the computation of reflections. It should be set to no more than the Max Distance of the sounds played by this emitter.

Use AK::SpatialAudio::SetPosition and AK::SpatialAudio::SetEmitterAuxSendValues for these emitters instead of the sound engine's functions.

Push the relevant geometry to Spatial Audio using AK::SpatialAudio::SetGeometry and AK::SpatialAudio::RemoveGeometry. For each reflector passed to AK::SpatialAudio::SetGeometry, you are asked to identify an Acoustic Texture ShareSet, as defined in the Wwise Project. See AkTriangle::textureID. These Acoustic Textures may be seen as the material's reflective properties, and will have an effect on the filtering applied to the calculated early reflections.

You need to understand the following aspects of the bus structure design for handling dynamic environmental effects in your Wwise project effectively.

Typically, different Auxiliary Busses are used to represent different environments, and these busses may host different reverb ShareSets that emulate the reverberating characteristics of these environments. When using dynamic ER, such as those processed by Wwise Reflect under Spatial Audio, late reverberation may still be designed using reverbs on Auxiliary Busses. However, you may want to disable the ER section of these reverbs (if applicable), as this should be taken care of by Wwise Reflect.

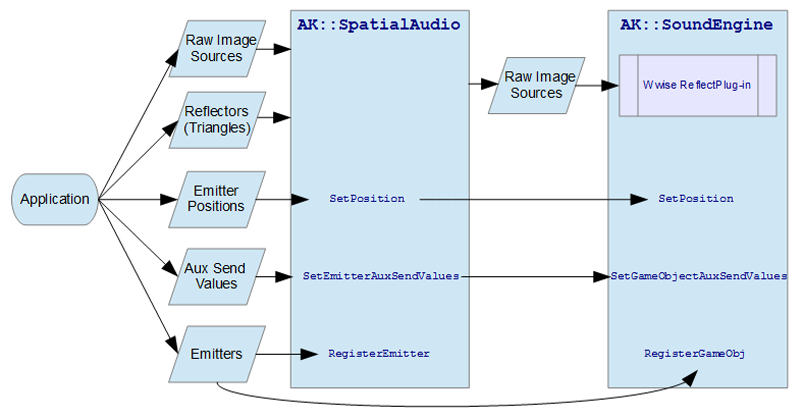

On the other hand, Wwise Reflect should run in parallel with the aux busses used for the late reverberation. The figure below shows a typical bus structure, where the three Auxiliary Busses under the EarlyReflections bus each contain a different ShareSet of Wwise Reflect. You will note that in this design, we only use a handful of ShareSets for generating early reflections. This is motivated by the fact that the "spatial aspect" of this Effect is driven by the game geometry at run-time. We only use different ShareSets here because we want different attenuation curves for sounds emitted by the player (listener) than those emitted by other objects.

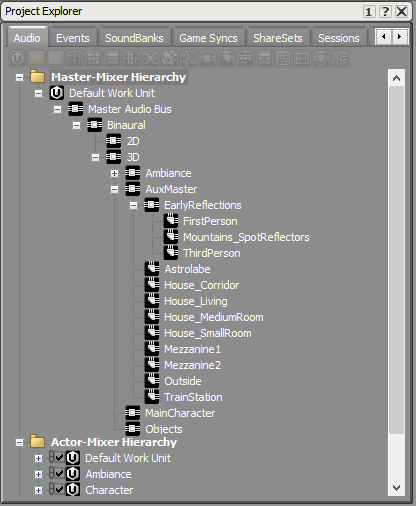

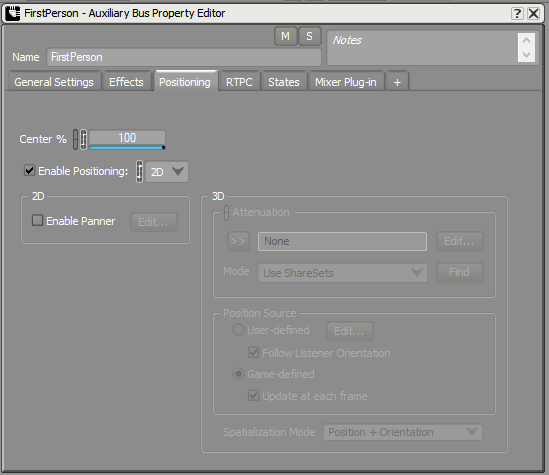

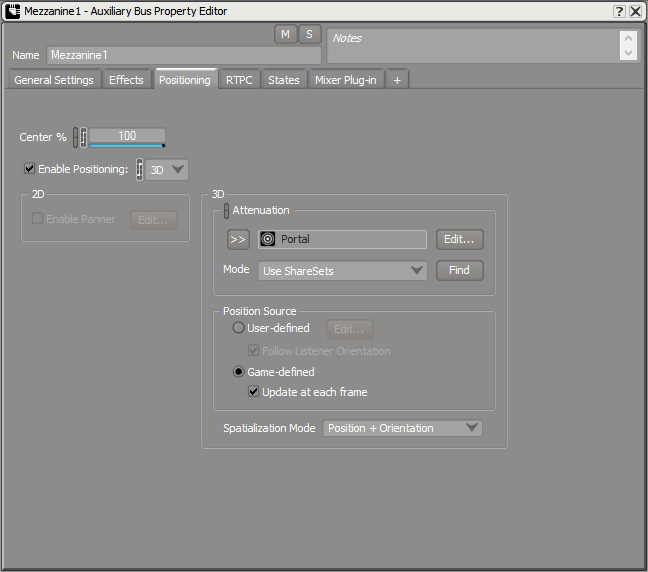

The ER bus (hosting Wwise Reflect) will exist in as many instances as there are emitters for which you want early reflections to be generated. This is done by registering these emitters with the Spatial Audio API and sending them to this bus, as was described in the previous section. In order for this to work properly, you need to enable the Positioning check box for this bus, as shown in the image below. By doing this, the signal generated by the various instances of the ER bus will be properly mixed into a single instance of the next mixing bus, downstream. This single instance corresponds to the game object that is listening to this emitter (set via AK::SoundEngine::SetListeners), which is typically the final listener, that corresponds to the player (or camera).

Warning: Although the ER bus's positioning must be enabled in order to ensure that all its emitter-instances merge into the listener's busses, the positioning type must be set to 2D, not 3D, to avoid "double 3D positioning" by Wwise. See the documentation of Wwise Reflect for more details.

Also, by virtue of the game object (emitter) sending to the Auxiliary Bus used to process the late reverberation, a connection will also be made between the ER bus and the late reverb bus. This is usually desirable because the generated ER are then utilized to color and "densify" the late reverb. In order to enable this, you need to make sure you enable the Use game-defined auxiliary sends check box on the ER bus. You may then use the Volume slider below to balance the amount of early reflections you want to send to the late reverb against the direct sound.

The following figure is a run-time illustration of the previous discussion.

For each reflecting triangle, the game passes the ID of a material. These materials are edited in the Wwise Project in the form of Acoustic Textures, in the Virtual Acoustics ShareSets. This is where you may define the absorption characteristics of each material.

While Wwise Reflect may be used and controlled by the game directly using AK::SoundEngine::SendPluginCustomGameData, Spatial Audio makes its use easier by providing convenient per-emitter bookkeeping, as well as packaging of image sources. Also, it lets you mix and match "raw" image sources with surface reflectors (potentially on the same target bus/plug-in).

Call AK::SpatialAudio::SetImageSource for each image source. Target the bus ID and optional game object ID (note that the game object ID may also be a listener or the main listener). Refer to AkReflectImageSource for more details on how to describe an image source.

Image sources may be provided to Reflect by game engines that already implement this functionality, via ray-casting or their own image-source algorithm, for example.

The Wwise project setup is the same as the one described above for Using the Geometry API for Simulating Early Reflections. You can also refer to the Wwise Reflect documentation in the Wwise Help for an example design of Reflect on FPS sound.

The Spatial Audio module exposes a simple, high-level geometry abstraction called Rooms and Portals, which allows it to efficiently model sound propagation of emitters located in other rooms. The main features of room-driven sound propagation are diffraction and coupling and spatialization of reverbs. It does so by leveraging the tools at the disposal of the sound designer in Wwise, giving them full control of the resulting transformations to audio. Furthermore, it allows you to restrict game engine-driven raycast-based obstruction, which is highly game engine-specific, and typically costly in terms of performance, to emitters that are in the same room as the listener.

Rooms are dimensionless and are connected with one another by Portals, which together form a network of rooms and apertures by which sound emitted in other rooms may reach the listener. Spatial Audio uses this network to modify the distance traveled by the dry signal, the apparent incident position, and the diffraction angle. The diffraction angle is mapped to obstruction and/or to a built-in game parameter called Diffraction, which designers may bind to properties (such as volume and low-pass filtering) using RTPC. Spatial Audio also positions adjacent rooms' reverberation at their portals, and permits coupling of these reverbs into the listener's room reverb, using 3D busses. Lastly, rooms have an orientation, which means that inside rooms the diffuse field produced by the associated reverb is rotated prior to reaching the listener, tying it to the game's geometry instead of the listener's head.

As with all Spatial Audio services, you need to register emitters and the listener with AK::SpatialAudio::RegisterEmitter and AK::SpatialAudio::RegisterListener after having registered the corresponding game objects to the sound engine (see AK::SoundEngine::RegisterGameObj). The only emitter setting that pertains to Rooms and Portals is AkEmitterSettings::roomReverbAuxBusGain, which gives you per-emitter control of the send level to aux busses.

You need to create Rooms and Portals, based on the geometry of your map or level, with AK::SpatialAudio::SetRoom and AK::SpatialAudio::SetPortal. Rooms and Portals have settings that you may change at run-time by calling these functions again with the same ID. Then the game calls AK::SpatialAudio::SetGameObjectInRoom for each emitter and the listener to tell Spatial Audio in what room they are. From the point of view of Spatial Audio, Rooms don't have a defined position, shape, or size. They can thus be of any shape, but it is the responsibility of the game engine to perform containment tests to determine in which Room the objects are.
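Because containment tests are left to the game, a game-side lookup might look like the following minimal sketch. It assumes each Room is approximated by one axis-aligned box, which is only one of many possible representations. The types `RoomVolume` and `Point`, the function `findRoom`, and the `kNoRoom` sentinel are hypothetical names for this illustration and do not exist in the Wwise SDK.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical game-side types; Spatial Audio itself has no notion of Room shape.
using RoomId = std::uint64_t;
const RoomId kNoRoom = ~static_cast<RoomId>(0);  // sentinel chosen by this sketch

struct Point { float x, y, z; };

// One axis-aligned box per Room (an assumption of this sketch).
struct RoomVolume {
    RoomId id;
    Point lo;  // minimum corner
    Point hi;  // maximum corner
};

inline bool contains(const RoomVolume& v, Point p) {
    assert(v.lo.x <= v.hi.x && v.lo.y <= v.hi.y && v.lo.z <= v.hi.z);
    return p.x >= v.lo.x && p.x <= v.hi.x &&
           p.y >= v.lo.y && p.y <= v.hi.y &&
           p.z >= v.lo.z && p.z <= v.hi.z;
}

// Returns the ID of the first volume that contains p, or kNoRoom if none does.
inline RoomId findRoom(const std::vector<RoomVolume>& volumes, Point p) {
    for (const RoomVolume& v : volumes) {
        if (contains(v, p)) return v.id;
    }
    return kNoRoom;
}
```

The ID found this way is what the game would then report for each emitter and the listener through AK::SpatialAudio::SetGameObjectInRoom. How a position outside every volume should be handled is a game-side decision that this sketch leaves open.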

Warning: Beware of Room IDs. They share the same scope as game objects, so make sure that you never use an ID that is already used as a game object.

Warning: Under the hood, Spatial Audio registers a game object to Wwise for each Room. This game object should not be used explicitly in calls to AK::SoundEngine.

The most important Room setting is AkRoomParams::ReverbAuxBus, which tells Spatial Audio to which auxiliary bus emitters should send when they are in that Room. Other settings will be discussed in sections below (see Using 3D Reverbs and Transmission).

Portals represent openings between two Rooms. Contrary to Rooms, Portals have position and size, so Spatial Audio can perform containment tests itself. Portal size is given by the Portal setting AkPortalParams::Extent. Width and height (X and Y) are used by Spatial Audio to compute diffraction angle and spread, while depth (Z) defines a region in which Spatial Audio performs a smooth transition between the two connected Rooms by carefully manipulating the auxiliary send levels, Room object placement, and Spread (3D positioning). Refer to sections Using 3D Reverbs and About Game Objects below for more details. Additionally, Portals may be enabled (open) or disabled (closed) using the AkPortalParams::bEnabled Portal setting.

When using any Spatial Audio services for a given emitter, you should use AK::SpatialAudio::SetPosition instead of AK::SoundEngine::SetPosition. Incidentally, you cannot use AK::SoundEngine::SetMultiplePositions with a Spatial Audio emitter because Spatial Audio relies on raw multi-positioning internally. However, there is no problem with using AK::SoundEngine::SetMultiplePositions on game objects that are not used with Spatial Audio. AK::SoundEngine::SetMultiplePositions should be regarded as a low-level positioning feature: if you use it, you are responsible for managing its environments yourself. Likewise, you should never use AK::SoundEngine::SetGameObjectAuxSendValues with Spatial Audio emitters. However, you may use AK::SpatialAudio::SetEmitterAuxSendValues with Spatial Audio emitters that are in Rooms. See section Implementing Complex Room Reverberation for more details.

Additionally, you should not use AK::SoundEngine::SetObjectObstructionAndOcclusion or AK::SoundEngine::SetMultipleObstructionAndOcclusion with Spatial Audio emitters. Spatial Audio manages obstruction/occlusion for you when emitters are in other Rooms, via the more accurate concepts of diffraction and transmission. In-room obstruction needs to be set via AK::SpatialAudio::SetEmitterObstruction and AK::SpatialAudio::SetPortalObstruction. Refer to section Modeling Sound Propagation from Other Rooms and Modeling Sound Propagation from the Same Room for more details on obstruction and occlusion and how to use them with Spatial Audio Rooms and Portals.

The Integration Demo sample (in SDK/samples/IntegrationDemo) has a demo page which shows how to use the API. Look for Demo Positioning > Spatial Audio: Portals.

The following paragraphs provide an overview of the fundamental Spatial Audio concepts for Rooms and Portals:

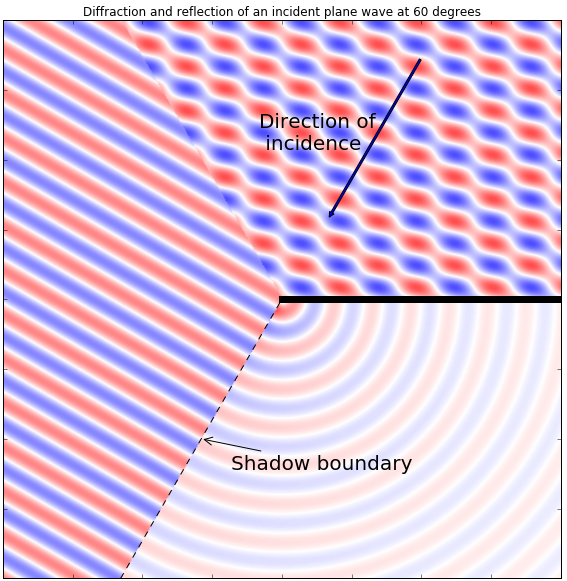

Diffraction occurs when a sound wave strikes a small obstacle, or when it strikes the edge of a large obstacle or opening, and bends around it. It represents sound that propagates through openings (portals) and towards the sides, meaning that a listener does not need to be directly in front of the opening to hear it. Diffraction is usually very important in games because it gives a hint to players about paths that exist between sound emitters and them. The following figure is a sound field plot of a plane wave coming from the top right and hitting a finite surface (the black line) that starts in the center of the figure. The perturbation caused by this edge is called diffraction. The region on the left is the View Region, where the plane wave passes through unaltered. The region on the top right is the Reflection Region, where reflection with the surface occurs and is mixed with the incident wave, resulting in this jagged pattern. The region on the lower right is the Shadow Region, where diffraction plays a significant role. This figure is just a coarse approximation; in real life the field is continuous at the region boundaries, and edge diffraction occurs in the View Region as well, although it is generally negligible compared to the incident wave itself.

We see that the edge can be considered as a point source, with amplitude decreasing with distance. Also, the amplitude of higher frequencies decreases faster than that of lower frequencies, which means that it can be adequately modeled with a low-pass filter. Refer to Diffraction for more details on how Spatial Audio Rooms and Portals lets you model portal diffraction.

Another relevant acoustic phenomenon is the absorption of sound by obstacles and its reciprocal, transmission, which is the proportion of energy that passes through an obstacle. Typically, the proportion of transmitted energy is negligible compared to the energy reaching the listener from diffraction, when an opening exists nearby.

Sound emitters excite the environment in which they are. In time, this produces a diffuse field that depends on the environment's acoustical properties. In games, this is typically implemented using reverb effects with parameters that are tweaked to represent the environment with which they are associated. Diffuse fields also make their way across openings (and walls) and reach the listener.

Obstruction represents a broad range of acoustical phenomena, and refers to anything that happens when a sound wave strikes an obstacle. Occlusion is similar but implies that sound cannot find its way around an obstacle. The Wwise sound engine lets games set Obstruction and Occlusion values on game objects, which are mapped to a global set of volume, low-pass filter, and high-pass filter curves. The difference between the two is that Obstruction affects only the dry/direct signal between an Actor-Mixer or bus and its output bus, whereas Occlusion also affects the auxiliary sends. Obstruction, therefore, better emulates obstruction by obstacles when emitter and listener are in the same room, whereas Occlusion is better to model transmission through closed walls.
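The difference between the two can be sketched as follows. This is a deliberately simplified model, assuming that the project-wide curves have already been evaluated to plain linear gains and ignoring the low-pass and high-pass filters. `PathGains` and `applyObstructionOcclusion` are hypothetical names for this illustration, not Wwise SDK symbols.

```cpp
#include <cassert>

// Linear gains on the two signal paths of an emitter (hypothetical type).
struct PathGains {
    float dry;  // direct path to the output bus
    float wet;  // auxiliary send path
};

// obstructionGain and occlusionGain are linear gains in [0, 1] that the
// project-wide curves are assumed to have produced; 1 means no attenuation.
inline PathGains applyObstructionOcclusion(float dryGain, float sendGain,
                                           float obstructionGain, float occlusionGain) {
    assert(obstructionGain >= 0.0f && obstructionGain <= 1.0f);
    assert(occlusionGain >= 0.0f && occlusionGain <= 1.0f);
    PathGains result;
    result.dry = dryGain * obstructionGain * occlusionGain;  // both apply to the dry path
    result.wet = sendGain * occlusionGain;                   // only Occlusion reaches the sends
    return result;
}
```

With an obstruction gain of 0.5 and no occlusion, only the dry path is halved and the reverb send is untouched, which matches the same-room case described above.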

In the context of Spatial Audio, sound propagation from other rooms is entirely managed by the Rooms and Portals abstraction. Rooms with no open Portals will utilize Wwise Occlusion to model transmission, while Rooms with at least one propagation path to the listener via open Portals will use either Obstruction or the Diffraction built-in game parameter. For same-room obstruction, games are invited to implement their own algorithm based on the geometry or raycasting services they have at their disposal and the desired level of detail. However, they need to use AK::SpatialAudio::SetEmitterObstruction and AK::SpatialAudio::SetPortalObstruction so that it works well with diffraction. Refer to section Modeling Sound Propagation from the Same Room for more detail on how to use same-room obstruction with Spatial Audio.

The table below summarizes the features of Spatial Audio Rooms and Portals by grouping them in terms of acoustic phenomena, describing what Spatial Audio does for each, and how sound designers can incorporate them in their project.

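| Acoustic Phenomenon | Spatial Audio | Sound Design in Wwise |
|---|---|---|
| Diffraction of direct path | Diffraction angle mapped to Obstruction or to the Diffraction built-in game parameter; modified traveled distance and apparent incident position | Project-wide obstruction curves, or RTPC on Output Bus Volume and Output Bus LPF of the Actor-Mixer |
| Diffuse field (reverb) | Send to the Room's Auxiliary Bus (AkRoomParams::ReverbAuxBus) | Reverb, bus volume and game-defined send offset on Actor-Mixer |
| Room coupling: reverb spatialization and diffraction of adjacent room's diffuse field | Room reverb positioned at the Portal with computed Spread; wet diffraction mapped to Obstruction or to the Diffraction built-in game parameter; send to the reverb of the listener's Room | 3D positioning and game-defined sends enabled on the Room's Auxiliary Bus; project-wide obstruction curves, or RTPC on the bus's Output Bus Volume and Output Bus LPF |
| Transmission (only when no active portal) | Occlusion | Volume or filtering on Actor-Mixer |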

The Auxiliary Bus design used with Spatial Audio Rooms and Portals is not fundamentally different from the traditional modeling of environments. It requires that an Auxiliary Bus be assigned for each Room, mounted with the reverb Effect of the designer's choice, and it is the same bus that is used whether the listener is inside or outside the Room. The only difference is that it should be made 3D by enabling positioning (in the Positioning tab) and setting the Positioning Type to 3D, as shown in the figure below. This way, Spatial Audio may spatialize the reverberation of adjacent Rooms at the location of their Portal by acting on the Rooms' underlying game object position and Spread.

The Room's reference orientation is defined in the room settings (AkRoomParams::Up and AkRoomParams::Front), and never changes. The corresponding game object's orientation is made equal to the Room's orientation. When the listener is in a Room, the bus's Spread is set to 100 (360 degrees) by Spatial Audio. Thanks to 3D positioning, the output of the reverb is rotated and panned into the parent bus based on the relative orientation of the listener and the Room. This happens because the Auxiliary Bus is tied to the Room's game object, while its parent bus is tied to the listener. The screenshot below shows an emitter, the radio, sending to the Auxiliary Bus Mezzanine2. You can see that a separate game object has been created for this room, Ak_RV_Mezzanine, that is neither the Radio nor the listener (PlayerCameraManager_0).

If, for example, there is a spatialized early reflection pattern "baked" into the reverb (such patterns exist explicitly in the Wwise RoomVerb's ER section, and implicitly in multichannel IR recordings used in the Wwise Convolution Reverb), then it will be tied to the Room instead of following the listener as it turns around. This is desirable for proper immersion. On the other hand, it is preferable to favor configurations that "rotate well". Ambisonic configurations are invariant to rotation, so they are favorable. Standard configurations (4.0, 5.1, and so on) are less so. When using standard configurations, it is better to opt for those without a center channel, to use identical configurations for aux busses and their parent, and to set Focus to 100. In these conditions, with a 4.0 reverb oriented with the Room towards the north, a listener looking at the north would hear the reverb exactly as if it were "2D" (direct speaker assignment). A listener looking straight east, west, or south would hear the original reverb but with channels swapped. Finally, a listener looking anywhere in between would hear each channel of the original reverb being mixed into a pair of output channels.
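The channel swapping for the 4.0 case can be illustrated with a small sketch. It assumes an idealized square layout whose channels are ordered front-left, front-right, rear-right, rear-left, a Room oriented towards the north, and a listener whose heading is an exact multiple of 90 degrees. The function `rotateQuadReverb` is a hypothetical name; the sketch only illustrates the swapping described above and does not reproduce the actual panning performed by Wwise.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Channel order assumed by this sketch (clockwise, seen from above):
// 0 = front-left, 1 = front-right, 2 = rear-right, 3 = rear-left.
//
// listenerQuarterTurnsClockwise: 0 = facing north (same as the Room),
// 1 = east, 2 = south, 3 (or -1) = west.
inline std::array<float, 4> rotateQuadReverb(const std::array<float, 4>& roomChannels,
                                             int listenerQuarterTurnsClockwise) {
    const int q = ((listenerQuarterTurnsClockwise % 4) + 4) % 4;
    assert(q >= 0 && q < 4);
    std::array<float, 4> listenerChannels;
    for (int j = 0; j < 4; ++j) {
        // The listener's speaker j points at the Room direction that is
        // q quarter turns further clockwise.
        listenerChannels[static_cast<std::size_t>(j)] =
            roomChannels[static_cast<std::size_t>((j + q) % 4)];
    }
    return listenerChannels;
}
```

For a listener facing east, the Room's front-right channel ends up in the listener's front-left speaker, and so on around the square. Headings in between would require mixing each Room channel into a pair of output channels, which this sketch does not attempt.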

When the listener is away from a room's Portal, Spatial Audio reduces the spread according to the Portal's extent, which seamlessly contracts the reverb's output to a point source as it gets farther away. The Spread is set to 50 (180 degrees) when the listener is midway into the Portal. And, as it penetrates into the room, the Spread is increased even more with the "opening" being carefully kept towards the direction of the nearest Portal.

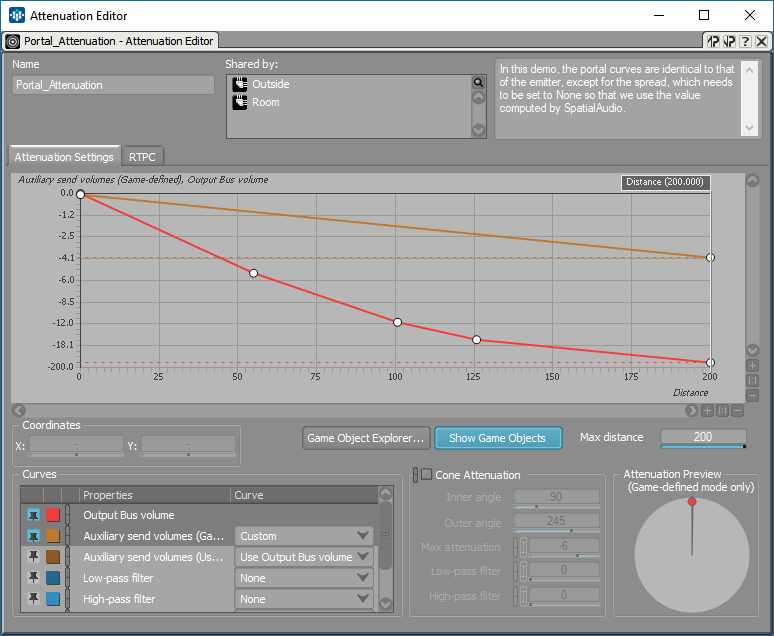

The adjacent Room's reverberation should be regarded as a source located at the Portal, and you may use an Attenuation ShareSet in the Auxiliary Bus's Positioning tab to determine the behavior with respect to distance from the listener. If you select the Enable Game-Defined Sends check box on this bus, you allow it to be further sent to the reverb of the listener's Room (see figure below). You may tweak the amount that is sent to the listener's reverb with the Game-Defined Send Offset, and with distance using the Game-Defined Attenuation. The ShareSet used in the example below is called "Portal" because it is designed according to the behavior of the Rooms' Portals: as the listener moves away from a Portal, it becomes a point source. At distance zero, the listener has completely crossed the Portal and is inside the Room.

Warning: Note that in order to let Spatial Audio modify the Spread according to the Portal's geometry, you must not have a Spread curve in your Auxiliary Busses' attenuation settings. Using a Spread curve there would override the value computed by Spatial Audio.

Inside Rooms, the Room game object position is maintained by Spatial Audio at the same location as the listener, so the attenuation curves are all evaluated at distance 0.

Spatial Audio Rooms and Portals work by manipulating the position of the game objects known to the Wwise sound engine (emitters registered by the game and Room game objects registered by Spatial Audio), and some of their inherent properties, like game-defined sends, obstruction, and occlusion.

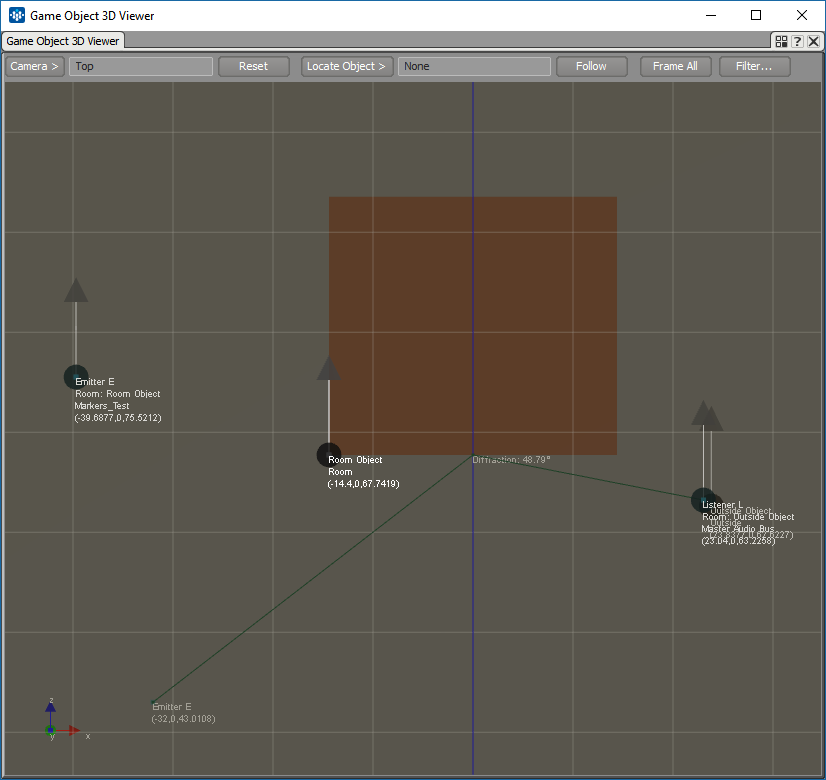

When Spatial Audio's initialization setting DiffractionFlags_CalcEmitterVirtualPosition is set, the position of the emitters located in Rooms that are adjacent to the listener is modified so that they appear to come from the diffracted angle, if applicable. In the screenshot of the 3D Game Object Profiler, below, the listener (Listener L) is on the right of the Portal and the "real" emitter is on the lower left (Emitter E, without orientation vectors), resulting in a diffraction angle of 48 degrees. Spatial Audio thus repositions the emitter to the upper left, so that the apparent position seems to come from the corner, all while respecting the traveled distance.

When there are multiple Portals connecting two Rooms, Spatial Audio may assign multiple positions to an emitter (one per Portal). The MultiPosition_MultiDirection mode is used, so that enabling or disabling a Portal does not affect the perceived volume of other Portals.

Warning: Note that you cannot use multipositions with Spatial Audio emitters. Only Spatial Audio can, under the hood.

Spatial Audio registers one game object to Wwise per Room, under the hood.

Warning: This game object should not be used directly.

When the listener is in a Room, the Room's game object is moved such that it follows the listener. Thus, the distance between the Room and the Listener object is approximately 0. However, its orientation is maintained to that which is specified in the Room settings (AkRoomParams). See Using 3D Reverbs for a discussion on the orientation of 3D busses.

When the listener is outside of a Room, that Room's game object adopts the position(s) of its Portal(s). More precisely, it is placed in the back of the Portals, at the location of the projection of the listener to the Portals' tangent, clamped to the Portals' extent. This can be verified by looking at the Room's game object, as seen in the screenshot of the 3D Game Object Profiler, above, in section Emitters.
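The "projection clamped to the Portal's extent" can be sketched as below. The sketch assumes the Portal is described by a center, two unit axes spanning its opening, and half-extents along those axes; whether AkPortalParams::Extent stores full or half sizes is not stated here, so that is an assumption. It also ignores the offset towards the back of the Portal. `PortalFrame` and `clampedProjectionOnPortal` are hypothetical names, not Wwise SDK symbols.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper types for illustration; these are not Wwise SDK types.
struct Vec3 { float x, y, z; };

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(Vec3 v, float s) { return {v.x * s, v.y * s, v.z * s}; }
inline float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

struct PortalFrame {
    Vec3 center;
    Vec3 right;        // unit vector along the Portal's width
    Vec3 up;           // unit vector along the Portal's height
    float halfWidth;   // half-extents are an assumption of this sketch
    float halfHeight;
};

// Projects the listener onto the Portal's opening plane and clamps the
// result so that it stays inside the opening.
inline Vec3 clampedProjectionOnPortal(const PortalFrame& portal, Vec3 listener) {
    assert(portal.halfWidth >= 0.0f && portal.halfHeight >= 0.0f);
    const Vec3 offset = listener - portal.center;
    const float u = std::max(-portal.halfWidth,
                             std::min(portal.halfWidth, dot(offset, portal.right)));
    const float v = std::max(-portal.halfHeight,
                             std::min(portal.halfHeight, dot(offset, portal.up)));
    return portal.center + portal.right * u + portal.up * v;
}
```

A listener standing far to the side of the opening therefore maps to the nearest point on the Portal's border, while a listener in front of the opening maps to the point directly facing it.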

For multiple Portals, a Room's game object is assigned multiple positions, in MultiPosition_MultiDirection mode, for the same reason as with emitters.

When transitioning inside a Portal, the "in-Room" and "Portal" behaviors are interpolated smoothly.

With Spatial Audio Rooms and Portals, sound propagation in Rooms other than that of the listener is managed by the Rooms and Portals abstraction. An emitter in another Room reaches the listener via Portals, and their associated diffraction, or, if no such path exists, via transmission through rooms' "walls".

For each emitter in adjacent Rooms, Spatial Audio computes a diffraction angle from the Shadow Boundary, from the closest edge of the connecting Portal. See Diffraction, above. This diffraction angle is given to the Wwise user for driving corresponding audio transformations, by one of two means. It can set the Obstruction value on the emitter game object, or set the value of a built-in game parameter, Diffraction. Whether Spatial Audio does one or the other, or both, depends on the choice of AkDiffractionFlags with which you initialize it.
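As a geometric illustration, the angle past the Shadow Boundary can be expressed as the angle between the emitter-to-edge direction (extended beyond the edge) and the edge-to-listener direction. The following minimal sketch computes it for a known edge point. It assumes the listener is in the Shadow Region, so that the only path goes around the edge, and it does not show how Spatial Audio selects the closest Portal edge. `Vec3` and `diffractionAngleDegrees` are hypothetical names, not Wwise SDK symbols.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper types for illustration; these are not Wwise SDK types.
struct Vec3 { double x, y, z; };

inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline double length(Vec3 v) { return std::sqrt(dot(v, v)); }

// Angle, in degrees, by which the path bends at edgePoint.
// 0 means the listener sits exactly on the Shadow Boundary (straight line);
// 180 means the path folds back on itself.
inline double diffractionAngleDegrees(Vec3 emitter, Vec3 edgePoint, Vec3 listener) {
    const double kPi = 3.14159265358979323846;
    const Vec3 incoming = edgePoint - emitter;
    const Vec3 outgoing = listener - edgePoint;
    const double lengths = length(incoming) * length(outgoing);
    assert(lengths > 0.0 && "emitter and listener must not sit on the edge point");
    const double cosine = std::max(-1.0, std::min(1.0, dot(incoming, outgoing) / lengths));
    return std::acos(cosine) * 180.0 / kPi;
}
```

An emitter, edge, and listener that are aligned yield 0 degrees, and a listener located at a right angle around the corner yields 90 degrees.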

As seen above, the diffraction angle ranges from 0 to 180 degrees. To use the Diffraction built-in parameter, you need to create a game parameter and set its Bind to Built-in Parameter drop-down menu to Diffraction. Values pushed to this game parameter are scoped by game object, so they are unique for each emitter. You may then use it with an RTPC to control any property of your Actor-Mixer. The most sensible choice is the Output Bus Volume and Output Bus LPF, to emulate the frequency-dependent behavior of diffraction. Output Bus Volume and LPF are privileged over the base Volume and LPF because they should apply to the direct signal path only, and not to the auxiliary send to the Room's reverb.

Rooms' diffuse energy is also included in the sound propagation model of Spatial Audio as the output of Rooms' Auxiliary Busses. Spatial Audio computes diffraction for this too ("wet diffraction"). Spatial Audio assumes that the diffuse energy leaks out of a Room perpendicular to its Portals. Thus, it computes a diffraction angle relative to the Portal's normal vector. This diffraction value can be used in Wwise exactly like with emitters' dry path. When using the built-in game parameter, it should be used with an RTPC on the room's auxiliary bus, typically on the bus's Output Bus Volume and Output Bus LPF. The bus's Output Bus Volume property should be favored over the Bus Volume property for the same reason as with Actor-Mixers: it should not affect the auxiliary send path that is used for coupling this reverb to the reverb of the listener's room.

Alternatively, users can use built-in, project-wide obstruction for modifying audio from Spatial Audio's diffraction. When doing so, Spatial Audio scales the [0,180] range of Diffraction to the [0,100] range expected by obstruction. Compared to the Diffraction built-in game parameter, project-wide obstruction is mapped to curves that are global to a Wwise Project. They can be authored in the Project Settings. Obstruction Volume, LPF, and HPF are effectively applied on Output Bus Volume, LPF, and HPF, as discussed above. Because the obstruction curves are global, project-wide obstruction is less flexible than the Diffraction built-in game parameter. On the other hand, they require less manipulation and editing (using RTPCs). Also, different Obstruction values apply to each position of a game object, whereas built-in game parameters may only apply a single value for all positions of a game object. (When multiple values are set, the smallest is taken.) Recall that multiple game object positions are used when a Room has more than one Portal.
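The two mappings described above can be sketched as follows, assuming the scaling from [0,180] to [0,100] is linear, which the text suggests but does not state explicitly. `diffractionToObstruction` and `sharedDiffractionValue` are hypothetical names for this illustration and are not Wwise SDK functions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Scales a diffraction angle in degrees, [0, 180], to the [0, 100] range
// expected by obstruction. A linear mapping is assumed.
inline float diffractionToObstruction(float diffractionDegrees) {
    const float clamped = std::max(0.0f, std::min(180.0f, diffractionDegrees));
    return clamped * 100.0f / 180.0f;
}

// A built-in game parameter holds one value per game object, so when a game
// object has several positions (one per Portal), the smallest angle is used.
inline float sharedDiffractionValue(const std::vector<float>& perPositionDegrees) {
    assert(!perPositionDegrees.empty());
    return *std::min_element(perPositionDegrees.begin(), perPositionDegrees.end());
}
```

With Obstruction, each position keeps its own scaled value. With the built-in game parameter, a Room reached through Portals at 48, 120, and 30 degrees would be driven by the single value 30.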

When no propagation path with active Portals exists between an emitter and the listener, Spatial Audio falls back to model transmission (through walls), and does it using the emitter game object's Occlusion. The Occlusion value is taken from the Room settings' AkRoomParams::WallOcclusion; the maximum occlusion value is taken between the listener's Room and the emitter's Room. Occlusion maps to volume, LPF, and HPF via global, project-wide curves, defined in the Obstruction/Occlusion tab of the Project Settings. As opposed to Obstruction, Occlusion also affects the signal sent to auxiliary busses, so the contribution of an occluded emitter to its Room's reverb, and any coupled reverb, will be scaled and filtered according to the Occlusion curves. Occlusion thus properly models absorption (transmission).
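The fallback rule can be summarized in a few lines. This is a sketch of the decision as described above, not of the SDK's code: `DryPathTreatment` and `resolveCrossRoomPath` are hypothetical names, and the two occlusion arguments stand for the AkRoomParams::WallOcclusion values of the listener's and the emitter's Rooms.

```cpp
#include <algorithm>
#include <cassert>

// Outcome for an emitter located in a different Room than the listener
// (hypothetical type for this sketch).
struct DryPathTreatment {
    bool usesTransmission;  // true: Occlusion models transmission through walls
    float occlusion;        // meaningful only when usesTransmission is true
};

inline DryPathTreatment resolveCrossRoomPath(bool hasOpenPortalPath,
                                             float listenerRoomWallOcclusion,
                                             float emitterRoomWallOcclusion) {
    assert(listenerRoomWallOcclusion >= 0.0f && emitterRoomWallOcclusion >= 0.0f);
    if (hasOpenPortalPath) {
        // A path through active Portals exists: diffraction is used instead.
        return {false, 0.0f};
    }
    // No path: take the larger wall occlusion of the two Rooms.
    return {true, std::max(listenerRoomWallOcclusion, emitterRoomWallOcclusion)};
}
```

The either-or structure reflects the fact that diffraction and transmission are not modeled in parallel for the same emitter.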

Note: Spatial Audio cannot model diffraction and transmission at the same time, in parallel, due to limitations in the Wwise sound engine. However, the energy transmitted through a wall is typically negligible compared to the energy diffracted through an open door.

The diffuse energy of adjacent rooms penetrating into the listener's room through Portals can be seen as sources located at these Portals and, as such, they should also contribute to exciting the listener's room. In other words, they should send to the Auxiliary Bus of the listener's Room. As written earlier, you can do this by checking the Enable Game-Defined Sends check box on the adjacent Room's auxiliary bus. You may tweak the amount that is sent to other rooms' reverb with the Game-Defined Send Offset, and with distance using the Game-Defined Attenuation.

Obstruction of sound emitted from the same room as that of the listener is not covered by Spatial Audio Rooms and Portals, and is therefore deferred to the game. The representation of geometry, methods, and the desired level of details for computing in-room obstruction is highly game-engine specific. Games typically employ ray-casting, with various degrees of sophistication, to carry out this task.

With Spatial Audio Rooms and Portals, though, you don't need to do this for all emitters, but only for those that are in the same Room as the listener. This is beneficial because ray-casting is usually much more expensive than the algorithm used by Spatial Audio for computing propagation paths. Since in-room obstruction between an emitter and the listener happens in the same room, by definition, we assume that the obstacle will not cover the listener or emitter entirely, and that the sound will reach the listener through its reflections in the room. This can be properly modeled by affecting the dry/direct signal path only, and not the auxiliary send, which means that Obstruction is the proper mechanism. For this purpose, the game should call AK::SpatialAudio::SetEmitterObstruction instead of AK::SoundEngine::SetObjectObstructionAndOcclusion. This is the only way to let Spatial Audio know that there is in-room obstruction between the emitter and listener, so that it can incorporate it in its calculations without interfering with its own use of Obstruction and Occlusion for other purposes (diffraction and transmission).

Furthermore, Portals to adjacent rooms should be considered like sound emitters in the listener's Room. Therefore, games should also run their obstruction algorithms between the listener and the Portals of the Room in which it is. Then, they need to call AK::SpatialAudio::SetPortalObstruction for each of these portals in order to safely declare in-room obstruction with the listener.

Warning: You should never call AK::SoundEngine::SetObjectObstructionAndOcclusion or AK::SoundEngine::SetMultipleObstructionAndOcclusion for game objects that are registered as Spatial Audio emitters.

Sound propagation also works across multiple rooms. The Room tree is searched within SpatialAudio when looking for propagation paths. Circular connections are avoided by stopping the search when Rooms have already been visited. The search depth may be limited by Spatial Audio's initialization setting AkSpatialAudioInitSettings::uMaxSoundPropagationDepth (default is 8).
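A search of this kind can be sketched as a breadth-first traversal with a visited set and a depth limit. The sketch assumes that depth is counted in Portals crossed and that only the shortest route matters; the actual search in Spatial Audio may differ on both points. `RoomGraph`, `PortalLink`, and `portalsCrossedToReach` are hypothetical names, not Wwise SDK symbols.

```cpp
#include <cassert>
#include <cstdint>
#include <queue>
#include <unordered_map>
#include <unordered_set>
#include <utility>
#include <vector>

// Hypothetical graph representation for this sketch.
using RoomId = std::uint64_t;
struct PortalLink {
    RoomId to;     // Room on the other side of the Portal
    bool enabled;  // closed Portals are not traversed
};
using RoomGraph = std::unordered_map<RoomId, std::vector<PortalLink> >;

// Returns the number of Portals crossed on the shortest open route from the
// listener's Room to the emitter's Room, or -1 if none exists within maxDepth.
inline int portalsCrossedToReach(const RoomGraph& graph, RoomId listenerRoom,
                                 RoomId emitterRoom, unsigned maxDepth) {
    if (listenerRoom == emitterRoom) return 0;
    std::unordered_set<RoomId> visited;
    visited.insert(listenerRoom);
    std::queue<std::pair<RoomId, unsigned> > frontier;
    frontier.push(std::make_pair(listenerRoom, 0u));
    while (!frontier.empty()) {
        const RoomId room = frontier.front().first;
        const unsigned depth = frontier.front().second;
        frontier.pop();
        assert(depth <= maxDepth);
        if (depth == maxDepth) continue;  // depth limit reached on this branch
        RoomGraph::const_iterator it = graph.find(room);
        if (it == graph.end()) continue;
        for (const PortalLink& link : it->second) {
            if (!link.enabled) continue;
            // Skipping Rooms that were already visited avoids circular connections.
            if (!visited.insert(link.to).second) continue;
            if (link.to == emitterRoom) return static_cast<int>(depth + 1);
            frontier.push(std::make_pair(link.to, depth + 1));
        }
    }
    return -1;
}
```

Lowering the depth limit trades reach for cost: an emitter three Portals away is simply not found when the limit is two.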

As opposed to AK::SoundEngine::SetGameObjectAuxSendValues which overrides the game object send values and should never be used with Spatial Audio emitters, AK::SpatialAudio::SetEmitterAuxSendValues adds up to the auxiliary sends already set by Spatial Audio. This may be useful when designing complex reverberation within the same room, for example, if there are objects or terrain that call for different environmental effects. A Room's AkRoomParams::ReverbAuxBus can also be left to "none" (AK_INVALID_AUX_ID), so that its send busses are only managed by the game via AK::SpatialAudio::SetEmitterAuxSendValues.
