Introduction

Wiggle Room is an interactive installation that invites children to engage with a dynamic, sound-responsive environment. Exhibited at the Metropolitan Arts Centre (MAC) in Belfast as part of Belfast 2024, the installation combined real-time body tracking, physics-driven visuals, and interactive sound to create an open-ended play experience.

Developed in collaboration with Boom Clap Play, PlayBoard NI, and Big Motive, Wiggle Room was shaped through research and co-design sessions with young participants. The project focused on exploring free play, allowing children to interact intuitively without structured goals or rules.

Designing for Free Play

The core idea behind Wiggle Room was to create a space where sound and movement interact seamlessly. We wanted the audio to feel playful yet immersive, reinforcing the tactile, physics-driven visuals while maintaining a balance between stimulation and a sense of calm.

From an audio perspective, we aimed for a warm and inviting atmosphere that wouldn't become overwhelming, especially for younger participants. The sound design needed to be nuanced and responsive, reinforcing the sense of movement without creating fatigue or excessive density during high-activity periods.

To avoid an overly static soundscape, we introduced short musical cues that were tied to animated characters appearing in the space. These cues added moments of contrast and playfulness while preventing the ambient musical layers from becoming stagnant.

Technical Implementation: Tracking & System Architecture

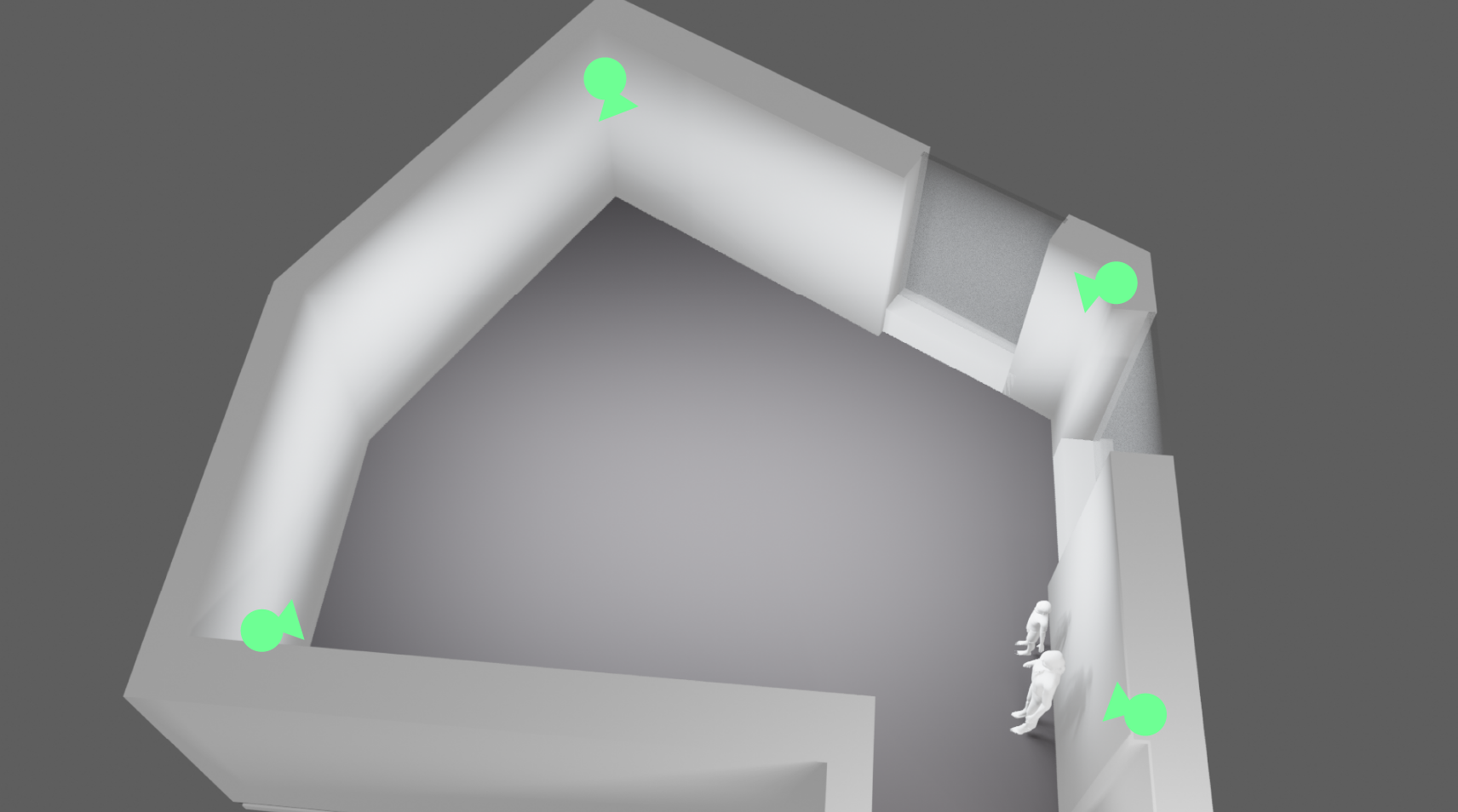

The installation ran in Unity, with Stereolabs ZED 2i cameras handling body tracking. Each camera was connected to a ZED Box Orin AI computer, which configured camera settings and processed joint tracking data before sending it over Ethernet to the host PC.

Room plan with cameras

The tracking system processed data from multiple cameras, averaging skeleton data on the host PC. It handled user detection, tracked entries and exits, and continuously updated joint positions.
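The averaging step above can be sketched in a few lines. This is a minimal illustration rather than the project's Unity code: it assumes every camera already reports joints in one shared, calibrated world frame and that each user carries a consistent ID across cameras, and the names `average_joints` and `UserTracker` are hypothetical.

```python
# Hypothetical sketch: fuse per-camera skeletons on the host PC by averaging joints.
# Assumes a shared, calibrated world frame and consistent user IDs across cameras.
Vec3 = tuple[float, float, float]
Skeleton = dict[str, Vec3]  # joint name -> position


def average_joints(observations: list[Skeleton]) -> Skeleton:
    """Average each joint over every camera that currently reports it."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = {}
    for skeleton in observations:
        for joint, (x, y, z) in skeleton.items():
            acc = sums.setdefault(joint, [0.0, 0.0, 0.0])
            acc[0] += x
            acc[1] += y
            acc[2] += z
            counts[joint] = counts.get(joint, 0) + 1
    return {
        joint: (acc[0] / counts[joint], acc[1] / counts[joint], acc[2] / counts[joint])
        for joint, acc in sums.items()
    }


class UserTracker:
    """Keeps one fused skeleton per user and reports entries and exits each frame."""

    def __init__(self) -> None:
        self.users: dict[int, Skeleton] = {}

    def update(self, frame: dict[int, list[Skeleton]]) -> tuple[set[int], set[int]]:
        # frame maps user ID -> the skeletons each camera reported for that user
        current = set(frame)
        known = set(self.users)
        entered = current - known
        exited = known - current
        for uid in exited:
            del self.users[uid]
        for uid, skeletons in frame.items():
            self.users[uid] = average_joints(skeletons)
        return entered, exited
```

Averaging is the simplest fusion rule; a production system might instead weight each camera by its tracking confidence.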

Within Unity, each user was represented by a custom body object consisting of:

- Limb transform data

- Collision detection points for triggering hotspots

- Particle-based physics behaviour
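A minimal sketch of such a body object follows, written in Python for illustration rather than as the actual Unity component. The class and field names, the choice of the feet as hotspot collision points, and the circular floor-zone test are all assumptions.

```python
from dataclasses import dataclass, field

Vec3 = tuple[float, float, float]


@dataclass
class Particle:
    position: Vec3
    velocity: Vec3 = (0.0, 0.0, 0.0)


@dataclass
class TrackedBody:
    """Hypothetical per-user body: limb transforms, hotspot collision points, particles."""
    user_id: int
    limb_transforms: dict[str, Vec3] = field(default_factory=dict)
    # Joints used as collision points for hotspots (assumed here: the feet)
    hotspot_joints: list[str] = field(default_factory=lambda: ["left_foot", "right_foot"])
    particles: list[Particle] = field(default_factory=list)

    def collision_points(self) -> list[Vec3]:
        """Positions of the hotspot joints that are currently tracked."""
        return [self.limb_transforms[j] for j in self.hotspot_joints if j in self.limb_transforms]

    def is_in_zone(self, center_xz: tuple[float, float], radius: float) -> bool:
        """True if any collision point lies inside a circular floor zone (x/z plane)."""
        cx, cz = center_xz
        return any((x - cx) ** 2 + (z - cz) ** 2 <= radius ** 2
                   for x, _, z in self.collision_points())

    def spawn_particles(self, per_limb: int) -> None:
        """Seed a cluster of particles at every tracked limb position."""
        self.particles = [Particle(pos) for pos in self.limb_transforms.values()
                          for _ in range(per_limb)]
```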

Latency is often a key consideration in interactive projects, but here it was not a significant issue because of the nature of the visual and interactive motion design. The physics-driven particle collisions naturally gave movement a fluid, organic quality that suited the installation’s aesthetic goals and also complemented the responsiveness of the audio.

Wwise Implementation & Sound Design Workflow

Wwise was central to Wiggle Room’s interactive audio system. We structured it around Switches, Events, RTPCs (Real-Time Parameter Controls), and Blend Containers to ensure dynamic, real-time responses to movement and interactions.

Music & Ambience

The music system was built using Switches to progress through different sections over time. To keep the ambient layers light, we implemented a secondary music object dedicated to triggering the short character music cues that added playful moments.
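One way to picture time-based section progression is a sequencer that maps elapsed time to a section name and reports only when the section actually changes, so the matching Switch state is set once per transition rather than every frame. The section names and durations are hypothetical placeholders, and the looping behaviour is an assumption.

```python
# Hypothetical section list: (Switch state name, duration in seconds)
SECTIONS = [("Intro", 60.0), ("Build", 120.0), ("Calm", 90.0)]


def section_at(elapsed: float, sections=SECTIONS) -> str:
    """Return the section that should be active after `elapsed` seconds, looping."""
    cycle = sum(duration for _, duration in sections)
    t = elapsed % cycle
    for name, duration in sections:
        if t < duration:
            return name
        t -= duration
    return sections[-1][0]  # guards against floating-point edge cases


class SectionSequencer:
    """Reports a section name only when it changes, so the Switch is set once."""

    def __init__(self, sections=SECTIONS) -> None:
        self.sections = sections
        self.current = None

    def tick(self, elapsed: float):
        name = section_at(elapsed, self.sections)
        if name != self.current:
            self.current = name
            return name  # caller would set the Wwise Switch state here
        return None
```

Leaving the musical transition itself to the Switch Container's transition rules keeps this timing code simple.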

Particle Impact Sounds

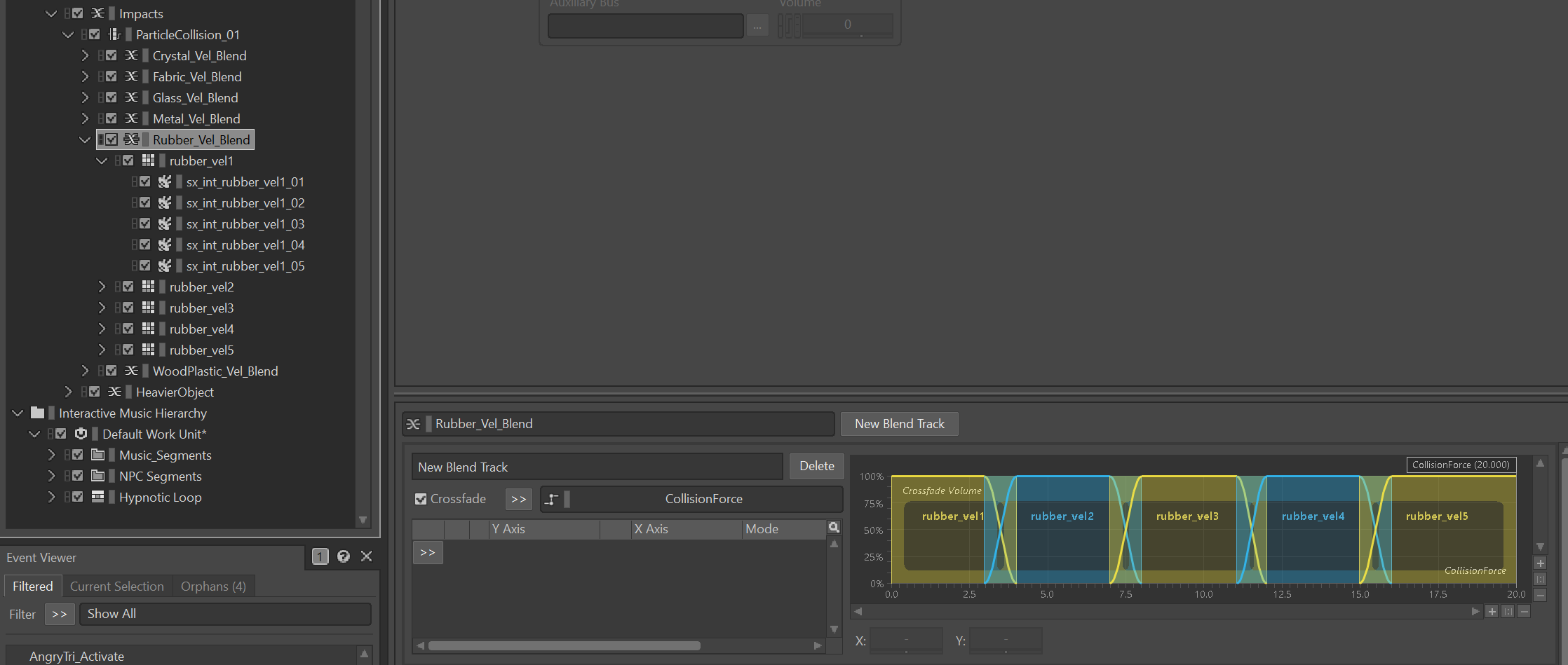

The physics-based particle system played a central role in shaping the installation’s sound design. Given the high volume of particle interactions happening simultaneously, we needed an efficient approach to managing polyphony while ensuring a natural and dynamic response.

To achieve this, we implemented an object pooling system on the Unity side to cap voice polyphony and prevent excessive event triggering. In Wwise, a single impact sound object was structured as a Switch Container, with each particle assigned the appropriate material type. Within this Switch Container, a Blend Container managed five distinct velocity layers, each containing multiple randomised variations to avoid audible repetition. The collision force from the physics system was passed to an RTPC, which drove the Blend Container to select the appropriate velocity layer while also modulating pitch, volume, and filter settings for a more expressive, responsive result.
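The Unity-side half of this pipeline (capping voices and turning collision force into an RTPC value) can be sketched as below. The pool size, force range, minimum-force threshold, voice duration, and the `on_impact` helper are hypothetical. In Wwise, the Blend Container itself crossfades between layers based on the RTPC, so `velocity_layer` only shows which of the five bands a given value falls into.

```python
MAX_VOICES = 24      # hypothetical polyphony cap for impact sounds
NUM_LAYERS = 5       # five velocity layers, as in the Blend Container
MAX_FORCE = 50.0     # hypothetical force that maps to the top of the RTPC range


def force_to_rtpc(force: float, max_force: float = MAX_FORCE) -> float:
    """Scale a physics collision force into a 0-100 RTPC value, clamped."""
    return max(0.0, min(100.0, force / max_force * 100.0))


def velocity_layer(rtpc: float, layers: int = NUM_LAYERS) -> int:
    """Which equal-width velocity band an RTPC value falls into (0-based)."""
    return min(int(rtpc / (100.0 / layers)), layers - 1)


class ImpactVoicePool:
    """Fixed pool of voice slots; impacts are dropped when every slot is busy."""

    def __init__(self, size: int = MAX_VOICES) -> None:
        self.free = list(range(size))
        self.active: dict[int, float] = {}  # voice id -> time the sound ends

    def request(self, now: float, duration: float):
        # Return finished voices to the pool before trying to allocate one
        for vid, end in list(self.active.items()):
            if end <= now:
                del self.active[vid]
                self.free.append(vid)
        if not self.free:
            return None
        vid = self.free.pop()
        self.active[vid] = now + duration
        return vid


def on_impact(pool, now, material, force, duration=0.4, min_force=1.0):
    """Decide whether an impact is posted, and with which Switch and RTPC values."""
    if force < min_force:
        return None  # ignore tiny contacts entirely
    voice = pool.request(now, duration)
    if voice is None:
        return None  # polyphony cap reached
    rtpc = force_to_rtpc(force)
    return {"voice": voice, "material": material,
            "impact_force": rtpc, "layer": velocity_layer(rtpc)}
```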

Hotspot Interactions

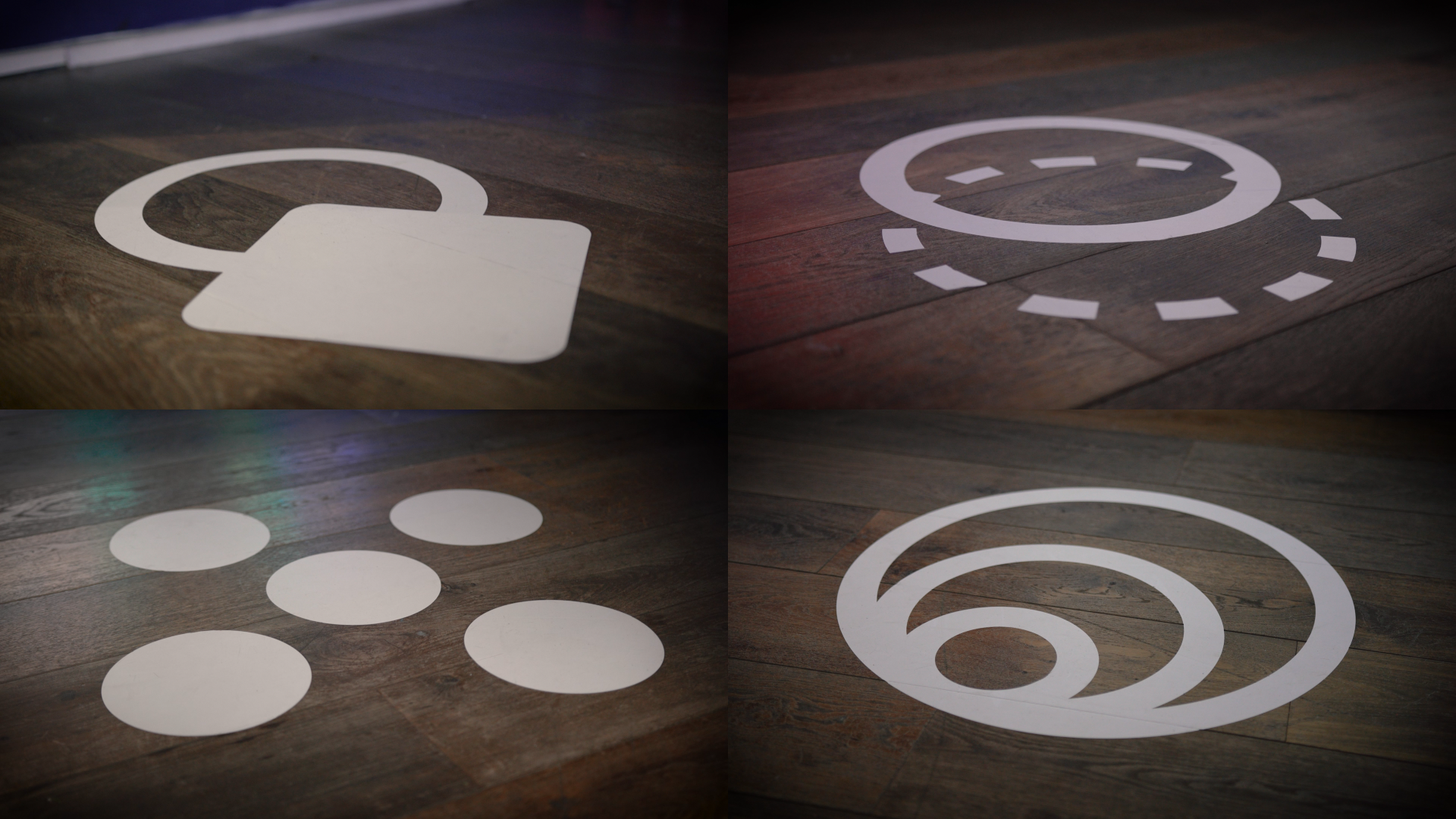

Hotspots marked with vinyl stickers

Hotspots were designated interactive areas within the installation, marked with vinyl stickers that encouraged users to engage with different effects. When a user stepped into one of these zones, it triggered a specific interactive event reinforced with audio feedback. To support this, each hotspot used a base set of charge, cancel, and activation sounds, along with unique trigger sounds tailored to its individual function.

To prevent unintended activations, wait and cool-down timers were implemented, ensuring that rapid or accidental stepping didn’t cause excessive triggering. Activation was managed through collider-based detection, tuned with delay adjustments to provide a smooth, intentional interaction.
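The charge, cancel, activation, wait, and cool-down behaviour described above maps naturally onto a small state machine. This sketch assumes per-frame updates with an `occupied` flag coming from the collider check; the timer lengths and event names such as `Play_Hotspot_Charge` are hypothetical stand-ins for whatever the project actually used.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    WAITING = auto()   # occupied, but not yet long enough to count as intentional
    CHARGING = auto()  # charge sound playing; leaving now cancels
    COOLDOWN = auto()  # recently activated; ignores input until the timer expires


class Hotspot:
    def __init__(self, trigger_event: str, wait: float = 0.3,
                 charge: float = 1.5, cooldown: float = 3.0) -> None:
        self.trigger_event = trigger_event  # hotspot-specific sound (hypothetical name)
        self.wait, self.charge, self.cooldown = wait, charge, cooldown
        self.state = State.IDLE
        self.timer = 0.0

    def update(self, occupied: bool, dt: float) -> list[str]:
        """Advance one frame and return the (hypothetical) Wwise events to post."""
        events: list[str] = []
        self.timer += dt
        if self.state is State.IDLE:
            if occupied:
                self.state, self.timer = State.WAITING, 0.0
        elif self.state is State.WAITING:
            if not occupied:
                self.state = State.IDLE  # brief step: no sound at all
            elif self.timer >= self.wait:
                self.state, self.timer = State.CHARGING, 0.0
                events.append("Play_Hotspot_Charge")
        elif self.state is State.CHARGING:
            if not occupied:
                self.state = State.IDLE
                events.append("Play_Hotspot_Cancel")
            elif self.timer >= self.charge:
                self.state, self.timer = State.COOLDOWN, 0.0
                events += ["Play_Hotspot_Activate", self.trigger_event]
        elif self.timer >= self.cooldown:  # COOLDOWN
            self.state = State.IDLE
        return events
```

The silent WAITING state is what filters out accidental steps: only a sustained presence reaches the audible charge stage.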

In addition to triggering event-specific sounds, some hotspots leveraged RTPCs to create more dynamic audio responses. For instance, one hotspot allowed users to inflate their particle clusters, increasing their virtual size. This effect used an RTPC to scale pitch and filter values, while also blending in a lower-frequency impact sound to reinforce the perception of added weight and mass.
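The inflate mapping can be sketched as a set of curves driven by one normalised size value. In the real system these curves would be drawn as RTPC graphs inside Wwise; the size range, the pitch and low-pass targets, and the equal-power crossfade to the low-frequency layer are illustrative assumptions.

```python
import math

MIN_SCALE, MAX_SCALE = 1.0, 3.0  # hypothetical inflate range (1 = normal size)


def lerp(a: float, b: float, t: float) -> float:
    return a + (b - a) * t


def inflate_params(scale: float) -> dict[str, float]:
    """Map particle-cluster scale to pitch, filter, and layer-blend values."""
    t = (scale - MIN_SCALE) / (MAX_SCALE - MIN_SCALE)
    t = max(0.0, min(1.0, t))  # clamp to a 0-1 working range
    return {
        "pitch_cents": lerp(0.0, -700.0, t),   # bigger clusters sound lower
        "lowpass": lerp(0.0, 40.0, t),         # Wwise-style 0-100 low-pass amount
        # Equal-power crossfade from the normal impact layer to a low-frequency layer
        "base_layer_gain": math.cos(t * math.pi / 2),
        "low_layer_gain": math.sin(t * math.pi / 2),
    }
```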

Timing & Synchronisation

Certain elements of the experience required tight integration with the music system, particularly the character music cues, which needed to align with the larger ambient soundscape. To ensure synchronisation, randomised timers were first used to initiate character events. Once triggered, these events waited for the next available grid-based music callback event in Wwise before proceeding.

When the callback event occurred, it triggered the character animation logic and a corresponding, randomised secondary music system event. This approach prevented interactions from feeling rigid or repetitive while maintaining a seamless sync between character events and the evolving musical landscape.
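The two-stage timing (a random timer first, then a wait for the next grid callback) can be sketched as a scheduler that arms itself when its timer expires and fires only from the music callback. In a Unity project, `on_grid_callback` could be called from a Wwise music-sync grid callback (the `AK_MusicSyncGrid` callback type); the delay range, cue names, and class name here are hypothetical.

```python
import random
from typing import Optional


class CharacterScheduler:
    """Random timer arms a character; the next music grid callback actually fires it."""

    def __init__(self, cues: list[str], min_delay: float = 20.0,
                 max_delay: float = 45.0, rng: Optional[random.Random] = None) -> None:
        self.cues = cues
        self.min_delay, self.max_delay = min_delay, max_delay
        self.rng = rng if rng is not None else random.Random()
        self.next_time = self.rng.uniform(min_delay, max_delay)
        self.armed = False

    def update(self, now: float) -> None:
        """Per-frame check: arm once the randomised timer has elapsed."""
        if not self.armed and now >= self.next_time:
            self.armed = True

    def on_grid_callback(self, now: float) -> Optional[str]:
        """Call from the music grid callback; returns a cue to play, or None."""
        if not self.armed:
            return None
        self.armed = False
        self.next_time = now + self.rng.uniform(self.min_delay, self.max_delay)
        # Caller starts the character animation and posts this cue on the
        # secondary music object at the same moment.
        return self.rng.choice(self.cues)
```

Splitting "when" (random timer) from "exactly when" (grid callback) keeps appearances unpredictable while every cue still lands on the musical grid.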

Reception & Impact

Observing how different demographics engaged with Wiggle Room was fascinating. Younger children (as young as two) often engaged instantly, while older children (aged 8–9) were sometimes less inclined to engage. Teenagers and adults were initially more reserved but, in some cases, spent extended periods exploring the interactions.

One memorable moment involved two elderly women spending 45 minutes in the space, fully immersed in playful movement. Some parents also expressed surprise at how quickly their children adapted to the interaction, even when they themselves were initially unsure how to engage.

From a sound perspective, the physics-based interactions created an intuitive audio feedback loop that helped ground the experience. Some users intentionally experimented with movement speeds and intensities, and a number commented on the satisfying qualities of the hotspot-triggered sounds.

Smaller, MIDI-Controlled Offshoot Exhibit

An unexpected opportunity arose to create a smaller, music-driven offshoot exhibit at the venue. Expanding on the existing Wiggle Room particle system, this version introduced MIDI keyboards as the primary input method, allowing users to interact with the installation through musical gestures.

The system mapped MIDI note data to both visual and audio elements, using note pitch, velocity, and duration to influence the particle and sound behaviour. Velocity determined particle size, while sustained notes increased swirling intensity, creating evolving, physics-based motion. Additionally, Wwise’s procedural synthesis tools were used to dynamically morph sound, responding in real time to user input.
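A sketch of the note-to-particle mapping is shown below, assuming standard MIDI note-on/note-off messages in which a note-on with velocity 0 counts as a note-off. The size range, swirl growth rate, and the rule that swirl follows the longest-held note are hypothetical choices, not the exhibit's tuned values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HeldNote:
    pitch: int
    velocity: int
    start: float


class MidiParticleMapper:
    def __init__(self, min_size=0.2, max_size=1.5, swirl_rate=0.5, max_swirl=1.0):
        self.min_size, self.max_size = min_size, max_size
        self.swirl_rate, self.max_swirl = swirl_rate, max_swirl
        self.held: dict[int, HeldNote] = {}

    def size_for(self, velocity: int) -> float:
        """MIDI velocity (1-127) scales particle size linearly."""
        return self.min_size + (self.max_size - self.min_size) * velocity / 127

    def note_on(self, pitch: int, velocity: int, now: float) -> Optional[dict]:
        if velocity == 0:  # MIDI convention: note-on with velocity 0 is a note-off
            self.note_off(pitch)
            return None
        self.held[pitch] = HeldNote(pitch, velocity, now)
        return {"pitch": pitch, "size": self.size_for(velocity)}

    def note_off(self, pitch: int) -> None:
        self.held.pop(pitch, None)

    def swirl(self, now: float) -> float:
        """Swirl intensity grows with how long the longest-held note has sustained."""
        if not self.held:
            return 0.0
        held_for = now - min(note.start for note in self.held.values())
        return min(self.max_swirl, held_for * self.swirl_rate)
```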

Since the installation repurposed the existing particle system, all impact audio remained intact, requiring only minor refinements. The LED-lit keys on the keyboards provided a colour-coded visual reference, reinforcing the connection between musical input and its resulting audiovisual effects.

Conclusion

Wiggle Room demonstrates how sound can transform an interactive space, reinforcing movement and play in a way that feels both intuitive and immersive. Through careful balancing of ambience, interactive feedback, and real-time physics-driven audio, we created an environment that dynamically adapted to its users.

The use of Wwise’s real-time parameter control, Switch-based event management, and callback-driven synchronisation allowed us to shape a soundscape that was both playful and unobtrusive. By layering dynamic music, tactile impact sounds, and hotspot-driven events, the installation maintained an organic, evolving sound world that encouraged continuous engagement without overwhelming the senses. The principles explored in Wiggle Room (sound-driven play, intuitive movement interaction, and adaptive audio techniques) will continue to shape how we approach interactive experiences.
