When you are authoring audio in Wwise, CPU usage is an essential concern, and you need to balance performance against quality. If you are authoring for multiple platforms, you have to be aware of each platform's technical limitations and requirements, and also consider the CPU resources required by any accompanying software.

Many Wwise features and plug-ins affect CPU usage. The topics in this section provide an overview of the major factors that affect CPU usage and offer strategies and best practices for CPU optimization. In general, the process consists of using the Profiler (refer to Profiling) to monitor CPU performance, determining which factors you need to address, and then using the guidelines in this section as a starting point to explore possible solutions.

Every Wwise project is different, available CPU resources vary by platform, and quality and performance requirements also vary, so there is no single set of guidelines or recommendations that applies to all cases. Instead, you have to experiment, listen to your sounds in Wwise, and determine the optimal settings. The following major factors affect CPU usage:

Number of concurrent voices. The number of voices, especially physical voices, has a large impact on CPU usage. As the number of voices increases, CPU usage increases with it.

Effects. Wwise audio Effects consume CPU, and the amount varies depending on the specific Effect, where the Effect is used, and whether it is rendered.

Audio codecs. Decoding audio files that were converted with a codec uses CPU, and some codecs require more CPU resources than others.

Spatial Audio. The various options and settings that control 3D acoustics can have a major impact on CPU usage.

Each of these topics is described in further detail in the following sections.

Because so many variables contribute to CPU usage in Wwise, there is no single, easy way to optimize CPU usage in all cases. Instead, consider all of the factors that are relevant to your project, determine acceptable levels of CPU usage (which can vary by platform), and then use the Wwise Profiler to identify which properties and settings use the most CPU and adjust them accordingly. The right balance between CPU and quality is ultimately a matter of judgment.

As a general approach, use the Profiler (refer to Profiling) to monitor performance during Capture Sessions, identify overall CPU usage and peaks, and then view the specific factors that contribute to CPU usage in the Advanced Profiler and Performance Monitor. In particular, pay attention to the following things:

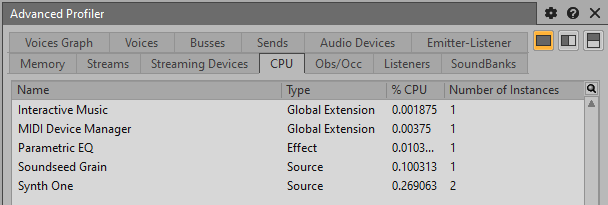

In the Advanced Profiler, the CPU tab displays all of the elements that contributed to CPU usage during the session. You can then identify which plug-ins, audio codecs, Effects, and so on use the most CPU resources.

In the Performance Monitor Settings dialog, select the relevant items whose names include "CPU". The following options can be useful in the graph, the list, or both:

CPU - Plug-in Total

CPU - Total

Spatial Audio - CPU

Spatial Audio - Path Validation CPU

Spatial Audio - Portal Path Validation CPU

Spatial Audio - Portal Raytracing CPU

Spatial Audio - Raytracing CPU

Together, the Advanced Profiler and Performance Monitor provide a comprehensive picture of CPU usage during a Capture Session. After you identify which factors contribute to CPU usage, you can use the suggestions described in the other topics in this section to optimize your project's performance.
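The review loop described above, which compares overall usage and peaks against a per-platform budget, can be sketched as a small script. This is a minimal sketch that assumes you already have per-frame CPU percentages from a Capture Session in some exported form. The `summarize_cpu` function, the sample values, and the platform budgets are all hypothetical and are not part of Wwise.

```python
from statistics import mean


def summarize_cpu(samples, budget_percent):
    """Summarize per-frame CPU percentages against a CPU budget.

    `samples` is a hypothetical list of CPU percentages, one per frame,
    taken from a Capture Session; it is not a Wwise data format.
    """
    if not samples:
        raise ValueError("samples must not be empty")
    peak = max(samples)
    return {
        "average": mean(samples),
        "peak": peak,
        "peak_frame": samples.index(peak),
        # Frames that exceed the budget are the spikes worth investigating
        # in the Advanced Profiler's CPU tab.
        "frames_over_budget": [i for i, s in enumerate(samples) if s > budget_percent],
    }


# Made-up capture data and made-up per-platform budgets, for illustration only.
capture = [6.0, 7.5, 12.0, 8.0, 15.5, 7.0]
budgets = {"PlatformA": 10.0, "PlatformB": 20.0}

for platform, budget in budgets.items():
    report = summarize_cpu(capture, budget)
    print(platform, report["peak"], report["frames_over_budget"])
# PlatformA 15.5 [2, 4]
# PlatformB 15.5 []
```

The same capture can be acceptable on one platform and over budget on another, which is why acceptable levels are best decided per platform.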

The number of concurrent physical voices (that is, sounds that actually play) has a major impact on CPU usage. To reduce the amount of CPU resources that voices use, you can use the following settings to reduce the number of concurrent voices:

Playback limits determine the maximum number of instances of a sound that can play at the same time.

Priority determines which sounds are killed or handled by their virtual voice behavior when the playback limit is exceeded.

Virtual voices are sounds that are active at runtime but do not actually play because they fall below a defined volume threshold or exceed the defined playback limits. The recommended virtual voice behavior is "Kill if finite else virtual". Refer to Advanced Settings Tab: Actor-Mixer Objects.

For more information, refer to Managing priority.
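To see how these three settings interact, consider the following simplified model of a single sound's playback limit. This is a conceptual sketch, not Wwise's actual voice manager. The `Voice` class and `resolve_voices` function are hypothetical. The model assumes that voices below the volume threshold go straight to virtual voice behavior, that the highest-priority voices fill the playback limit, and that every other voice follows "Kill if finite else virtual".

```python
from dataclasses import dataclass


@dataclass
class Voice:
    name: str
    priority: int      # Wwise priorities range from 0 to 100; higher wins
    volume_db: float   # current volume at the listener
    looping: bool      # an infinite (looping) sound rather than a finite one


def resolve_voices(voices, playback_limit, volume_threshold_db):
    """Split voices into (physical, virtual, killed) lists.

    Simplified model, not Wwise's actual voice manager:
    - voices below the volume threshold go to virtual voice behavior;
    - of the remaining voices, the `playback_limit` highest-priority ones play;
    - every other voice follows "Kill if finite else virtual".
    """
    virtual, killed = [], []

    def virtual_voice_behavior(voice):
        # "Kill if finite else virtual": one-shots are killed, loops go virtual.
        (virtual if voice.looping else killed).append(voice)

    audible = []
    for voice in voices:
        if voice.volume_db < volume_threshold_db:
            virtual_voice_behavior(voice)
        else:
            audible.append(voice)

    # Stable sort: among equal priorities, voices earlier in the list keep playing.
    audible.sort(key=lambda v: v.priority, reverse=True)
    for voice in audible[playback_limit:]:
        virtual_voice_behavior(voice)
    return audible[:playback_limit], virtual, killed


voices = [
    Voice("footstep_1", 50, -12.0, looping=False),
    Voice("footstep_2", 50, -15.0, looping=False),
    Voice("engine_loop", 80, -6.0, looping=True),
    Voice("rain_loop", 30, -20.0, looping=True),
    Voice("distant_bird", 40, -90.0, looping=False),
]
physical, virtual, killed = resolve_voices(voices, playback_limit=2, volume_threshold_db=-80.0)
print([v.name for v in physical])  # ['engine_loop', 'footstep_1']
print([v.name for v in virtual])   # ['rain_loop']
print([v.name for v in killed])    # ['distant_bird', 'footstep_2']
```

Only two voices cost full CPU here. The looping rain becomes virtual so that it can resume later, while the inaudible and over-limit one-shots are killed outright. In this sketch, equal-priority ties keep the earlier voice. Wwise lets you choose how ties are resolved, so check the project's setting.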

Effects consume CPU resources. In general, CPU usage increases with the number of Effect instances that play at the same time, although there are several additional considerations:

Number of channels. CPU usage increases with the number of audio channels, and the number of channels varies with the audio bus. For example, three instances of an Effect on a mono sound (three channels in total) use fewer CPU resources than a single instance of an Effect on a 7.1 bus (eight channels).

In addition, certain Effects, such as Object Processors, are instantiated only once per bus instance. For more information on Effects, Audio Objects, and buses, refer to Using Effects with Audio Objects.

Type of Effect. Different types of Effect use different amounts of CPU. Reverb Effects are a special case: although the CPU usage of most other Effects increases linearly with the number of channels, the CPU usage of reverb Effects increases at a flatter rate. Within the reverb Effect category, different types of reverb use different amounts of CPU. Matrix Reverb, for example, uses less CPU than RoomVerb, although it has fewer options. Other Effects, such as Compressor or Parametric EQ, use less CPU than reverbs.

Effect rendering. One way to reduce Effect CPU consumption is to render Effects so that they are part of the WEM files themselves. When Effects are rendered, they are processed before they are packaged in SoundBanks and therefore do not consume runtime processing power. You can identify Effects that are good candidates for rendering through the Integrity Report: select the Optimizations option and run the report. For more information about rendering, refer to Rendering Effects.

Bypassing Effects. If certain Effects are only applicable in certain situations, you can selectively bypass them. For example, if you have a distortion Effect that only applies during certain player states in a game, you can use an RTPC on the Bypass Effect property, driven by a Game Parameter that reflects the player state.
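The channel arithmetic and the effect of bypassing can be illustrated with a rough mental model. The model assumes that each active Effect instance costs one unit per channel it processes and that a bypassed instance costs nothing. This is a simplification that ignores the reverb and Object Processor special cases described above. The function names are hypothetical, and the units are relative, not measured Wwise figures.

```python
def effect_channel_load(instances):
    """Relative Effect load, counted as the total number of channels processed.

    `instances` is a list of (channel_count, bypassed) pairs. This is a
    rough model: real costs depend on the Effect type (reverbs scale more
    flatly), and bypassed instances are treated as free.
    """
    return sum(channels for channels, bypassed in instances if not bypassed)


MONO, SURROUND_7_1 = 1, 8

three_on_mono = [(MONO, False)] * 3     # three instances on a mono sound
one_on_7_1 = [(SURROUND_7_1, False)]    # one instance on a 7.1 bus
print(effect_channel_load(three_on_mono), effect_channel_load(one_on_7_1))  # 3 8


def distortion_instances(player_state_active, channels=2):
    """Hypothetical stereo distortion Effect that is bypassed outside a player state.

    Mirrors an RTPC that drives the Bypass Effect property from a Game
    Parameter; `player_state_active` stands in for that Game Parameter.
    """
    return [(channels, not player_state_active)]


print(effect_channel_load(distortion_instances(False)))  # 0
```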

Wwise uses audio codecs to compress and decompress audio files. Typically, you need to compress audio files so that they fit within a project's memory budget. However, codecs require CPU resources, and in general the codecs that produce the highest quality audio also use the most CPU. When deciding which codecs to use, you will have to balance CPU usage, memory usage, storage space, and audio quality.
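To see why compression is usually necessary, consider the raw size of uncompressed PCM sample data: sample rate × channels × bytes per sample × duration. The sketch below assumes 48 kHz, 16-bit stereo audio for illustration. Your project's conversion settings may use other values, and `pcm_bytes` is a hypothetical helper.

```python
def pcm_bytes(sample_rate_hz, channels, bits_per_sample, seconds):
    """Size in bytes of uncompressed PCM sample data (file headers excluded)."""
    return sample_rate_hz * channels * (bits_per_sample // 8) * seconds


one_minute_stereo = pcm_bytes(48_000, 2, 16, 60)
print(one_minute_stereo)                     # 11520000
print(round(one_minute_stereo / 2**20, 1))   # 11.0 (MiB)
```

A single minute of such audio takes about 11 MiB before compression. A codec shrinks that figure in exchange for the CPU cost of decoding at runtime.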

If you need to support multiple platforms, you can use different codecs for different platforms through Conversion Settings ShareSets. For more information, refer to Creating audio Conversion Settings ShareSets.

Wwise supports the following codecs:

PCM is not an audio codec, but rather an uncompressed file format. It provides high-quality sound and uses minimal CPU processing power, but requires a lot of storage space.

To minimize CPU usage, use this format for short, repetitive sounds or high-fidelity audio.

Vorbis is a very popular codec that provides good-quality audio for a modest amount of CPU resources, and in Wwise it is optimized for interactive media and gaming platforms.

To minimize CPU usage, avoid this format for audio files that repeat frequently, because some CPU power is required to decompress the files whenever they play. Vorbis works well with most other types of sound, however.

Opus provides audio quality similar to Vorbis, with greater file compression. However, it requires more CPU resources than Vorbis. It can be useful for long, non-repeating audio sources such as dialog or ambiences. Refer to the platform-specific sections in the Wwise SDK for more details on decoding Opus files.

ADPCM provides good compression and uses minimal CPU power at the cost of quality. Use it with mobile platforms to minimize CPU usage and runtime memory.
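The codec guidance above can be condensed into a starting-point rule of thumb, evaluated per platform in the same way you would fill in platform-specific Conversion Settings. This is a minimal sketch, not a Wwise feature. The `suggest_codec` function, its parameters, and the 2-second threshold for a "short" sound are arbitrary assumptions. Always confirm the result by listening and profiling.

```python
from enum import Enum


class Codec(Enum):
    PCM = "PCM"
    ADPCM = "ADPCM"
    VORBIS = "Vorbis"
    OPUS = "Opus"


def suggest_codec(duration_s, repeats_often, mobile, prefer_small_files=False,
                  short_threshold_s=2.0):
    """Return a starting-point codec that follows the guidance above.

    The 2-second threshold for a "short" sound is an arbitrary assumption.
    """
    if mobile:
        return Codec.ADPCM      # minimal CPU and runtime memory on mobile
    if repeats_often and duration_s <= short_threshold_s:
        return Codec.PCM        # no decoding cost for short, repetitive sounds
    if prefer_small_files and not repeats_often and duration_s > short_threshold_s:
        return Codec.OPUS       # long, non-repeating dialog or ambiences
    return Codec.VORBIS         # good quality for modest CPU in most other cases


# One short, frequently repeated sound evaluated for two kinds of platform.
for platform, is_mobile in [("Desktop", False), ("Mobile", True)]:
    print(platform, suggest_codec(0.4, repeats_often=True, mobile=is_mobile).value)
# Desktop PCM
# Mobile ADPCM
```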

In Wwise, the term "spatial audio" refers to a variety of settings and configurations that relate to sound in simulated 3D environments, such as attenuation, diffraction, reflection, and so on. Many aspects of spatial audio require CPU processing power, so you will have to test different options and decide on the appropriate balance between quality and performance.

In Wwise Authoring, many of the options are available on the Positioning tab in the Property Editor (refer to Positioning Tab: Actor-Mixer and Interactive Music Objects and Positioning Tab: Audio and Auxiliary Busses).

Spatial audio uses CPU processing power for numerous calculations related to emitter and listener positioning, sound attenuation, sound reflection off 3D objects, diffraction, and so on. There are many possible combinations of spatial audio Effects and settings, so there are no simple guidelines for CPU optimization. However, the following suggestions might be helpful if the Profiler shows that spatial audio is consuming a lot of CPU resources:

Ensure that the spatial geometry is as simple as possible. Minimize the number of triangles and the number of diffraction edges.

Use finite attenuations for sounds. As the maximum attenuation radius increases, more CPU processing power is required to search for paths. An attenuation curve is finite if, at its maximum distance, it has dropped below the audibility threshold set in the project settings.

Avoid playing sounds if they are too far away to be heard, and stop them when they move beyond the attenuation radius and will not return.

Use rooms and portals to isolate independent sections of a scene.
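The attenuation suggestions can be expressed as a few game-side checks. This is a minimal sketch under stated assumptions. The curve is a hypothetical list of (distance, volume in dB) points whose last point is the maximum attenuation radius, the -80 dB threshold is only an example value, and `may_return` stands in for whatever knowledge the game has about an emitter coming back. None of these functions are Wwise APIs.

```python
import math


def is_finite(curve_points, volume_threshold_db):
    """True if the attenuation curve ends below the volume threshold.

    `curve_points` is a hypothetical list of (distance, volume_db) pairs
    sorted by distance; the last point is the maximum attenuation radius.
    """
    _, volume_at_max_db = curve_points[-1]
    return volume_at_max_db < volume_threshold_db


def should_start(emitter_pos, listener_pos, max_radius):
    """Skip starting a sound that is already beyond its attenuation radius."""
    return math.dist(emitter_pos, listener_pos) <= max_radius


def should_stop(emitter_pos, listener_pos, max_radius, may_return):
    """Stop a sound that left its radius, unless the game expects it back."""
    return math.dist(emitter_pos, listener_pos) > max_radius and not may_return


curve = [(0.0, 0.0), (20.0, -24.0), (50.0, -96.0)]  # made-up attenuation curve
origin = (0.0, 0.0, 0.0)
far_emitter = (60.0, 0.0, 0.0)

print(is_finite(curve, volume_threshold_db=-80.0))                  # True
print(should_start(far_emitter, origin, max_radius=50.0))           # False
print(should_stop(far_emitter, origin, 50.0, may_return=False))     # True
```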

When initializing spatial audio, you can set several Spatial Audio Initialization Settings to control CPU usage:

Use CPU load balancing.

Increase the movement threshold required to recalculate reflections and diffraction, which reduces CPU usage at the cost of accuracy.

Reduce the number of primary rays used in the raytracing engine.

Reduce the maximum reflection order. First-order and second-order reflections are sufficient in most cases.

Reduce the diffraction order. Start with 4; a value of 2 or even 1 can be acceptable.
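Two of these settings can be illustrated with a conceptual model. With a movement threshold, paths are recomputed only after an emitter or listener has moved far enough. One way to picture load balancing is as spreading the work for many emitters across several frames. The sketch below is not Wwise's implementation: `PathCache`, `count_recomputations`, and `emitters_for_frame` are hypothetical names. In the SDK, these options are set in the `AkSpatialAudioInitSettings` structure passed to `AK::SpatialAudio::Init`. Check the SDK reference for the exact field names in your Wwise version.

```python
import math


class PathCache:
    """Recompute expensive paths only after significant movement.

    A conceptual model of a movement threshold; not Wwise's implementation.
    """

    def __init__(self, movement_threshold):
        self.movement_threshold = movement_threshold
        self.last_emitter = None
        self.last_listener = None
        self.recomputations = 0

    def update(self, emitter, listener):
        """Return True if paths were recomputed for these positions."""
        moved = (
            self.last_emitter is None
            or math.dist(emitter, self.last_emitter) > self.movement_threshold
            or math.dist(listener, self.last_listener) > self.movement_threshold
        )
        if moved:
            # Stand-in for the reflection and diffraction path search.
            self.recomputations += 1
            self.last_emitter, self.last_listener = emitter, listener
        return moved


def count_recomputations(movement_threshold, frames=10, step=0.1):
    """Move an emitter `step` units per frame past a fixed listener."""
    cache = PathCache(movement_threshold)
    for frame in range(frames):
        cache.update((frame * step, 0.0, 0.0), (0.0, 0.0, 0.0))
    return cache.recomputations


def emitters_for_frame(emitter_ids, frame, spread):
    """Load balancing: each emitter is processed once every `spread` frames."""
    return [e for i, e in enumerate(emitter_ids) if i % spread == frame % spread]


print(count_recomputations(0.05), count_recomputations(0.5))   # 10 2
print(emitters_for_frame(["a", "b", "c", "d"], frame=0, spread=2))  # ['a', 'c']
```

Raising the threshold from 0.05 to 0.5 units cuts the path searches in this example from 10 to 2, at the cost of paths that lag slightly behind the emitter's true position.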

For more information on spatial audio, including suggestions on CPU optimization, refer to the following documents:

Additional CPU optimization resources

In addition to this documentation, several resources contain information about CPU optimization: